分享节省游戏内容数字传输成本的方法

作者:Colt McAnlis

如今的游戏开发者在数字传输平台上拥有了更加广泛的选择。当一款游戏过大时,开发者便需要使用一种与配送服务不同的资源去传输其中的某些内容。而对于那些不熟悉网页开发的人来说,这便是一个全新的领域。

所以我将通过本篇文章详细阐述如何在网页上传输游戏内容。亲自发行自己的数字资产对开发者来说非常有帮助,因为这能让你更好地取悦用户。

当提到数字传输时,我们总是需要考虑两大主要元素,即时间和金钱:时间是指用户下载内容并开始游戏的过程中所花费的时间,而金钱则是指开发者在传输这些数据时所花费的金钱。正如你所猜想的,这两大元素相互关联。

衡量时间的成本:带宽和延迟

我们都知道用户讨厌过长的加载时间,这也很大程度地制约着一个网站的成败。为了更好地理解这一问题,我们将着眼于有关下载时间(从全世界的服务器到圣何塞的图书馆内的一台公共电脑)的速度测试结果并对此进行分析。

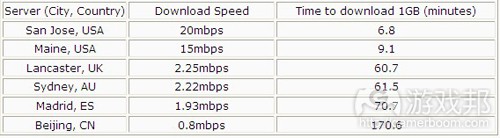

基于标准的下载速度,以下是不同地区的服务器在下载1GB内容所需要的时间:

基于服务器所处的不同地区,传输相同数量的数据将会花费7分钟至3个小时不等的时间。为什么会出现下载时间多样性的情况?为了搞清楚这一问题,我们首先需要考虑两个元素:带宽和延迟。

带宽是指在特定时间内能够进行传输的信息量。我们可以通过从一个位置传输一个数据包到另一个位置所需要的时间进行测量。开发者对于带宽具有一定的控制权(如能够压缩数据包的大小或传输数据包的数量),但是最终带宽还是受控于用户与传输服务间的基础设施,并且每个服务协议都拥有它们各自的互联网服务提供商(ISP)。

举个例子来说,ISP可能会向用户提供不同等级的带宽服务,或者基于每日使用限额对带宽进行限制,而通往用户平台的“最后一英里”连接(无论是铜线还是其他线路)也会导致带宽下降(游戏邦注:例如缓慢的网络连接便是一大限制因素)。

延迟是关于测量系统中的时间拖延。在网络术语中,延迟总是与往返时间(RTT)联系在一起,因为这是关于两点之间的传输,所以我们能够很轻松地进行测量。我们可以将RTT作为一个从目标上反弹并回到发射器上的声音。Unix的ping指令便是基于这一原理。

作为开发者,你对延迟几乎没有控制权。你的算法可能会带来一些额外开销并累加到延迟上,但是发射器与接收器之间的实际距离以及光速为RTT设定了一个硬性下限。也就是说,在特定介质(如铜线或光纤)上,两点之间的物理距离限制了数据传输的最快速度。

你也许认为通过购买更快速的带宽连接便能够确保内容的快速传输。如果你清楚用户的具体位置并确保他们不会到处移动,这一方法便非常有效;但是就像Ilya Grigorik所指出的,关于延迟的改进将更大程度地影响着下载时间,而非带宽的完善。

降低延迟的最简单也是最普及的一种方法便是使用内容分发网络(CDN)来缩短用户与内容服务器之间的距离。

基于位置而缓解延迟问题

CDN将通过复制并在世界各地的数据中心保存你的数据而减少从全球任一点获取一个文件所需要的时间。举个例子来说,我最初在圣何塞的CDN服务器上传了一份文件,而来自北京的用户则可以通过中国的服务器接受这一文件。

当你在使用CDN时,你便可以利用网络结构的一大基本功能:互联网的核心便是一个缓存数据的层面。YouTube便是个典型的例子。当你上传了一个视频后,它将被分散到世界各地的YouTube数据中心,从而避免了从原始数据中心面向任何未知位置传输文件所需要的高额成本。Google Cloud Storage便是基于这一原理——在需求较高的区域同时设置多个中间缓存。例如在巴黎便具有2个附加数据中心。

你也许不知道网络中还隐藏了另一种效能:为了在之后进行更快速的检索,客户端机器总是会对数据进行缓存,而ISP也会在面向终端用户传输数据前对其进行缓存——这么做能够确保位体更接近用户并最终达到降低数据传输成本的作用。

基于技术而缓解延迟问题

一些CDN提供了能够加速传输速度的高级传输协议。Google App Engine便支持SPDY协议——旨在缩短延迟时间并克服客户端中并发连接受限的情况。

CDN还能够确保数据访问的灵活性。Google Cloud Storage便支持跨源资源共享(CORS)以及访问控制列表(可用于编写脚本)。而这些工具能够帮助你明确最佳内容间隔,并基于特定用户类型而匹配特定资产。Google App Engine便可以用于编写脚本。编写脚本能够帮助你提高在线资源的安全性,例如编写能够察觉到任何可疑行为的代码,如来自多个客户端对于某一资产的连续请求。

使用CDN能够帮助你面向世界各地的用户更有效且更安全地传输数据。

关于手机内容的传输

除了延迟和下载速度外,手机网络还存在着不同的问题。Ilya Grigorik对此做出的解释:

“移动网络则完全是另一回事,而且情况并没有更好。如果你足够幸运,无线电模块处于开启状态,那么根据网络、信号质量和时段的不同,仅仅连接到互联网骨干网就需要花费50至200毫秒以上的时间。在此基础上加上骨干网的传输时间再乘以2,便得到了移动网络上的RTT范围(即100至1000毫秒)。

以下是来自Virgin Mobile(游戏邦注:隶属于美国第四大无线通讯运营商Sprint)网络常见问题解答中的一些细则:Sprint 4G网络的用户可以预期平均3Mbps至6Mbps的下载速度和最高1.5Mbps的上传速度,平均延迟时间为150毫秒。在Sprint 3G网络中,用户则可以预期平均600Kbps至1.4Mbps的下载速度以及350Kbps至500Kbps的上传速度,平均延迟时间为400毫秒。

更糟糕的是,如果你的手机一直处于闲置状态且无线电模块已关闭,你还需要额外花费1000至2000毫秒去协商建立无线电链路。”

所以任何手机开发者在试图提升数据传输速度时都必须考虑到这些问题。并确保你的流系统和压缩系统都能够补偿这些额外的负担。

内容传输的现金成本

使用CDN需要投入一定的金钱。例如Google Cloud Storage对每个月首个1TB的数据传输收取约每GB 0.12美元的费用。

让我们以例子进行说明。假设你的游戏平均每个月拥有340万的独立用户。你的游戏内容为1GB,而Google Cloud Storage在每月传输0.66PB的用量级别上每GB收取约0.085美元,那么你每天便需要为此投入约9633美元。

所以为了保持收支平衡,你每天必须从每个用户身上赚取约0.003美元才能负担如此规模的内容传输。而如果你每个月能够获得340万名用户,你应该很容易做到这一点。

但是不得不承认的是这些数字都是不现实的;你根本不可能每天面向340万名独立用户提供1GB的内容。如此估算,只需要几个月的时间世界上所有人都能够看到你的内容——所以这并不能算是一种长期的方法。

明确所需要的时间和成本便是成功的一半

当你清楚资产传输所需要的时间和成本,你便能够规划接下来的任务了。

只传输用户需要的内容

直到现在我们仍然在假设每个用户在开始游戏时都需要1GB的内容,但这却大错特错。事实上,用户只需要一个数据子集便能够开始游戏。基于分析,你将发现我们总是能够快速传输那些初始数据,所以用户总是能够快速进入内容体验,而剩下的数据将会默默地流向幕后。

让我们举个例子来说,如果用户从一个网站或数字软件商店中下载了首个20兆的内容,他们能否立刻开始体验游戏内容或在之后获得剩下的内容?需要经历多长时间他们才能接触到下一个20兆的内容?那下个400兆的内容呢?CDN是否能够更快或更灵活地传输后续内容?优化这种使用方法将能够减少加载时间以及总体的传输成本,并加强产品的易用性和实惠。

确保用户能够接收到更新内容

在现在的游戏开发世界中,同时运行于多个不同的平台已经不是件新鲜事了。所以当你的内容能够进行更新时,你便需要花点时间去确保所有用户都能够接收到新内容。

假设你已经推送了游戏服务器的新版本,但是有些玩家在很长一段时间内都未能与之保持同步,如此你的QA测试员便会收到大量像“OMG th1s g4m3 duznt werk!”这样的漏洞报告。控制应用的更新方式与时间具有很大的好处,尽管有时候更新逻辑由操作系统管理,完全不受你控制。只要有可能,你的应用就应该能够察觉到新的更新并主动获取这些内容。

大多数应用都将包含一些平台所特有的资产。例如,受硬件支持的纹理压缩格式在不同平台上各不相同,你可能需要一套单独的低分辨率模型才能在手机上运行,或者某些内容在不同区域中也各不相同。而当其中任何资产发生改变时,相关的更新并不需要面向所有用户。如果你能够基于平台或区域去划分至少一部分内容,你便能够更好地控制更新时间,更新对象以及传输内容了。

进一步发展

老实说,我们在此所讨论的所有传输策略并不是针对于每一个人。如果你的应用小于10兆,你便不需要分割你的资产或脱离主要的传输点去传输内容。

而如果你的应用较大,你便应该理解传输游戏的数字资产到底需要花费多少成本,以及你该如何去压缩这些成本以及用户的成本。除此之外你还应该考虑传输策略如何能够减少加载时间并避免更新所引起的各种问题。通过把控内容传输,你便能够有效地节约成本并提高终端用户的体验质量了。

(本文为游戏邦/gamerboom.com编译,拒绝任何不保留版权的转载,如需转载请联系:游戏邦)

Cover your assets: The cost of distributing your game’s digital content

By Colt McAnlis

Today’s game developers have a wide choice of digital distribution platforms from which to sell their products. When a game is very large it’s often necessary to deliver some of its content from another source, separate from the distribution service. This can be new territory for those of you who are unfamiliar with web development.

In this article, we’ll discuss some of the concepts and strategies that will help you decide how to distribute your content on the web. It is well worth the effort to distribute your digital assets yourself because it gives you the chance to make your users happier.

When thinking about digital delivery, the two main factors to consider are time and money: The time it takes the user to download content and start playing, and the dollar cost to you, the developer, to deliver the bits. As you might suspect, the two are related.

Measuring the cost of time: bandwidth and latency

We all know that users hate long load times, which are already known to be a large factor in the success of websites. In order to better understand the issues, let’s take a look at some results from running speedtest to measure download times from various servers around the world to a public computer in a San Jose library.

Using the measured download speeds, here are the times it would take to download 1GB from various locations:

Depending on the location of the server, it can take from about 7 minutes to almost 3 hours to transfer the same amount of data. Why is there such a variation in download time? To answer the question, you must consider two terms: bandwidth and latency.

Bandwidth is the amount of data that can be transmitted in a fixed amount of time. It can be measured by how long it takes to send a packet of data from one place to another. Developers have some control over bandwidth (for example by reducing packet size or the number of packets transmitted), but bandwidth is ultimately controlled by the infrastructure between the user and the distribution service and the service agreements that each has with their respective ISPs.

For instance, an ISP may offer different tiers of bandwidth service to users, or may throttle the bandwidth based upon daily usage limits, and the “last mile” of copper – or whatever the connection is to the user’s platform – can also cause bandwidth to degrade. (In other words, a chain is only as strong as its weakest link.)
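To make the 7-minute and 3-hour figures more concrete, here is a rough sketch that converts between transfer size, sustained bandwidth, and download time. It assumes decimal units (1 GB = 10^9 bytes) and a perfectly steady link, which real connections rarely provide; the function names are illustrative only.

```python
def download_seconds(size_gb: float, mbps: float) -> float:
    """Seconds needed to move size_gb gigabytes over a link sustaining mbps megabits/s."""
    bits = size_gb * 8 * 1000**3          # decimal gigabytes -> bits
    return bits / (mbps * 1_000_000)


def required_mbps(size_gb: float, seconds: float) -> float:
    """Sustained megabits/s needed to move size_gb gigabytes in the given time."""
    bits = size_gb * 8 * 1000**3
    return bits / seconds / 1_000_000


# The two extremes quoted in the article for a 1 GB download.
for label, seconds in [("7 minutes", 7 * 60), ("3 hours", 3 * 3600)]:
    print(f"1 GB in {label}: about {required_mbps(1, seconds):.2f} Mbps sustained")
```

Under these assumptions, the fast case corresponds to roughly 19 Mbps of sustained throughput and the slow case to well under 1 Mbps.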

Latency measures the time delay experienced in a system. In networking parlance, latency is often referred to as round trip time (RTT), which is easier to measure since it can be done from one point. You can imagine RTT as the time it takes for a sonar ping to bounce off a target and return to the transmitter. The familiar Unix ping program does just that.

As a developer, you have little control over latency. Your algorithms might contribute some overhead that will accrue to latency, but the physical realities of the distance between the transmitter and receiver and the speed of light impose a hard lower bound on RTT. That is, the physical distance between two points across a specific medium (like copper wire or fiber cable) caps how fast data can be transmitted through it.
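The lower bound on RTT can be estimated with simple arithmetic. The sketch below assumes that signals in optical fiber travel at roughly two thirds of the speed of light in a vacuum, and it uses an approximate great-circle distance of 9,500 km between San Jose and Beijing; both numbers are assumptions for illustration, and real cable routes are longer than the great-circle path.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458   # speed of light in a vacuum
FIBER_FACTOR = 2 / 3                # assumption: propagation speed in fiber is about 2/3 of c


def min_rtt_ms(distance_km: float, velocity_factor: float = FIBER_FACTOR) -> float:
    """Theoretical minimum round trip time in milliseconds over the given distance."""
    one_way_seconds = distance_km / (SPEED_OF_LIGHT_KM_S * velocity_factor)
    return 2 * one_way_seconds * 1000


# Assumed, approximate distance; not a measured cable length.
SAN_JOSE_TO_BEIJING_KM = 9_500
print(f"Best-case RTT: {min_rtt_ms(SAN_JOSE_TO_BEIJING_KM):.0f} ms")
```

With these inputs the floor is on the order of 95 ms before any routing, queuing, or server time is added, which is why moving the content closer to the user is the only way to push the number down substantially.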

At first glance, you might think that you can buy your way to faster content delivery by purchasing higher bandwidth connections. This might work if you know exactly where your users are located and they don’t move; however, as Ilya Grigorik points out, incremental improvements in latency can have a much greater effect on download times than bandwidth improvements. (His argument relies on some interesting work by Mike Belshe, who makes the same case.)
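A toy model helps show why latency can matter more than bandwidth. The sketch below treats total fetch time as raw transfer time plus one RTT of waiting per sequential round trip. It deliberately ignores TCP slow start, parallel connections, and server time, and every number in it is an illustrative assumption rather than a measurement.

```python
def fetch_seconds(size_mb: float, mbps: float, rtt_ms: float, round_trips: int) -> float:
    """Toy estimate: transfer time plus time spent waiting on sequential round trips."""
    transfer = size_mb * 8 / mbps              # megabits / (megabits per second)
    waiting = round_trips * rtt_ms / 1000      # each round trip costs one RTT
    return transfer + waiting


# Assumed workload: 0.5 MB of small assets fetched over 20 sequential round trips.
baseline = fetch_seconds(0.5, mbps=5, rtt_ms=200, round_trips=20)
double_bandwidth = fetch_seconds(0.5, mbps=10, rtt_ms=200, round_trips=20)
half_latency = fetch_seconds(0.5, mbps=5, rtt_ms=100, round_trips=20)

print(f"baseline:         {baseline:.1f} s")
print(f"double bandwidth: {double_bandwidth:.1f} s")
print(f"half latency:     {half_latency:.1f} s")
```

In this model, halving the RTT saves far more time than doubling the bandwidth, because most of the wall-clock time is spent waiting rather than transferring. For a single large file fetched in one request the balance shifts back toward bandwidth, so the result depends heavily on how chatty the download is.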

The simplest and most popular way to reduce latency is to minimize the distance between the user and the content server using a Content Delivery Network, or CDN.

Attacking latency with locality

Content Delivery Networks duplicate and store your data in multiple data centers around the world, reducing the amount of time it takes to fetch a file from an arbitrary point on the globe. For instance, I may have originally uploaded a file to a CDN server that happened to be in San Jose, but a user downloading the file from Beijing would most likely receive the file from a server in China.

When you use a Content Delivery Network you take advantage of one of the basic features of internet architecture: The internet is, at its core, a hierarchy of cached data. YouTube is an excellent example. Once a video is uploaded, it is distributed to the primary YouTube data centers around the world, avoiding the higher cost of sending the file from the originating data center no matter where the request is coming from. Google Cloud Storage also uses a similar policy. In high-demand areas multiple intermediate caches can exist. For example, there may be two additional data centers in Paris.

You may not be aware of another hidden efficiency of the net: Client machines usually cache data for faster retrieval later, but data can be cached by an Internet Service Provider (ISP) as well, before sending it on to the end user – all in an attempt to reduce the cost of data transfer by keeping the bits closer to the users.

Attacking latency with technology

Some CDNs provide advanced transfer protocols that can speed up delivery even more. Google App Engine supports the SPDY protocol, which was designed to minimize latency and overcome the bottleneck that can occur when the number of concurrent connections from a client is limited.

A CDN can also offer flexibility in controlling access to your data. Google Cloud Storage supports Cross-origin resource sharing (CORS), and Access Control Lists, which can be scripted. These tools can help you tailor the granularity of your content, pairing specific assets with specific kinds of users for example. Google App Engine is scriptable. Scripting can help you increase the security of your online resources, for example by writing code that detects suspicious behavior such as an unusual barrage of requests for an asset coming from multiple clients.
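As one possible shape for the kind of script described above, the sketch below flags an asset when it receives more than a threshold number of requests from more than one client inside a sliding time window. The class name, thresholds, and the idea of passing timestamps in explicitly are all assumptions made for illustration; this is not an App Engine or Cloud Storage API.

```python
from collections import defaultdict, deque


class BarrageDetector:
    """Hypothetical sliding-window counter for requests per asset."""

    def __init__(self, max_requests: int = 100, window_seconds: float = 10.0):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)   # asset -> deque of (timestamp, client_id)

    def record(self, asset: str, client_id: str, now: float) -> bool:
        """Record one request; return True if the asset now looks under a barrage."""
        hits = self._hits[asset]
        hits.append((now, client_id))
        # Drop requests that have fallen out of the window.
        while hits and now - hits[0][0] > self.window_seconds:
            hits.popleft()
        distinct_clients = {client for _, client in hits}
        return len(hits) > self.max_requests and len(distinct_clients) > 1


detector = BarrageDetector(max_requests=3, window_seconds=10.0)
flags = [detector.record("level1.pak", f"client-{i}", now=float(i)) for i in range(4)]
print(flags)   # only the fourth request exceeds the threshold
```

In a live service the timestamp would typically come from a monotonic clock, and a flagged asset might trigger throttling or logging rather than an outright block; those policy decisions are outside the scope of this sketch.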

Using a CDN allows you to scale and deliver data to your users around the world more efficiently and safely.

A note on mobile content delivery

Mobile networks have additional problems involving latency and download speed. Ilya Grigorik does a great job of explaining this:

“The mobile web is a whole different game, and not one for the better. If you are lucky, your radio is on, and depending on your network, quality of signal, and time of day, then just traversing your way to the internet backbone can take anywhere from 50 to 200ms+. From there, add backbone time and multiply by two: we are looking at 100-1000ms RTT range on mobile.

Here’s some fine print from the Virgin Mobile (owned by Sprint) networking FAQ: Users of the Sprint 4G network can expect to experience average speeds of 3Mbps to 6Mbps download and up to 1.5Mbps upload with an average latency of 150ms. On the Sprint 3G network, users can expect to experience average speeds of 600Kbps – 1.4Mbps download and 350Kbps – 500Kbps upload with an average latency of 400ms.

To add insult to injury, if your phone has been idle and the radio is off, then you have to add another 1000-2000ms to negotiate the radio link.”

So for you mobile developers, be aware of these issues when trying to get data to the user fast. Be sure your streaming and compression systems are designed to compensate for these extra burdens.

The cash cost of content delivery

You must spend some money to use a CDN. (Sadly, the free lunch comes next Tuesday.) For example, Google Cloud Storage charges around $0.12 per gig for the first 1 terabyte of data transferred each month.

To put that in perspective, let’s say your game sees 3.4 million unique users monthly. Assuming your in-game content is 1GB in size, and Google cloud storage charges about $0.085 per gig to transfer 0.66 petabytes a month (the pricing tier at that usage level), then your cost would be about $9,633 per day.

To break even, you’d need to earn a little under $0.003 per user per day to distribute that much content. Hopefully, if you’ve got 3.4 million monthly users you should easily be able to do that.
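The arithmetic behind these figures can be reproduced in a few lines. The sketch below assumes a single flat rate of $0.085 per GB across the whole month and a 30-day month; real pricing is tiered, so treat the result as a rough estimate rather than a quote.

```python
def delivery_cost(monthly_users: int, gb_per_user: float, price_per_gb: float,
                  days_per_month: int = 30):
    """Return (monthly cost, daily cost, cost per user per day) in dollars."""
    monthly = monthly_users * gb_per_user * price_per_gb
    daily = monthly / days_per_month
    per_user_per_day = daily / monthly_users
    return monthly, daily, per_user_per_day


monthly, daily, per_user = delivery_cost(3_400_000, 1, 0.085)
print(f"monthly: ${monthly:,.0f}")
print(f"daily:   ${daily:,.0f}")
print(f"per user per day: ${per_user:.4f}")
```

The daily figure matches the $9,633 quoted above, and the per-user figure comes out a little under $0.003, which is the fraction-of-a-cent break-even target the article describes.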

Admittedly these are worst-case numbers; the chance that you’re serving 1GB of content to 3.4 million unique users daily is a bit far-fetched. Within a few months everyone on the planet would have your content, so this scenario doesn’t represent a long-term estimate.

Knowing is half the battle

Once you’re aware of the time and financial costs involved with distributing assets, you can plan a path to do something about it.

Only send to users what they need (right now)

Up until now, we’ve assumed that all 1GB of content is required for each user to start the game, but that’s a great, horrible lie. In reality, the user may only need a subset of data to begin play. With some analysis you will find that this initial data can be delivered quickly, so the user can start to experience the content immediately, while the rest of the data can be streamed in the background, behind the scenes.

For instance, if a user downloads the first 20MB from a website or digital software store, can they start playing right away and stream in the rest later? How long until they need the next 20MB? What about the next 400MB? Would a CDN be able to deliver the follow-on content in a faster or more flexible manner? Optimizing for this sort of usage can decrease the perceived load time and the overall transfer cost, enhancing the accessibility and affordability of your product.
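One way to reason about these questions is to estimate when each chunk of content becomes available if the chunks are streamed in order. The sketch below uses the 20MB, 20MB, and 400MB sizes from the questions above; the chunk names and the 5 Mbps link speed are assumptions chosen only for illustration.

```python
def seconds_to_download(size_mb: float, mbps: float) -> float:
    """Transfer time for size_mb megabytes at mbps megabits per second."""
    return size_mb * 8 / mbps


def schedule(chunks, mbps: float):
    """Return (name, seconds until ready) for chunks streamed one after another."""
    ready_at = 0.0
    timeline = []
    for name, size_mb in chunks:
        ready_at += seconds_to_download(size_mb, mbps)
        timeline.append((name, ready_at))
    return timeline


# Hypothetical split of the game content.
CHUNKS = [("bootstrap", 20), ("next area", 20), ("remaining content", 400)]

for name, ready in schedule(CHUNKS, mbps=5):
    print(f"{name:>18}: ready after {ready:.0f} s")
print(f"{'monolithic 1 GB':>18}: ready after {seconds_to_download(1000, 5):.0f} s")
```

Under these assumptions the player could start after about half a minute instead of waiting close to half an hour for the full download, provided the game can actually run with only the first chunk present.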

Own your content updates

In the current world of game development, it’s common to run on many different platforms. When an update is available, it takes time to be sure that the new content has been received by all your users.

For instance, if you’ve pushed a new build of your game server, some of your players can be out of sync for an extended period, which can generate lots of “OMG th1s g4m3 duznt werk!” bugs for your QA testers. Controlling how and when your app performs updates can be highly beneficial, though in some cases the updating logic is managed by the operating system and is out of your hands. Whenever possible, your app should be aware of new updates and capable of fetching them.

Most applications will contain some number of platform-specific assets. For instance, the types of hardware-supported texture compression formats can vary by platform, you may need a separate tier of lower-resolution models to run on mobile, or some of your content can vary by region. When any of these assets change, the associated update need not be universal. If you can segment at least a part of your content by platform and location you can better control when you need to update, who needs to update, and what you need to send.
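Segmenting content by platform and region can be sketched as a manifest lookup: each asset records which platforms and regions it applies to, and a client fetches only the applicable assets whose versions are newer than what it already has. The `Asset` type, asset names, and version numbers below are hypothetical and exist only to illustrate the idea.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Optional


@dataclass(frozen=True)
class Asset:
    name: str
    version: int
    platforms: Optional[FrozenSet[str]] = None   # None means "every platform"
    regions: Optional[FrozenSet[str]] = None     # None means "every region"

    def applies_to(self, platform: str, region: str) -> bool:
        platform_ok = self.platforms is None or platform in self.platforms
        region_ok = self.regions is None or region in self.regions
        return platform_ok and region_ok


def assets_to_fetch(manifest: List[Asset], installed: Dict[str, int],
                    platform: str, region: str) -> List[str]:
    """Names of applicable assets that are missing or older than the manifest version."""
    return [asset.name for asset in manifest
            if asset.applies_to(platform, region)
            and installed.get(asset.name, -1) < asset.version]


MANIFEST = [
    Asset("core_logic", 3),
    Asset("textures_pvrtc", 2, platforms=frozenset({"ios"})),
    Asset("textures_etc1", 2, platforms=frozenset({"android"})),
    Asset("voice_fr", 1, regions=frozenset({"eu"})),
]

installed = {"core_logic": 3, "textures_etc1": 1}
print(assets_to_fetch(MANIFEST, installed, "android", "eu"))
```

Here an Android client in the EU region would fetch only the stale texture pack and the regional voice pack, while the iOS-only textures and the already current core logic are skipped.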

Moving forward

To be fair, the distribution strategies we discussed here are not for everyone. If your entire app is under 10MB, there’s little need to segment assets or distribute content outside of the primary distribution point.

But if your app is hefty, it pays to understand the costs involved with distributing your game’s digital assets, and how you can reduce those costs – and the users’ costs as well. It’s also wise to consider how a distribution strategy can decrease load times and reduce headaches caused by updates. By taking control of distribution you have the ability to save money and increase the quality of the end-user experience.

Which is the point, right? (source: GAMASUTRA)
