如何成功进行游戏测试的6大诀窍

作者:Steve Collins

“一个精确的测量值顶得上1000位专家的意见。”——Grace Murray Hopper

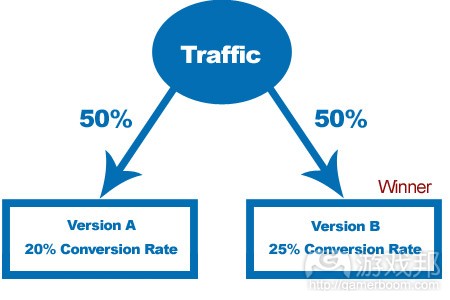

这句话应该是对于A/B测试最精辟的概述。它总结了为何许多游戏工作室都想要利用A/B测试去辅助设计和开发过程——也就是不再止步于猜测什么内容会起作用,而是真正搞清楚结果。

任何经历过设计过程的人应该都知道存在更好的方法去做出这些决策。这一方法便是使用A/B测试中玩家所提供的真实数据。

但这只是测试理念的一部分。我们还必须清楚该从何开始。所以本文便通过提供6个成功测试诀窍去帮助开发者们更好地完善自己的业务。我们必须专注于一些真正重要的元素,即用户留存,转换率以及收益。

从简单的开始

也许这听起来很理所当然,但却是值得我们注意的–如果你想把测试文化带进自己的团队中,你就需要寻找能够快速制胜且得到证实的方法去获得结果。这便意味着采取一种“倾向性”方法,快速验证理念,而不是在测试前便创建一个巨大且复杂的结构。

幸运的是做到这点并不困难。不管你采取的是何种方法,你都能够较轻松地完成简单测试。而这里所存在的最大弊端便是你有可能会害怕“改变游戏”,但是不管怎样游戏都会基于某种程度而发生改变。

我们可以通过测试一些争议性较低的玩家体验中的某些元素(但是具有很大的影响)去缓解害怕。即包括:

延迟首个呈现于玩家眼前的插播广告的时间。我们可以基于点击率,用户留存和其它KPI而快速明确这种改变的影响。

教程中可替代的引导角色。我见识过教程中不同角色的使用具有不同的效果。不要在设计会议上挣扎了,只要测试不就完了!

是否要呈现注册页面(如果适当的话)。为了加深与玩家间的关系而让他们注册游戏是否是种明智的做法?或者这么做会导致玩家的流失?请找到答案。

当我们开始尝试一些简单的测试时,我们可能需要投入额外的时间去获取一些真正有意义的统计结果(游戏邦注:如果你使用的是A/B测试平台的话这便不是问题了),但这却是有价值的。所以你不要妄想着抄捷径。

删除拖延问题

我们想要鼓励开发者频繁且快速地进行测试。因为如果单个测试就花费了大量时间,并需要进行代码修改,那将会影响新应用的创建,并导致我们面临较高的失败测试成本,从而大大影响了测试的最终利益。长时间的测试最终只会将我们带进一个充满风险,高成本且浪费时间的情境中。

幸运的是我们能够轻松地解决这种拖延问题。关键是我们必须禁止工程师参与该任务。因为如果每个测试都需要工程师重新编写代码,进行QA等等任务,我们便什么都做不了了。除此之外,工程师们还有更重要的事要做。

通过将测试框架分离出工程循环过程,我们便可以创造出短期的测试循环,并由产品经理或市场营销团队去运行。为了做到这点,我们需要创造出“受数据驱动”的游戏,真正理解并同意将数据点面向测试而开放。当我们到达这个点时,进行测试就像改变数据表中的一个数值那么简单了。

更好的是,当我们创造了一个合理的变量后,基于环境去改变它就变得如创建最初的测试那般简单了。我们可以看到测试结果快速产生影响,并转向下一个挑战。

这一方法也能够让我们暂时封锁那些不包含与测试中的游戏元素,从而减少项目风险。

“删除开关”

有时候也会出现问题。即当我们遭遇“失败”,并创造了一种敏捷且且自动适应的受数据驱动文化时,便会出现这些问题。所以请确保减少失败的影响并从负面结果中吸取经验教训。

你的系统必须帮助团队摆脱“害怕失败”的想法,而最简单的方法便是“删除开关”。你希望能够在测试运行时完全控制它,并在某些时刻禁止测试,而无需等待工程师的输入或之后应用的发行。

你的测试应该覆盖整个核心游戏体验。后者是默认设置,但却不意味着我们不能对此进行完善,而我们必须确保“删除开关”能在任何时候都将玩家带回这种默认状态中。

好消息便是,基于正确的A/B测试QA程序,你便会发现不大需要使用“删除开关”了。不过掌握这一理念能够帮助你更好地进行实验,并且这也是你取得测试成功所需要具备的态度。

孤立变量

这听起来也很平常。但是我们在设计测试时却很容易忘记这一基本原则,即只收到一些积极结果但却不清楚为什么我们能在某些情况下取得成功。

值得强调的是:在设计测试时我们必须确保只面向一个对象进行测试。

关于我们很容易忘记这一原则的一个例子便是,面向特定玩家群体提供特别的内容–即致力于测试改变特定商品的价格所产生的效果。我们可能想在相关玩家群组间插播广告而“支持”测试。但当真正执行时会发现,我们是在同时测试两种内容—-即价格改变以及游戏内部信息的使用。但是我们甚至从未讨论该信息的设计和内容。

而基于这一例子的正确方法便是向所有群组呈现插播广告,提供两种价格然后明确哪种更适合游戏内部购买。如果游戏内部购买有所提高,我们便可以确定价格改变的有效性,尽管这仍是关于是否要插播广告的测试。

同样地,在测试内容改变,如商品的描述时,我们需要专注于一些更明确且具有反复性的改变。从中我们可以了解到一些描述比其它描述更有效。这是一种非常有帮助的方法。

检查纵向影响

在测试时,你需要预先明确成功的标准。这一步骤非常重要。而同时我们也需要定义转换事件,如教程的完成或特殊购买,这与测试本身紧密相连,所以我们需要花些时间去检查测试的纵向影响。

我的意思是我们可以从更广泛的意义上去了解变量和控制群组在一段时间内的表现。经过片刻的沉思你便会清楚为何“综合检查”如此必要了。我们完全能够设计一种测试,即利用带有侵略性的游戏内部信息去推动玩家做出特定的购买行为。如果这种提供带有欺骗性,我们便不难猜到用户留存和长期收益会出现下降—-即使核心测试结果是积极的。

记住这点,我们需要始终着眼于各种变量和控制群组的“整体业务”体验。

你必须始终清楚自己在寻找什么,并事先记下任何会带来不利影响的KPI。如果我们着眼于多个测试中的多种参数,显然在不久之后我们便能够获得真正有意义的结果。改变是我们所期待的变量,但在此基础上却是无意义的。将我们自己设定在希望改变的特定KPI中将减少“正误识”的风险。

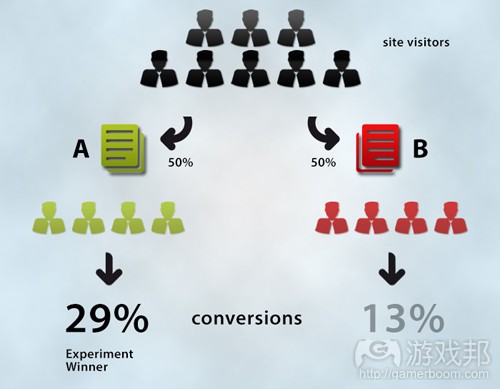

区别对待新用户与现有用户

选择用户群体进行测试有时也需要一定的计划。设定测试框架而将测试瞄准某一小群体是非常便捷的方法。你可能想要将测试瞄准某一特定区域的用户,或者将某些用户排除出测试中(游戏邦注:例如你最忠实的用户)。

你总是希望能够单独测试新用户。也就是你想通过测试去搞清楚用户在最初玩游戏时的看法。

让我们假设你正在测试游戏插播广告(即与你的网络中的其它应用进行交叉推广)的布局。作为测试的一部分,我们正在这些插播广告中改变按键布局,以此去推动更高的点击率。

关于这一方法的问题便在于现有用户将使用现有的UI,并且因为他们已经遭遇过插播广告了,所以他们的这种“习得性行为”将影响最终的测试结果。就像现有用户将默认地点击错误的位置,或者会因为UI中的改变而受挫。如此你便只能得到一些虚假的结果,即现有用户带着情绪进行点击。

相反地,你应该选择那些从未看过UI的新用户进行测试,如此你才能获得有关UI性能的准确评价。这便是心理学中的“首因效应”。所以你应该创建只将测试瞄准新用户的测试框架。

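(This article was compiled by GamerBoom/gamerboom.com. Reproduction without retaining the copyright notice is prohibited; for reprint permission, contact GamerBoom.)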

How To Get Started In A/B Testing: 6 Tips For Success

By Steve Collins

“One accurate measurement is worth 1,000 expert opinions”

- Admiral Grace Murray Hopper

Those words are probably the single most succinct argument for A/B testing we have. They sum up why any game studio might want to explore A/B testing as an aid to design and development – namely a desire to move beyond guessing what might work, and towards knowing what will.

Anyone who has experienced design by committee, or simply design by HiPPO (the highest paid person’s opinion), should by now be aware that there’s a better way to make those decisions. That method is to use the real data supplied by real players through an A/B-testing, data-driven decision-making process.

But it’s one thing to be committed to the concept of testing, and quite another to know where to start. On that basis, this article provides 6 tips for successful testing that can help deliver real change to your business by moving the needle on the metrics we all care about: retention, conversion, and, of course, revenue. So without further ado…

Start Simple

It sounds obvious, but it’s worth stating – if you want to embed a testing culture in your organization, you’re looking for quick wins and a demonstrated ability to get results. That means adopting a ‘lean’ approach, getting quick validation of the concept, rather than building a large, complex structure before a single test has taken place.

The good news is that’s easy to do. Whatever approach you take technically, there is no serious difficulty in rolling out simple tests. The single largest objection is likely to be fear of ‘changing the game’ – but your game changes (or should change) on a regular basis anyway. Often on the basis of little more than a hunch.

We can also allay that fear by testing relatively uncontroversial aspects of the player experience that can nevertheless have big effects. These could include:

• The delay time before the first interstitial ad is shown to players. We can quickly establish what effect this change has on click-through rate, retention, and other KPIs.

• Alternative guide characters for tutorials. I’ve seen real differences in tutorial completion rates based on which character is used. Don’t agonize in design meetings: test it!

• Whether or not to show a registration screen (if appropriate). Is it smart to register players in order to deepen the relationship? Or does trying to do so lead to churn? Find out.

As we’re starting simple, getting true statistical significance in terms of your results may take a little extra work (although that won’t be the case if you’re using an A/B testing platform) – but it’s worth taking the time to do this. One group outperforming another means nothing in and of itself. Don’t take shortcuts here.
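To make “one group outperforming another means nothing in and of itself” concrete, here is a minimal Python sketch of one common significance check: a two-proportion z-test on conversion counts. The choice of test is ours, not the article’s. It assumes each player converts at most once, that the groups are large enough for the normal approximation, and that at least one player converts and at least one does not. A dedicated A/B testing platform would normally run this kind of check for you.

```python
import math

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare the conversion rates of group A (control) and group B (variant).

    Returns (z, p_value), where p_value is two-sided.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis that A and B are the same.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("need at least one conversion and one non-conversion")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# 10% vs 12% on 100 players each: a "winner", but p is far above 0.05.
print(two_proportion_z_test(10, 100, 12, 100))
# 10% vs 12.5% on 2,000 players each: p falls below 0.05.
print(two_proportion_z_test(200, 2000, 250, 2000))
```

The same two-point gap can be noise or signal depending on sample size. This is why the significance check should come before declaring a winner.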

Remove Latency

We want to encourage frequent and fast testing. In fact, it’s vital that we do, because if an individual test takes too long to set up, requires code changes, and can only be pushed ‘live’ with a new app build, then the sunk cost of a test failure is too high to offset the benefits of our test wins. We end up in a situation in which it’s too risky, expensive or time-consuming to really embrace testing.

Thankfully, it’s easy to tackle the latency issue. The key to doing so is to get engineering out of the process. If every test requires re-coding, QA and sign-off from engineering, we aren’t going to get much done. And besides – they have better things to do.

By de-coupling the testing framework from engineering release cycles, we can create short test cycles, often run by product management or even marketing teams. In order to do this, we need to build our game to be as ‘data-driven’ as possible, with a clear understanding and agreement as to what data points are ‘open’ to testing. When we’ve reached that point, setting up a test can become as simple as changing a value in a spreadsheet or testing platform (which is why marketing can do it!)
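As a rough illustration of what “data-driven” can mean here, the Python sketch below keeps tunable values in plain data and resolves them per player at runtime. Everything in it is hypothetical:

- the parameter names;
- the test-definition format;
- the choice to hash the test name and user ID with SHA-256, so that each player lands in the same variant every session.

In practice the data would live in a spreadsheet, a remote-config service, or a testing platform rather than in source code.

```python
import hashlib

# Hypothetical defaults for the game's tunable, data-driven parameters.
DEFAULTS = {
    "first_interstitial_delay_s": 60,
    "tutorial_guide": "knight",
    "show_registration": True,
}

# Hypothetical test definition: each variant overrides some defaults.
TEST = {
    "name": "interstitial_delay_v1",
    "variants": {
        "control": {},
        "longer_delay": {"first_interstitial_delay_s": 180},
    },
}

def assign_variant(user_id: str, test: dict) -> str:
    """Deterministically bucket a user so they always see the same variant."""
    digest = hashlib.sha256(f"{test['name']}:{user_id}".encode()).hexdigest()
    names = sorted(test["variants"])
    return names[int(digest, 16) % len(names)]

def get_param(user_id: str, key: str, test: dict = TEST):
    """Return the user's variant override for key, falling back to the default."""
    variant = assign_variant(user_id, test)
    return test["variants"][variant].get(key, DEFAULTS[key])

print(get_param("player-42", "first_interstitial_delay_s"))
```

In this setup, launching a test means editing `TEST`. Rolling out a winning variant means copying its value into `DEFAULTS`. Neither step touches game logic.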

Even better, when we’ve established a winning variant, pushing that change out to the live environment is just as simple as setting up the initial test was. We can see test results making an impact quickly – and move on to the next challenge.

This approach also enables us to ‘lock down’ (for the time being) parts of the game which are not open to testing – thus reducing the risks associated with the program.
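One simple way to “lock down” the parts of the game that are not open to testing is to keep an explicit allowlist of parameters the team has agreed can be tested. Any test definition that reaches outside that list is refused. The Python sketch below assumes tests are defined as per-variant parameter overrides; the parameter names are hypothetical.

```python
# Hypothetical allowlist of parameters the team has agreed are open to testing.
TESTABLE_KEYS = {"first_interstitial_delay_s", "tutorial_guide", "show_registration"}

def validate_test(test: dict) -> None:
    """Reject any test whose variants override parameters outside the allowlist."""
    for variant, overrides in test["variants"].items():
        locked = set(overrides) - TESTABLE_KEYS
        if locked:
            raise ValueError(
                f"variant {variant!r} overrides locked parameters: {sorted(locked)}"
            )

# Passes: only an allowlisted parameter is changed.
validate_test({"name": "guide_v1",
               "variants": {"control": {}, "wizard": {"tutorial_guide": "wizard"}}})
```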

Implement A ‘Kill Switch’

Sometimes things go wrong. In fact, when we’re encouraging ‘failure’ and creating an agile and adaptive data-driven culture, they often do. So make sure to minimize the impact of failure whilst maximizing what you learn from negative results.

Your system must release your team from the ‘fear of failure’ – and the simplest way to do that is the kill switch. You want to have complete control of the test whilst it is live, and you want to be able to disable that test at any moment in time – without waiting for engineering input or a subsequent app release.

Your tests should be seen as overlays on the core game experience. The latter is the default setting, and it is one that ‘works’, which is not to say it couldn’t use improvement (why are we testing, after all?). But it is essential that our kill switch can, at any time, return all players to this default state.
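Here is a minimal Python sketch of the overlay-plus-kill-switch idea. It assumes each live test is stored as data (a set of overrides and an enabled flag), and that the game resolves its configuration from that data at runtime. The class, method, and parameter names are hypothetical. Disabling a test only flips a stored flag, so everyone enrolled in it falls straight back to the defaults, with no engineering change or app release.

```python
DEFAULTS = {"first_interstitial_delay_s": 60, "tutorial_guide": "knight"}

class LiveTests:
    """Hypothetical registry of live tests, each an overlay on DEFAULTS."""

    def __init__(self):
        # test name -> {"overrides": dict of parameter values, "enabled": bool}
        self.tests = {}

    def launch(self, name, overrides):
        self.tests[name] = {"overrides": dict(overrides), "enabled": True}

    def kill(self, name):
        """Kill switch: disable a single test immediately."""
        self.tests[name]["enabled"] = False

    def kill_all(self):
        """Emergency stop: return every player to the default experience."""
        for test in self.tests.values():
            test["enabled"] = False

    def config_for(self, enrolled_tests):
        """Defaults, overlaid with overrides from the enabled tests a player is in."""
        config = dict(DEFAULTS)
        for name in enrolled_tests:
            test = self.tests.get(name)
            if test is not None and test["enabled"]:
                config.update(test["overrides"])
        return config

live = LiveTests()
live.launch("guide_test", {"tutorial_guide": "wizard"})
print(live.config_for(["guide_test"]))  # wizard guide while the test is live
live.kill("guide_test")
print(live.config_for(["guide_test"]))  # identical to DEFAULTS again
```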

The good news is that with the correct A/B test QA procedures (which are relatively straightforward to set up), you will very rarely find yourself needing to use your kill switch. But knowing that it is there will free you to experiment with greater purpose and freedom, which is the attitude you will need to make testing a success.

Isolate Your Variables

OK, this sounds obvious. Or rather, it should be obvious. But when tests are being designed it can be very easy to forget this essential principle, leaving you with a positive result but little information as to why you’ve seen success in a particular case.

So it bears repeating: always design your tests to test one thing and one thing only.

As an example of how easily we can forget this principle, consider the creation of a specific offer for a particular player segment – designed to test the effect of changing the price of a specific item in virtual currency. We might decide to ‘support’ that test by delivering an interstitial to the relevant player group. But as soon as we do that, we are now testing two things simultaneously – the price change itself and the use of the in-game message. And we haven’t even discussed the design and content of that message.

The correct way to proceed in this case would be to show interstitials to all groups (variant and control), pitching both prices as an offer and then establishing which one drove greater in-game purchases of the item. If we see an uplift, we can be reasonably confident that it was the price change alone that drove that result, although it would still remain to be tested whether this result would hold with no interstitial at all.
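The price-test example can be turned into a simple pre-launch check. This Python sketch assumes each group’s experience is described as a flat dictionary of parameters (the names are hypothetical). It rejects any design in which control and variant differ in more than one parameter. It only catches differences that are actually modeled as parameters, so it complements careful test design rather than replacing it.

```python
def differing_keys(control: dict, variant: dict) -> set:
    """Return the parameters whose values differ between two group configs."""
    keys = set(control) | set(variant)
    return {k for k in keys if control.get(k) != variant.get(k)}

def check_single_variable(control: dict, variant: dict) -> str:
    """Return the one parameter under test, or raise if the design changes more."""
    diff = differing_keys(control, variant)
    if len(diff) != 1:
        raise ValueError(f"test changes {len(diff)} things at once: {sorted(diff)}")
    return diff.pop()

# Flawed design: only the variant group sees the offer interstitial.
control_bad = {"sword_price_gems": 100, "show_offer_interstitial": False}
variant_bad = {"sword_price_gems": 80, "show_offer_interstitial": True}

# Corrected design: both groups see the interstitial; only the price differs.
control_ok = {"sword_price_gems": 100, "show_offer_interstitial": True}
variant_ok = {"sword_price_gems": 80, "show_offer_interstitial": True}

print(check_single_variable(control_ok, variant_ok))  # sword_price_gems
```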

Similarly, when testing content changes, such as how items are described, try to focus on clear, repeatable changes. It’s one thing to know that a certain description works more effectively than an alternative. It’s a more powerful result when we learn in a concrete way which type of descriptions work most effectively.

Check Longitudinal Impacts

When testing, you’ll normally define the criteria for success in advance. In fact this step (and recording it) is important. But whilst we should define a conversion event, such as completion of the tutorial or a specific purchase, that is closely linked to the test itself, we should also take the time to examine the longitudinal impacts of the test.

By that, I mean we look in a broader sense at how our variant and control groups perform against certain KPIs over an extended period of time. A moment’s reflection tells us why this ‘sanity check’ is necessary. It would be perfectly possible to design a test in which an aggressive in-game message drives players to a specific purchase. If that offer was deceptive or dishonest, it isn’t hard to imagine a decline in retention, and hence in long-term revenue, even whilst the core test result is positive.

With that in mind, it’s always smart to look at the ‘whole business’ experience of your variant and control groups.
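Looking at the “whole business” might mean reporting a retention figure and revenue per user for each group, alongside the primary conversion event, over the observation window. The Python sketch below does this. The `Player` record, the choice of day-7 retention, and the field names are all assumptions made for illustration.

```python
from dataclasses import dataclass

@dataclass
class Player:
    group: str         # "control" or "variant"
    converted: bool    # hit the test's primary conversion event
    days_active: set   # days since install on which the player played
    revenue: float     # total revenue over the observation window

def summarize(players: list, group: str, retention_day: int = 7) -> dict:
    """Primary metric plus longer-term KPIs for one test group."""
    members = [p for p in players if p.group == group]
    n = len(members)
    if n == 0:
        raise ValueError(f"no players in group {group!r}")
    return {
        "conversion": sum(p.converted for p in members) / n,
        f"d{retention_day}_retention": sum(retention_day in p.days_active for p in members) / n,
        "revenue_per_user": sum(p.revenue for p in members) / n,
    }
```

Put `summarize(players, "control")` and `summarize(players, "variant")` side by side. This shows when a variant wins on conversion but loses on retention or revenue per user.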

A word of caution: always understand what you are looking for, and note in advance any KPIs you fear may be adversely affected. If we look at multiple metrics across multiple tests, it stands to reason that sooner or later we will see what appear to be significant results. Chances are, however, that these lie within normal, expected variation and on that basis are not meaningful. Limiting ourselves to a specific set of KPIs that we expect might change minimizes that risk of a ‘false positive’.
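The arithmetic behind that warning is easy to check. Suppose m metrics are independent and none of them is truly affected. The chance that at least one crosses a significance threshold alpha by luck is then 1 - (1 - alpha)^m. The Python sketch below computes this. It also computes the Bonferroni-corrected per-metric threshold alpha / m, one common remedy that the article itself does not mention.

```python
def chance_of_false_positive(alpha: float, num_metrics: int) -> float:
    """P(at least one 'significant' result) when no metric truly changed.

    Assumes the metrics are independent of one another.
    """
    return 1 - (1 - alpha) ** num_metrics

def bonferroni_threshold(alpha: float, num_metrics: int) -> float:
    """Per-metric p-value cutoff keeping the overall false-positive rate <= alpha."""
    return alpha / num_metrics

for m in (1, 5, 20):
    print(m, round(chance_of_false_positive(0.05, m), 3), bonferroni_threshold(0.05, m))
```

With alpha = 0.05, watching 20 unaffected metrics gives roughly a 64% chance of at least one spurious “win”. Fixing the KPI list in advance keeps m small.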

Treat New and Existing Users Differently

Selecting the population of users to test will sometimes require a little planning. Having a testing framework that can limit the tests to sub-groups of your users is often very handy indeed. You might want to limit the test to users from a particular region, or to exclude certain users from ever being tested (e.g. your most loyal customers).

One segment of your users that you’ll often want to test separately is your new users. That is, you’ll want to apply tests only to users who are starting to play the game for the first time.
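The Python sketch below shows the kind of targeting rules described above. It can:

- restrict a test to certain regions;
- keep a protected group of users out of it (here a hypothetical `is_vip` flag stands in for “your most loyal customers”);
- enroll only users whose first session came after the test went live.

The field names and this definition of “new user” are assumptions.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass
class User:
    user_id: str
    region: str
    first_seen: datetime   # timestamp of the user's first session
    is_vip: bool = False   # hypothetical "most loyal customers" flag

@dataclass
class Targeting:
    test_start: datetime
    regions: frozenset = frozenset()   # empty means every region is eligible
    new_users_only: bool = True
    exclude_vips: bool = True

    def eligible(self, user: User) -> bool:
        if self.regions and user.region not in self.regions:
            return False
        if self.exclude_vips and user.is_vip:
            return False
        # "New" here means the first session came after the test went live,
        # so the user has never seen the pre-test experience.
        if self.new_users_only and user.first_seen < self.test_start:
            return False
        return True
```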

Let’s imagine you are testing the layout of your in-game interstitials, which are cross promoting other applications in your network. As part of the test, we are changing the button layouts within those interstitials in order to establish which drives a greater click-through rate.

The problem with this approach is that existing users will be used to the existing UI and will presumably have encountered the interstitials before, so their “learned behavior” will contaminate the test results. Existing users may, for example, click the wrong place by default, or may be frustrated by the change in UI. As a result, you might see spurious results as existing users mis-click or click in anger.

Instead, you should limit these types of tests to users who are new to the game and have not yet “learned” the UI, so that you get a valid assessment of how effective each UI variation is on fresh users. This is known as the “primacy effect” in the psychology literature, and relates to our natural predisposition to remember most effectively the first way we experienced something. Your testing framework should allow you to restrict the test to new users only. (source: gamesbrief)
