A long read: how behavioral conditioning is used in game design, and what it does to players

Part 1: Rethinking game design through behavioral conditioning

游戏邦注:本文作者是游戏设计师Chris Birke,他从条件反射行为入手,阐明了“乐趣”在神经学上的含义以及游戏如何利用条件反射产生的强制行为,呼吁广大开发者本着道德良心来设计游戏,为玩家的根本利益着想。

大约10多年以前,我读到John Hopson撰写的《Behavioral Game Design》一文。现在回想起来,那篇文章对我的之后的游戏设计观影响相当大。正是从那以后,我总是能发现把心理学和神经学原理整合进设计理念中的新方法,所以我一直追随这些原理。

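Technology moves fast, and on the internet 10 years is a very long time. Wikipedia launched 10 years ago; Facebook, World of Warcraft, and Gmail have all been around since 2004; the iPhone arrived in 2007; and FarmVille, whose farm-management formula is now everywhere, recently turned two. Science is moving just as quickly. Neuroscience, with high-resolution real-time brain imaging (fMRI), is now underwriting behavioral theory. On top of that, we design with the aid of analytics, the real-time data mining of player behavior. If necessary, we can roll out a design tweak once a day to maximize profit.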

道德的含义是什么?《Behavioral Game Design》好像对这个古怪的命题也全然不知。现在,这种设计工具箱近乎一种心灵控制,只有提炼技术才有发展前途。在盲目地追逐“乐趣”的日子里,我们给玩家带来了什么?又遗忘了什么?

个人认为,既然我挣够了吃饭钱(可能再加一点点吧),我就有义务本着社会责任心来设计对玩家有好处的游戏,而不是拼命从玩家身上“榨钱”。我希望这些新技术的运用能呈现出积极的一面,同时鼓励与我志同道合的人。

首先,我将从神经科学的角度来阐述条件反射的运作原理,然后再深入解释游戏如何利用这个原理来最大化玩家的强制行为。之后,我还将进一步解释如何本着道德利用这些技术。我希望我的主张能在游戏行业里揭起一股讨论。首先,我们简要地了解一下条件反射行为。

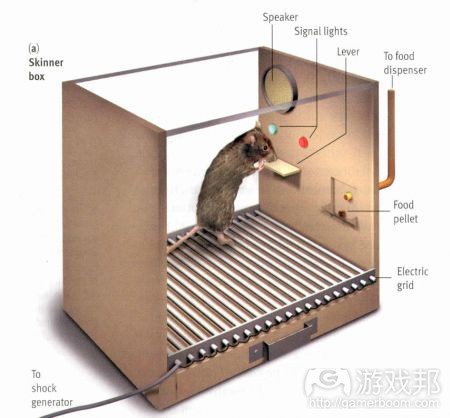

试验室(from gamasutra)

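Most behaviorists avoid the term “Skinner box.” Skinner himself did not want to be remembered as a piece of equipment and preferred the name “operant conditioning chamber.” (游戏邦 note: the American psychologist B. F. Skinner introduced the concept of operant conditioning in the 1930s.) The chamber is a cage that isolates the subject, usually a pigeon or a rat, with only a button to operate and a stimulus to learn, such as a light. Pressing the button releases a reward (food), but only if the subject presses it correctly in response to the stimulus.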

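With this cage Skinner explored the nature of learning and, later, how to maximize or disrupt his subjects’ compulsive behavior. In short, his results showed that the schedule of rewards delivered in response to a stimulus strongly affects how animals, humans included, respond to training. The most compulsive behavior is not driven by “fixed ratio” rewards (游戏邦 note: a consistent reward for every correct response to the stimulus) but by a semi-random “variable ratio” schedule: you might win or you might not, but as long as you keep trying there is always a chance.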

如果过去几年你已经在设计游戏了,那么你应该对此不陌生。综合利用这些研究结论已被证明确实有效。比如,当你听到熟悉的“叮”声,这是《黑暗破坏神》里的怪物掉落戒指时发出的声音,你能抗拒那种感觉吗?这种混合了珍贵的奖励和艰辛战斗的声音,并不是时时有,可以说是半随机出现。

你同样不能反驳前置内容映射到《Rift》(或其他MMO)学习曲线上有益处。在游戏的后期更缓慢地释放奖励内容,不仅能够最大化奖励的价值,而且正好符合最具强制性奖励时序安排的记录结果。只要添加一些强制随机战斗就可以保持游戏的新鲜度。评论称这是好设计,因为它更有趣了,是吧?

因为我就是个这么让人沮丧的还原论者,所以让我告诉你所谓“乐趣”的真相吧。它只是我们大脑中的一种电子和化学反应活动。乐趣就产生于此。你大概可以制作一个曲线图,上面添加销售额、焦点人群或Facebook游戏的DAU作为变量,“乐趣”的轮廓就出来了。即使你认为现在的Facebook游戏一点也不好玩,还是有人在玩(稍后你就知道为什么了)。

什么是“乐趣”?

在我看来,神经科学正在把行为理论当作操纵玩家的最有效手段进行快速拓展。到底是什么使大脑产生相同的东西呢?关于这个问题,目前存在若干不同的理论。在此,我只和读者们分享一下我最赞同的观点。

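If the theory I describe here turns out not to be the real mechanism of fun, I should at least warn you that the real one will be found soon. An early paper on the topic, “Predictive Reward Signal of Dopamine Neurons,” is the kind of thing that makes me giddy. It describes in detail how a particular type of neuron in the brain, one that specializes in releasing the neurotransmitter dopamine, works as the “reward system” that drives learning and motivation. The theory is fairly simple. It is called “incentive salience,” and its key is novelty.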

我们的大脑都是相似的,就好像每个正常人出生时,无名指的指甲盖上的角质细胞类型都是一样的。所以,我们的大脑上有相同的分区,每个人的大脑分区承担的任务(情绪、面部识别等)都是相同的。但每个区的活动都已专门化。

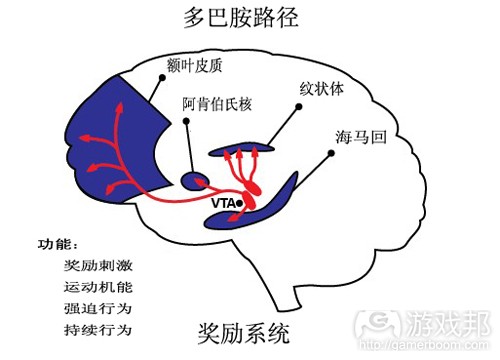

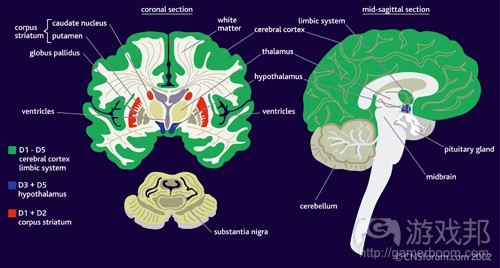

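The VTA, or ventral tegmental area, is an important central structure in the brain, made up of neurons that specialize in releasing the neurotransmitter dopamine. It extends into other brain areas, where it waits for a cue to act. By releasing dopamine, this structure works as a throttle that intensifies activity in those areas. And what controls the throttle? Reward.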

奖励系统(from gamasutra)

无论是斯金纳给研究对象的奖励,还是我们人人都渴望的社会地位、性、战利品等等,本质上都是一样诱人的。这些奖励激发了多巴胺神经元所管理的信号,并且每种信号都对应一定程度的奖励期望。

当意料之外的奖励出现时(或者说是“突出的奖励”),大脑中的多巴胺如泄洪般地增加了。大量释放的多巴胺就像火上浇油,沿着多巴胺能的神经通路,大脑的活动就增强了。

也就是在这个时候,新的记忆正在酝酿,原料就是作为刺激的当前感觉。当大脑体验过新奖励,无论当时大脑的感觉或想法是什么,这种记忆就成了一种模式。

现在,一种新联系建立了,一旦刺激出现,奖励的记忆就会被激活。并且,这个激活动作甚至比奖励本身更早出现。这就是行为学家所说的条件反射。

就以冰激淋为例子吧。现在你面前有一根像奶油一样甜(这作为奖励)的冰激淋,但你并不知道。假设你以前从来没有吃过冰激淋,现在舔一口吧。

冰激淋里的糖应该会触发大脑的自动奖励信号,然后多巴胺系统就会检测到,并且马上让你兴奋起来。(第一次尝试)

吃冰激淋越多,甜蜜的体验就越深刻,所以关于冰激淋的记忆也更加生动鲜明。单纯地看见冰激淋或闻到它的气味(不必尝),就足够让你联想起它的滋味。

The reward of ice cream is now conditioned. This is the experience of “pleasure,” but I would not call it “fun” yet. One step remains.

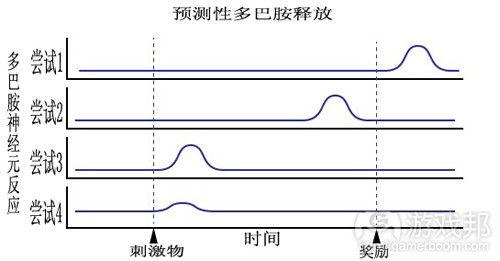

The dopamine release moves earlier over four trials, until it reaches the earliest reliable signal of the reward.

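Because you have been conditioned to ice cream, seeing or smelling it again triggers a dopamine response before you have even tasted it (trial 2). The response is not in the original area: the neurons closest to the experience of tasting have already come to expect the ice cream and are less excited than the new areas. Instead, new areas that were not involved in the original moment of reward are now being activated.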

记着,现在,在你吃到冰激淋以前,条件反射就已经发生了。当你站在等着买冰激淋的长队里,多巴胺的释放仿佛在大脑中放了一把火,对冰激淋的渴望熊熊燃烧起来。脑海中的记忆和刺激又被强化了(第三次尝试)。这是你的大脑正在记录奖励的期望。就算站在购买的队伍里和正在吃冰激淋完全是两回事,但你还是产生了得到奖励的反应,因为你的记忆强迫你这么做。这就是我们所说的“乐趣”。

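“Hold on,” you say, “standing in line does nothing for me!” (You are right.) It does nothing for you or me now because we have done it too many times. But try to imagine the first time you stood in line for ice cream. I am sure you could barely contain your excitement. That is dopamine at work. It makes the memory of ice cream so vivid that you can almost taste it, and all the while it lays down new memories of walking up to the ice cream shop, looking over the flavors, and deciding which to buy (chocolate or cream?).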

所有这些经历和选择都是大脑的新记忆,并且都与冰激淋的奖励建立起坚实的联系。正是这些新记忆让多巴胺不断释放。只要新刺激可以预测到奖励,多巴胺系统就会不断引发记录体验的兴奋感,这整个体验都带着一种对奖励的不可抗拒的感觉和期望。所谓的体验不是指奖励本身,而是学习得到奖励的新记忆和渴望奖励的动机感。

这就是我想到的:当新的预测性刺激形成映射时,多巴胺就会强化奖励的经验。尽管每个人的大脑都是有差别的,有些人几乎免疫,有些人则非常容易受影响,但神经元和多巴胺总是存在的。再进一步推论,这就是游戏设计的工具。确保游戏给玩家带来明显的奖励,同时这种奖励要有足够的复杂度,但也不可过于复杂,否则就不能再刺激玩家。

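This explains why you keep clicking “reconnect” when the World of Warcraft servers go down. It explains why you can rehash old mechanics as long as you sell them to people who have never encountered them, such as children and new players. It also explains why spreading rewards across several brain systems (social, aural, mechanical, visual) works so well. It is a little surprising that Vegas has not worked out how to turn gambling into a social game for people other than the high rollers.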

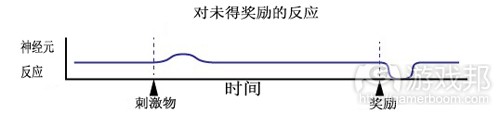

当期望中的奖励落空,人的沮丧之感瞬间就产生了

当所有可以联系到冰激淋的东西都联接完了,多巴胺系统就安定下来了。相关的神经元已经习惯了——感觉到刺激时也不再兴奋。这些神经元只是期望应该得到冰激淋奖励的时候就得到。因为没有学习新的东西,神经元不再引发兴奋。站在买冰激淋的队伍里失效了。

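The ice cream is still delicious, and standing in line still gets you ice cream, but it “just doesn’t feel the same.” Worse, the dopamine neurons are still watching for the stimulus, and if the reward fails to appear when they expect it, the whole response flat-lines. When that happens you lose the motivation to continue and may well feel angry. This is a theory of boredom as well as a theory of fun.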

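The ice cream example also hints at how addiction works, and at how an “addictive” game differs from a drug: the difference lies in the strength and the means of the dopamine release. Almost all addictive drugs affect dopamine. Methamphetamine, for example, can trigger its immediate release at ten times the normal level. Now that you know how the reward system works, you can imagine how powerful that conditioning is. Games trigger dopamine release only through normal sensory channels, and at a healthier, more sustainable rate.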

根据这个理论,头几次玩游戏释放的多巴胺是最多的。如果你有过恨不得冲回家玩一整天的游戏,那么你应该明白严重上瘾是什么感觉。另外适应性和压抑性会抑制多巴胺的进一步释放。

游戏有没有可能像毒品那样,形成足够强大的条件反射,从而立即刺激多巴胺释放?可能吧,从经济利益的角度上讲,我们很有动机确认这个可能性。我们有理由假定,有些人可能被训练至强制玩游戏。

根据反馈数据设计游戏

每个街机都有一个收集钱币的柜子。一到月底,街机老板就把柜子里的币统计一下,一部分付帐,其余的留作后期经营资金。老板就是凭这些钱决定要买哪款新街机。

你可以想象这种系统对游戏设计的影响,你可以认为它是分析的主要形式。当下的Facebook游戏的发布方式绝大多数在本质上是一致的,与计算利润仅有一步之遥。然而,因为数字营销和实时参数指标,这个世界一下子就改头换面了。

分析就是分析数据后再整成报告。由于早期的Facebook游戏不是由传统的游戏工作室制作的,所以数字分析最早是由网页开发者引进游戏领域。网页开发者知道分析的价值,而掌机游戏领域现在才开始意识到,并尝试着将其运用于游戏开发。

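Swap the web concepts of “hits” and “bounce rate” into Facebook games and you get DAU (daily active users), MAU (monthly active users), and the combined metric DAU/MAU, which is used to gauge retention.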
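To make the DAU/MAU ratio concrete, here is a minimal sketch of how it could be computed from a raw activity log. The `(user, date)` event format, the 30-day window, and the function names are all assumptions made for illustration; real analytics services define these metrics in their own ways.

```python
from datetime import date, timedelta

def dau(events, day):
    """Distinct users active on a single day."""
    return len({user for user, d in events if d == day})

def mau(events, end_day, window=30):
    """Distinct users active at least once in the window ending on end_day."""
    start = end_day - timedelta(days=window - 1)
    return len({user for user, d in events if start <= d <= end_day})

def stickiness(events, end_day, window=30):
    """Average DAU over the window divided by MAU for the same window."""
    monthly = mau(events, end_day, window)
    if monthly == 0:
        return 0.0
    days = [end_day - timedelta(days=i) for i in range(window)]
    average_daily = sum(dau(events, d) for d in days) / window
    return average_daily / monthly

# Hypothetical log: user "a" plays every day, user "b" shows up once.
end = date(2011, 6, 30)
events = [("a", end - timedelta(days=i)) for i in range(30)] + [("b", end)]
ratio = stickiness(events, end)
print(f"DAU/MAU = {ratio:.2f}")
```

A ratio near 1 would mean almost every monthly player comes back daily; a ratio near 0 means most players appear once and leave.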

这些词除了给开发商带来声望,还设立了清楚明了、不容辩驳的成功指标:曲线图上的点。通过这些简单的指标,你可以即时地看到你的游戏如何快速积聚用户,留住用户和赢利。

然而,在DAU这种水平上分析,基本用途非常有限。这种分析只能反映现在,不能解释游戏为何、如何产生这些数字。正是通过设计理解、深入分析和经验的组合,才能进阶到下一级别。

现在游戏已经“云”化了。流媒体技术是早期的数字分布渠道之一,很快就深入到分析学和硬件调查的领域。Facebook拥有更高级的用户统计法,且已经嵌入分析功能(更别说它还重塑了我们的隐私概念)。

Android和iOS设备都通过“云技术”传输内容。Google拥有大量在线API用于分析,我只盼望某天像OkCupid这样的网站可以提供分析程序。(这是一个分析领域的金矿!)当代和次世代游戏机都有数字渠道,我认为这个趋势将继续发展。

我们在那个方向上走得越远,我们就越容易得到分析数额,此外,社交图谱也随之而来。所有玩家之间的关系,游戏内的互动和玩家的游戏史,都可以编成一份独有的文件夹。网页分析和游戏设计开始把这些海量的信息整合成新的东西。

因为游戏比网站提供的用户活动信息的层次更深,所以你也可以利用分析来更深入地观察玩家的行为。早在我们想到Facebook以前,Will Wright会不会是利用分析进行游戏设计的第一人呢?2001年,在电脑游戏期刊《Game Studies》对他的采访中,Wright说到数据如何揭开两大《虚拟人生》玩法:建房和建交。之后,这款游戏果然进一步为这两大玩家的目标而服务。

他继续谈到,有朝一日,分析可以用于为个体玩家打造游戏。现在这已经有可能了。不只是从参与的玩家身上收集信息,玩家行为的方方面面都可以收集起来用作分析。通过什么、何时、谁和为什么这些问题以及大量推测性信息现在都可以进一步研究了。

把玩家分成两个不同的类群,这样游戏开发者就可以根据玩家的类型来制作游戏。如果在动物园游戏中针对紫色企鹅收费10美元,无法填补你的月预算赤字,那么就把10美元的紫色加布迪(游戏邦注:意大利著名跑车品牌)引入差不多完全相同的汽车收集游戏中吧(迎合部分收入颇丰的中东受众)。

并非所有玩家群体的价值都是相等的。通过制作针对少数人的内容,你可以从主流受众中开发出“间隙市场”,或者把主流受众划分成更易管理的发展目标。并非所有玩家的价值都是一致的,这是同样的道理。

识别你的早期玩家和作为社交中心的意见领袖,对游戏公司大有益处。这些玩家是病毒传播的代理人,是成功的途径。识别他们并且让他们知道自己的奖励和特权地位。尽早告之下一款游戏,鼓励他们去尝试新游戏。剩下就好办了,其他玩家可能就会追随他们的步伐相继而至。

别忘了,这都可以自动化。服务器上的游戏一天可能更新两次,如果有必要,没理由让每个用户都看到相同版本的游戏。你不仅可以给最受欢迎的玩家“特别版”,你还可以发布“设计版A”给15%的用户,“设计版B”给剩下的用户。

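Watch the analytics to see which version performs better, then move the whole game to that design. Lather, rinse, repeat. This is “A/B testing,” and you can use it to explore every aspect of a design. Just be careful not to test different prices for the same digital content, because players compare notes on forums and become suspicious.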
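The split described above (one design for 15% of users, another for the rest) can be sketched roughly as follows. The hashing scheme, the experiment name, and the retention counts are hypothetical, and the two-proportion z-test is just one common way to compare the groups; the article does not say which statistic to use.

```python
import hashlib
import math

def assign_variant(user_id, experiment, share_a=0.15):
    """Deterministically place a user in 'A' (about share_a of users) or 'B'."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # roughly uniform in [0, 1)
    return "A" if bucket < share_a else "B"

def two_proportion_z(success_a, n_a, success_b, n_b):
    """z statistic for the difference between two conversion rates."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return (p_a - p_b) / se

# Hypothetical user base and made-up retention counts for the two designs.
users = [f"user{i}" for i in range(10000)]
share = sum(assign_variant(u, "shop_layout") == "A" for u in users) / len(users)
z = two_proportion_z(180, 1500, 850, 8500)
print(f"share in A: {share:.3f}, z = {z:.2f}")
```

Hashing the user ID means a player always sees the same version across sessions. By the usual convention, an absolute z above about 1.96 suggests the difference is unlikely to be chance at the 5% level.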

不过我认为没必要这么赤裸裸。我们不能消极被动,而要先发制人,通过前瞻性设计找到成功所在。因为提早列出概况,我们现在有了强大的理论框架,这个理论框架是关于与玩家联系、通过行为理论和神经学发展强制的游戏反应。A/B测试、统计、社交图谱和无数自愿参与的玩家都集中在这座旨在实现完美设计的实验室里。

我建议用小部分玩家做实验,看看他们对某种机制的条件反射情况如何,这样就可以优化时间和奖励分配。要雇用和培养统计员,这样我们可以根据他们的敏感性来追踪用户,定位用户、并最终留住用户。

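Rather than revising the same design again and again, estimate how effective a design will be within a particular market segment, then evolve the old design into a new, more sophisticated one. A rising DAU/MAU ratio, the retention measure, will confirm whether it worked. Remember, too, that an old design may still suit a new audience.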

游戏是否利用了玩家?

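As we have seen, we have a scientifically validated reward system, refined by the thousands of experiments that a vast sociological laboratory runs every day. Wonderful. The system reliably hooks people who have never played games before, children growing up online, unemployed adults, and people killing time at work. What do games give them in return? “Fun”?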

诚然,营利性实验室早在电子游戏分析学出现前就存在了。从一开始,我们就互相操纵,我们建立实验室来接收别人的想法(这叫作“交流”)。在游戏出现以前,广告就在改良操纵方法,而在广告出现以前,传道和修辞又差不多是这么做了。甚至我写下这些文字也是操纵你去思考我现在所讲的事实。

网络赌博、神经科学和分析学只是提供了控制行为和产生利润的强大利器。这种情况并不仅局限于游戏领域。

Jesse Schell在DICE大会的演讲《Design Outside the Box》中刻画了一个令人堪忧的未来版本:商业的动机就是披上跟踪销售的“游戏化”外衣,但现实已经是这样了。

让我担忧的不是买十送一的咖啡券,而是市场上的那一张张信用卡背后的“奖励计划”。为什么会有“奖励计划”这么个奇怪的概念存在?因为它管用啊。

游戏现在变成一种信用骗局?没有叙述、没有社交背景、没有政治立场、也没有表达创意的机会,我们只是把玩家划入小小养殖箱中吗?

作为设计师,我们只是一次又一次地点击相同的程序代码,等着财源滚滚而来,然后我们又可以继续点击代码了?你厌烦了吗?你还有多少空虚的时间要靠点击这些东西来打发?能提供更多价值的游戏才值得更多的付出,人们只是需要认识到这点而已。

让用户理解系统,向他们证明为什么你的游戏值得他们付出。让他们抛开其他游戏。支持能够传递价值的游戏,拒绝利用简单的奖励机制把游戏变成数字娱乐的麦当劳食品。用故事创造游戏,用艺术创造游戏。机制和对乐趣的理论性理解是表达信息的强大工具,这远比积分、音乐和电影更强大。那就是道德,你可以靠它获利。

媒体的未来会朝这个方向发展。尽管开发者总是嘲笑“将游戏视为艺术”的这种想法,但毫无疑问,这个想法在讨论中将越来越频繁地提起。史密森尼博物馆计划2012年办一个游戏展,全国艺术基金会(National Endowment for the Arts)现在也开始资助有艺术价值的游戏。

这意味着什么?我几乎没遇到过哪个谈到这个问题的设计师是人文学科背景出身的,所以我抓住机会,按设计方向把它转译成:艺术创造文明。从个体水平上交流和操纵精神不能产生文明,而是在大多数人中共享想法和道德才能产生文明。

艺术创造了忠诚的亚文化,在这个水平上的条件反射行为更高级、更广泛,远超过老虎机和Facebook游戏的简单设计。在独立游戏开发者的庆典、芯片音乐的小宇宙和人们对《最终幻想7》角色忠贞不渝的喜爱中,亚文化形成了。 Link的美德影响了整整一代人,而《CityVille》中的道德观也许永远也不可能达到这个境界。

我们让行为操纵产生积极的作用。耶鲁大学进行了一项名为“Play 2 Prevent”的项目,旨在探索如何将游戏作为提高青少年在性行为中预防HIV意识的工具。可能有些人会资助游戏来训练和改造犯人,因为比起无线电视,这些游戏应该更有教育和改良意义。

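Analytics is your friend, too. You can experiment on and optimize your game just as the big publishers do, and use the results to give players more of what benefits their lives. Players like analytics as long as it benefits them. Package the data in a friendly form, give it back to them, and collect their feedback. Share data with other designers (perhaps we need a player analytics site?) and push back against companies that hide theirs. Design creatively, and build mechanics on social graph data that deepen interaction between real players.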

大量服务已经在朝这一目标迈进(例如Xbox排行榜、OkTrends博客),且人们有意识地把它像病毒一样传播开来。用分析学进一步发展你的概念。如果数据表明,玩家数量在下午5点达到顶峰,那么就把游戏活动安排在那个时间。实时调整游戏的系统,像《Left 4 Dead》中的AI指导,可以由分析学数据来驱动。欣然接受“云”技术这个概念,运用这个数据保持游戏动态。
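As a small illustration of scheduling an event around the daily peak, the sketch below picks the busiest hour from a list of session start times. The sample timestamps and the `peak_hour` helper are hypothetical, and a real system would also need to account for player time zones.

```python
from collections import Counter
from datetime import datetime

def peak_hour(session_starts):
    """Return the hour of day (0-23) with the most session starts.

    Ties go to the earlier hour.
    """
    counts = Counter(ts.hour for ts in session_starts)
    return min(counts, key=lambda hour: (-counts[hour], hour))

# Hypothetical session log.
sessions = [
    datetime(2011, 6, 1, 9, 15),
    datetime(2011, 6, 1, 17, 5),
    datetime(2011, 6, 1, 17, 40),
    datetime(2011, 6, 2, 17, 20),
    datetime(2011, 6, 2, 21, 0),
]
event_hour = peak_hour(sessions)
print(f"schedule the event at {event_hour}:00")
```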

心中有爱后才能追求利润(from gamasutra)

结语

玩游戏可以是一件很美好的事,甚至可能延展生命,但也可能导致人们上瘾般地沉迷其中,却不知自己在为素未谋面之人创造大量利润。因为我已经学过这些东西了,一天不见它们,我就过不下去了。当我因为不能完成逃不掉的专题报告而停止玩游戏时,我会想到它。当我读到某些我想学但不能学的论题,但文本写得很差时,我就会生气,因为我的意愿浪费在厌倦之中。

当我看到孩子们每天重复地做着枯燥无味的数学作业,我就心生同情,当我看到制作精美的广告却用来宣传不健康的东西,还被贴上艺术的标签,我就痛心疾首。我想到无数才能都浪费在做些事当中。一想到那些上瘾的人不断地玩维加斯的老虎机,所有的潮人不停地猛拍手机,希望Facebook快升级,满大街走着的都是一群不会思考的动物,我就不能再继续制作那种游戏了。但我现在还无法停止。

游戏与玩家之间如何互动?带着你的道德来设计吧。有时做点有趣又带强制性的东西也不错。但有时候,你也得被迫做出让步。在开发游戏的过程中,我已经做了不少令人羞耻的事——我妥协了用暴力对抗女人的原则,我模仿军用武器弹药,我还非常努力地研究如何让人们近乎强制地做事。

别放弃。如果你进入游戏行业是出于爱游戏,那么为它而战吧(你必须)。世道变化得快,游戏的未来就靠你了。

Part 2: Nicholas Lovell on Skinner-box mechanics and the alternatives

作者:Matthew Diener

与F2P的批评者交谈,你会发现他们许多人认为该模式令游戏降格至斯金纳箱,此类游戏设计就是为了引诱玩家为赢得奖励而掏钱。

所谓的斯金纳箱,就是新行为主义心理学的创始人之一的斯金纳为研究操作性条件反射而设计的实验设备。箱内放进一只白鼠,当它压杠杆时,就会有一道亮光或者声音响起,此时会有一团食物掉进箱子的盘中,动物就能吃到食物。实验发现,动物的学习行为是随着一个起强化作用的刺激而发生的。

在此我们将“食物”更换为“宝石”,而“杠杆”则是“触屏”,就很容易看出这一模式为何适用于许多热门F2P游戏了。但是,仅仅是这个模式能够带来成功,并不意味着它就是唯一选择。

在此,我们采访了GAMESbrief网站创办者Nicholas Lovell,与之探讨了F2P游戏未来走向等问题。

skinner_box(from blog.douglas.ca)

让我们先从行为主义入手吧。让开发者将游戏视为斯金纳箱的传统思路是什么呢?

首先我得声明一下,我并不是心理学家,也只能以门外汉的角度理解斯金纳箱。

斯金纳箱——更准确地应该称为操作性条件反射箱,起源于心理学家B.F. 斯金纳为了调查测试对象(通常是老鼠和鸽子)在得到特定奖励时会表现出哪种行为的实验。

老鼠可能按压一次杠杆就能得到奖励,也可能按压10次才有奖励,或者每隔10秒就按压一次。

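Skinner found that when he introduced randomness into the size of the reward and into the activity the subject had to complete, the animals repeated the action over and over, even to the point of harming themselves.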

由此得到的一个结论就是,我们也有可能使用不同奖励结构去鼓励受试动物——甚至是人类,去采取有违自身利益的行为。

早期的Facebook游戏,尤其是那些出自Zynga之手的产品,在某些人看来就是斯金纳箱:你出现了,做了某事,因此而获得奖励,然后又重复这一操作。

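For some players this is an enjoyable experience; for others it shows a lack of creativity. I think the latter expect a certain amount of innovation from games, so they feel let down when FarmVille does not meet that expectation.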

Ville类型的游戏中确有一些心理操控元素,但这些操控几乎在所有游戏设计中都有出现。

在你的GDC Next演讲中,你指出斯金纳箱最终会不可行,因为受试对象会意识到结果并没有什么不同。我们能不能说许多富有“粘性”的游戏之所以能成功,主要得益于玩家还没有这个意识?

我不确定情况是否如此。我喜欢《FarmVille》和《CityVille》,并在其中玩了很久,但我开始玩《CastleVille》时,就发现它与之前的游戏很相似。

我原本计划像之前两款游戏一样,刷三个月的任务,完成升级和相关任务。

但不到一会儿我就发现自己已经可以知道游戏所能提供的所有元素。我没必要在《CastleVille》中继续玩下去了,当然我也未必就是正确的——也许《CastleVille》确实具有我未曾预料的创新。

你也可以以此来指责AAA游戏,虽然这类游戏是以更多资产、场景和更大的预算来实现这种重复。我玩完了《神秘海域2》,但却发现已经没有再玩《神秘海域3》的热情了。

我认为它只是一个披着故事外衣的相同操作。如果它的重心在于故事而非玩法,那我情愿去看书。

对我来说,斯金纳箱结论的重要之处在于,你可以介绍某个之前从未接触这类玩法的人去体验《FarmVille》,这种“做事得奖励”的机制在他们看来可能颇为新鲜而值当。

但当这些人习惯了这一理念后,再向另一款换皮的游戏重复相同操作时,感觉就没这么新鲜了,因为玩家已经开始理解这个系统。

斯金纳箱是关于学习的事物,当你觉得自己已经掌握时,其奖励在本质上已经没有那么强的回报感了。坦白说,我很高兴看到不少用户说“我们厌烦了,来点新鲜的吧。”这是一个好现象。

你是否认为游戏中的“操作性条件反射”——例如基本的老虎机模式是持续可行的?

基于操作性条件反射的游戏将一直存在。而喜欢基本老虎机游戏的群体也同样如此。这里有相对较低的认知负荷,它是一种逃避现实的体验。

最后,如果这种游戏是持续可行的,开发者就需要在三个不同的领域增加游戏体验。

第一,你可以扩大场景——这种情况已经发生了。如果你可以看到一些非常壮观的景象,也就无所谓是不是能从游戏中学到新东西了。这正是为何当今的F2P游戏预算不断高升的原因。

第二,你可以增加奖励水平。如果玩家不再回应这些行为操作性条件反射的东西,开发者可以考虑“提高奖励水平”。真钱赌博运用的就是这个原理。

另一个是日本的complete gacha现象。也就是你提高奖励水平,但如果玩家想获得奖励,就要完成很多任务才行。

你可以三次重复这一循环,它有可能奏效,也有可能无效,如果无效那就重新开始循环。

从操作性条件反射观点来看,嵌入式随机性极具吸引力,以至于日本政府不得不出面禁止这一机制。

最后,你的第三个选择就是进行创新。如果你是基于数据分析的开发者,那就会比以上两者更难实现创新,所以你要不就扩大场景,要不是提高奖励。

日本最近宣布取缔“kompu gacha”这种极端利用操作性条件反射的盈利方法。这一禁令背后的原因在于该机制在赚取玩家金钱方面极为成功。这种机制对于操作性条件反射型的游戏来说难道不是很有意义吗?

如果我早期的结论是它并不可行,那么他们还有必要取缔这个机制吗?

没错,它确实很可行,但它是在极端情况下可行,或者说它会对社会的脆弱群体带来影响。我认为日本发现这种双重随机性如此盛行,以至于需要政府介入保护某些用户的利益。

我并非政府干预的死忠粉丝,但有些情况下确实需要保护人们的利益不受损害,同理,我也不主张这类游戏瞄准意志薄弱的群体。因为这是道德上的错误,也并非什么长久之计。

所以,我并不是说游戏中的操作性条件反射就是完全无用的,或者说它完全不可行。我只是认为在一个拥有1亿人的大众市场中,简单地重复某件事在吸引玩家这一点上将日渐失效,因为我们并没有由此学到或体验到新事物。

如果开发者想最大化高消费用户的终身价值,那么应该采取哪种可替代斯金纳箱机制的方法呢?

我不认为斯金纳箱机制催生了高消费玩家。我对高消费玩家或者“超级粉丝”的看法是,你得让这些人先喜欢上你的游戏,然后让他们为自己真正在乎的东西掏钱。

那么,究竟有什么方法可取代操作性条件反射呢?那就是让人们爱上你的游戏。

有一个方法是让人们对你的游戏上瘾,但实际上,如果能够让人们自愿回到游戏中的,那么你的游戏就会更为成功和令人快乐。

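A recent EEDAR survey shows that people do not regret spending money on things they consider good value. If they really like your game and want to pay you, sell them items that resonate with them emotionally.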

玩家通常会因情感和价值感而购买虚拟商品。玩家希望成为某个群体中的一员,或者与他人保持距离。他们重视自我表达,重视好友以及成为团队的一员,他们重视自己的时间甚于金钱,他们重视的事情有所不同。

这种方法与纯斯金纳箱机制(操纵人们的大脑,让他们去重视实际上并不重要的东西)的区别在于,它的目的在于创建一个让人们乐于花钱的社交环境。

所以,开发者不应该操纵玩家大脑让他们去重视某物,而应该让玩家自己去重视某物?

没错。

在很大程度上讲,这意味着提供一种社交情境。这未必是像《军团要塞》中的同步多人环境,它可以是像《Candy Crush Saga》中的地图,让玩家看到好友的进程,以便玩家加快速度赶上对方的进度。

Part 3: The relationship between dopamine release in the brain and games

作者:Ben Lewis-Evans

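A few weeks ago I was listening to a gaming podcast when the hosts described a particular game as “giving them a dopamine hit”: they were having fun and wanted to keep playing. (游戏邦 note: dopamine is a neurotransmitter, a chemical in the brain.) The remark was offhand, but it reflects a common view: dopamine release is about fun and reward, and so it matters for gameplay. Is that view correct?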

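Go back 30 years or more and researchers would indeed have told you that dopamine is about pleasure and reward. An early experiment behind the idea placed electrodes in rats’ brains to stimulate the regions responsible for producing dopamine. The rats could trigger the stimulation themselves by pressing a lever, and to get it they would press the lever at the expense of basic needs: they preferred pressing it to eating, socializing, or sleeping. Together with later evidence, this made it seem convincing to label these regions “pleasure centers.”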

dopamine-expression(from scienceofeds)

所以那时候的结论便是,多巴胺是让我们喜欢奖励,并鼓励我们去追求奖励的最重要化学物质。同样地,主持人当下也传播了有关多巴胺作为一种奖励和乐趣的化学物质的理念。

不幸的是(不过在长期看来却是幸运的),大脑并没有这么简单。科学一直在发展着,所有的事物也都在发生改变。而该领域的主要研究者建议关于“多巴胺能做什么”的最佳答案是“混淆神经系统科学家。”

虽然该答案很有趣,但却不能帮助游戏领域中的人更好地理解自己的游戏是否能够影响玩家的大脑。同样地,对于我来说撰写本篇文章的目的便是为了解释当前的科学对于多巴胺所扮演的角色和奖励的影响。此外我也会尝试着根据这些神经递质对于游戏创造者的意义而提供一些相关评论。

需要注意的是,在接下来的内容中我将专注于谈论多巴胺(神经递质)本身的效果,而不会谈及有关大脑领域中有关成果或抑制等内容。同时我也会专注于讨论最重要也是最有趣的例子和实验,所以这并不是针对于某一对象的学术评论。

喜欢,学习或想要获得奖励

处理多巴胺问题及它能做什么的一种有效方法便是根据喜欢,学习或想要去分解人们对于奖励的反应。也就是说,当提到你对于奖励的反应(如在游戏中获得某些内容)时,你是单纯喜欢奖励(如它很有趣),掌握了适当的方法去获得奖励(如你能否真正地玩游戏),以及你是否愿意努力获得该奖励(它是否能够刺激你继续游戏)都是完全不同的内容。

喜欢游戏

如果你为了获得某一奖励而有所付出,你便会喜欢该奖励。这便是引导着早前的研究者做出多巴胺与乐趣(“喜欢”)相关的假设的主要原因。基本上来看,他们认为如果老鼠感受不到乐趣的话又怎么会长时间去推动杠杆呢?

关于自我刺激的老鼠的理念是一种可理解的假设。虽然最终证明人们通常都很喜欢奖励,但是为了能够有效改变行为,它们不需要获得人们的喜欢,况且多巴胺本身并不会直接包含于“喜欢”和乐趣中。

随着研究的发展,我们越来越清楚动物也具有自己的能力去创造多巴胺,所以即使它们的多巴胺释放被阻碍或限制(通过药物,手术或遗传),我们也仍然能够证实这些动物“喜欢”某些事物。就像一只因为基因转变而不能释放多巴胺的老鼠它也仍会对糖水或其它食物表现出偏爱。因为这些老鼠喜欢甜味的水,所以在能做出选择时便会略过没有甜味的水。此外,你可以通过遗传工程赋予变体老鼠额外的多巴胺,而这些动物不能呈现“喜欢”不同食物的任何额外标记(尽管在它们的脑子里也充斥着所有的多巴胺)。

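That covers rats; what about humans? Researchers cannot create mutant humans, and it is hard to test drugs or perform brain surgery directly on people. But we can observe patients with Parkinson’s disease, which impairs dopamine production. Like the rats, these patients show no change in how much they like rewards such as sweet tastes.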

考虑到上述的发现以及其它相关内容,研究人员很难继续坚持多巴胺是一种乐趣或“喜欢”化学物质的理念。实际上,在1990年,一名主要的研究员同时也是多巴胺与乐趣息息相关这一理念的主张者Roy Wise便声明:

“我不再相信乐趣的分量与充斥于大脑中的多巴胺数量是均衡的。”

的确,比起多巴胺而言,像类阿片和大麻类等其它神经递质更加贴切“喜欢”奖励。尽管我们也需要注意在大脑中释放类阿片可能间接导致多巴胺系统做出反应,这也再次解释了之前关于多巴胺角色的困惑。但是就像之前提到的,从遗传角度来看老鼠不能在创造出多巴胺后仍喜欢某些事物!

所以你在游戏的时候是否感受到了愉悦?也许多巴胺并不是创造这种感受的原因。同样地,如果有人告诉你他们的游戏是围绕着扩大多巴胺传达进行设计,那么这便不能代表他们的游戏就一定是有趣的。

学习游戏

如果多巴胺并不是关于乐趣,那么它能做些什么?还有另外一种假设(在20世纪90年代开始盛行)是,多巴胺将帮助动物学习如何且在哪里获得奖励(这是在游戏中同时也在生活中需要记得的非常有帮助的内容)。当科学家开始注意到多巴胺活动会在奖励传达前开始提高,从而帮助动物预测到未来奖励这一情况时,这种假设便开始出现了。换言之,当动物之前看到某一刺激与奖励是相互维系在一起时,多巴胺便会被释放出来,从而预测到即将到来的奖励,而不是作为奖励本身的反应。似乎当奖励是不可预测时(就像游戏中随机掉落的奖励),多巴胺系统中的活动便会增加。如果多巴胺是关于学习,那么当动物期待或掌握了一个不可预知的奖励可能出现时,多巴胺活动便会增加。毕竟,如果奖励是不可预知的,你便会投入更多关注/尝试并了解相关奖励标记,从而找出在之后获得奖励的更有效的方式。

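Again, the evidence for dopamine’s role in learning looked reasonable, and again mutant rats undermined it. In one study in this area, scientists at the University of Washington found that rats unable to release dopamine not only still “liked” rewards but could also still learn where the rewards came from. After an injection of caffeine, these mutant rats could learn that the reward was on the left side of a T-maze. The caffeine does not act through dopamine release, but it was necessary, because without it the dopamine-deficient rats did nothing at all. In fact, these rats die from lack of food and water unless they are given regular injections of a drug that restores their dopamine function.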

除了上述的实验,当提到学习时,老鼠释放了比往常更多的多巴胺并不能证实它们拥有更多优势。但是就像之前提到的,缺少多巴胺的老鼠会饿死则意味着多巴胺具有一定的作用。但如果多巴胺既不是关于获得奖励的喜悦也不是学习如何获得奖励,它又能做些什么?

想要(渴望,需要)游戏

基于现在的科学,似乎多巴胺与想要奖励更加贴切。这并不是一种主观感受或像“我想要在今晚完成《黑道圣徒IV》”这样的认知声明,而是一种获得奖励的动力或愿望。所以这并不是关于“喜欢”和乐趣的感受,而是推动着我们去做某事的需求或动力。主观上来看,这就像你今晚不得不在停止前于《文明》中再玩一轮或在《暗黑破坏神》中获得更多战利品。当讨论到老鼠实验的结果时,有些研究人员认为多巴胺创造了一种“吸引力”或强制力推动着对象去获取奖励。的确,有人会认为多巴胺扮演“学习”角色的证据只是“想要”获取一个未知奖励的标志,并因此刺激学习作为一种副作用而出现(如果我想要某些事物,我们便有可能尝试着去学习如何获得它)。

我们可以再一次使用变体老鼠去证实多巴胺的“想要”角色。基于不能释放多巴胺的老鼠,它们缺少朝奖励前进的动力。这便意味着这些老鼠虽然喜欢甜味的水,知道甜味水是来自饮水管的右边,但是它们却缺少动力走过去喝水。相反地,拥有比普通老鼠更多多巴胺的变体老鼠拥有更多动力去获取奖励—-不管是基于它们接近奖励的速度还是它们为了获取奖励所付出的努力。是否还记得那些脑子里带有电极,并且会为了获得更多刺激而牺牲一切的老鼠?我们也能够根据“想要”刺激进行解释(而不是“喜欢”刺激)。就像带有强迫性神经官能症(OCD)的人会反复洗手一样,尽管它们并不能从该行动中获得任何乐趣(实际上这是一种被迫性行为)。

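Turning to humans, many studies of patients with Parkinson’s disease show that when drugs are used to boost dopamine release, the feeling of “wanting” rewards increases and compulsive problems follow. Some of these patients develop compulsive shopping and other “manic” behavior.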

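Moreover, in reports on people who have had electrodes placed in the brain’s “pleasure centers,” we see that their desire and motivation to perform all sorts of activities rises, but we see no reports that they become happier. By many measures this is a “wanting” response to dopamine self-stimulation rather than a “liking” response. It is worth noting, though, that if you start out depressed and are suddenly motivated to do things, your mood may improve as a side effect.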

关于上述所有研究的结果便是,比起乐趣或学习,多巴胺更倾向于动机或强迫症。所以当我听到播客的主持人说因为游戏能够释放玩家的多巴胺,所以它们是一种强迫性行为时,我想他们应该是对的。但是多巴胺并不能直接赋予游戏乐趣。

这对于游戏来说意味着什么?

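These days we love to attach the prefix “neuro” to everything. In academia this has prompted the rise of neuroskeptics, who ask to see more evidence. There is no doubt that “neuro” is popular. Surveys even suggest that people tend to judge data presented in neuroscience terms as more scientific than the same data presented in ordinary terms.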

所以知道所有的这些神经系统科学对于那些创造游戏的人来说意味着什么?从使用角度来看,这对于每日游戏设计的作用并不大。我在此呈现的神经系统科学都是纯理论的,而不是使用科学,是基于我们所知道的某些特定奖励的传达奖励的方法具有特别的刺激性和乐趣的角度(在不同范围内可能或不可能与多巴胺有关)。例如:

*不可预测的奖励(随机掉落的战利品)总是比那些可预测的奖励更具有刺激性。

*奖励应该具有意义,例如食物对于那些已经吃饱的人来说便没有多大刺激性,或者如果你使用的是缺少突出视觉效果的设置,那么全新且独特的刺激内容便能够更轻松地吸引你的注意。

*人们更喜欢即时奖励和反馈,并且很难受到延迟奖励和反馈的刺激。在年轻时期,这种对于即时满足的偏好最为强烈,但也会随着年龄的增长而继续保留着。

*学习去获取并想要某种特定的奖励的想法会因为怎样的行为反应创造了该奖励的即时反馈而增强。关于怎样的行为创造了奖励的不确定性通常都会引起试错式探索,如果出现更进一步的奖励,这种情况便会继续下去。

*如果人们察觉到自己正在朝着奖励前进,即使该过程是幻觉,他们也有可能受到刺激而获得奖励。

*同样的,人们更愿意报告他们努力去挽留自己所拥有的内容,而不是获取自己未曾拥有的。

*人们总是更倾向于大数目。因此在某种程度上它们更喜欢通过战胜每个怪物获取100个XP或需要1000个XP进行升级的系统。

*预测一种奖励能基于行为反应而替代其它奖励(如在游戏中获得点数与拥有乐趣和点数是相互联系,并因此成为了一种刺激性奖励)。

*人们不喜欢那些让人觉得是受控制的奖励。

*如果某些内容比其它奖励更加难以获得,那么精通,自我实现以及不费力的高性能等感受便是一种奖励。

同样地,我所谈及的神经系统科学研究并不是针对于如何创造奖励自己或乐趣,而是注重理解它对生理的影响。如果这是基于一种使用角度,它通常是关于使用药物或直接的大脑刺激去获得结果。

基于游戏从实践的角度来看,然后着眼于游戏设计方向的行为将比从神经系统科学中寻求答案更有价值。的确,即使你能够记录下与游戏相互动的每个玩家的准确多巴胺活动,这也不能创造出具有实质性差别的设计结果。不过也存在例外,即基于理论的神经病学方法可以在无意识的情况下检测出玩家是否想要玩游戏,在这个例子中你也可以无需担心神经病学原理而知道未来行为的结果。这一切都说明,如果你真的想要知道自己的游戏将对人类大脑产生怎样的影响,或者你正致力于一款严肃游戏并想要知道游戏是否能够完善大脑功能的话,那么神经系统科学便是非常有帮助的方法。

因为当下人们更倾向于接受“神经”组件具有科学性的理念,所以基于这种模式去谈论游戏将会是一种更加可行的市场营销策略。但是我们也必须谨慎行事。现在人们似乎认为多巴胺与乐趣相关。同样地他们可能并不在意游戏宣传的目的在于提高多巴胺回应。但是如果某天公众认知发生了改变,人们将会更加清楚多巴胺是关于想要和动机,而不是乐趣,那么这些信息将变得更加难以揣测。因此,信息会从“这款游戏是致力于呈现乐趣,所以你才会想要去尝试它”变成“这款游戏的设计目的是为了让你去进行体验,有可能你根本就不喜欢这么做”。此外,还有一些证据能够证明那些备受压力(生理或心理)或缺少刺激的人更容易受到多巴胺激励效果的影响(这具有进化意义,就好象你现在处在一个糟糕的境遇,你便会受到激励而走出困境并冒险去完善状况—-但是在现代生活中,这种倾向有时候却是有害的)。结果便是,如果你是为了推动玩家多释放多巴胺并将其与盈利维系在一起而设计游戏,你有可能会发现这种方法将有效作用于那些不能有效捍卫自己同时也不大会为游戏花钱的人身上。除了道德感外,这种方法并不能为你带来什么,这种围绕着“多巴胺设计”的游戏(游戏邦注:即依赖于不确定奖励系统且带有直接行为反馈系统的游戏)可能会引来政府的管制(就像日本便已经限制了手机游戏中的某些“赌博”组件)。

一个复杂的问题

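Before closing, we should note that the brain is a complicated subject. When the popular press covers neuroscience, it usually talks about the brain’s “pleasure center” or about a particular neurotransmitter doing one particular thing. That is not how things really are; it is a side effect of trying to tell a story. In reality, physiology usually follows a many-to-one or many-to-many pattern. It is rarely a case of X causing Y. More often X, Z, B, and C together cause Y, or any one of X, Z, B, or C causes any one of Y, J, A, K, or U, depending on the situation. And because neurotransmitters do not exist in isolation, a change in one often affects others. Dopamine is a good example: it is the precursor of another neurotransmitter, norepinephrine, so while dopamine is involved in wanting rewards, other neurotransmitters may contribute to that effect as well. Perhaps dopamine produces wanting only in certain contexts and has different effects at other times.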

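Another complication is that activating (or inhibiting) a brain region that produces a neurotransmitter such as dopamine can have effects quite different from those of the neurotransmitter itself, which is the main reason I said at the start that I would discuss the action of neurotransmitters rather than brain regions. In addition, receptor sites can often be activated by several different chemicals (some of which may bind even more readily than the neurotransmitter itself), which means that when dopamine is absent, other chemicals may bind to those sites. A further complication is that dopamine is also released during repetitive movement (游戏邦 note: such as playing Rock Band or hammering a button on a controller), which may or may not have anything to do with reward.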

总结

在本篇文章中,我也提到其它神经递质的名字(如阿片类和大麻类),但是我主要还是专注于多巴胺所扮演的角色及其对于“想要”奖励情感的影响。

还有许多神经递质能够对人们对于游戏的反应产生影响。就像血清素。同样的,许多游戏也不只是关于奖励。它们也包含了社交元素(催产素和加压素便专注于这一点),竞争,技能表现,负面情感和正面情感,惩罚等等元素。的确,游戏与人类的大脑具有密切的关系。而如果你对大脑这一复杂对象感兴趣,你就需要更深入地了解并学习。但你也必须清楚,当前的人类更倾向于了解与神经相关的主题,而不愿变成神经怀疑论者。

Part 4: Using Skinner’s principles to examine the ethics and fun of social games

作者:Benjamin Jackson

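In the 1890s, the Russian physiologist Ivan Pavlov was studying natural science at the University of St. Petersburg, analyzing salivation in dogs. Pavlov found that the dogs salivated more before being fed, and that the mere sight of a lab worker’s white coat triggered salivation even when the worker carried no food. So he tried ringing a bell whenever he fed them, and found that over time the dogs salivated at the sound of the bell alone, even when no food was offered. Pavlov’s finding is what we call “conditioning”: a primary reinforcer (游戏邦 note: something that triggers a response naturally, such as food or pain) becomes associated with a conditioned, or secondary, reinforcer such as the lab coat or the bell.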

40年后,年轻的心理学家伯尔赫斯·弗雷德里克·斯金纳(Burrhus Frederic Skinner)以巴甫洛夫观察到的现象为基础构建新的理论。他建造了一个隔音且无光照的场所,在其中喂养小动物。在动物可触及的范围内放置杠杆,该杠杆可以触发一级强化物。这个被称为斯金纳盒的设备成为随后许多实验开展的工具,这些研究都获得了一定的突破,比如可卡因在单独环境与较大社区中的相对成瘾性以及探索老鼠是否拥有情感共鸣等问题。

skinner box(from theatlantic)

现在,斯金纳被誉为操作性条件反射之父,这是种通过联系某些一级强化物来让对象在特定条件下对二级强化物做出反应的研究形式。斯金纳的研究不仅表明自然界中的一级强化物和二级强化物存在联系,还显示出人们还可以制作出新的强化物。

斯金纳和巴甫洛夫证明,一级强化物是很强大的刺激因素。除了性行为和睡眠外,熏肉是自然界中最强大的一级强化物之一,部分原因在于,与其他肉类相比,它拥有丰富的脂肪和蛋白质。熏肉成了人所共知的“开胃肉类”,其味道可以触发很强烈的食欲,对素食主义者也不例外。但是在现代世界中,我们对熏肉和其他高脂肪事物的本能渴望会带来严重的健康问题。

这个盒子还让我们明白了两个基本道理,其中之一的延伸已经大大超过斯金纳的研究范围。与其他动物一样,人类也会对一级强化物做出很大的反应。在我们产生满足感后一级强化物的效果会减弱,于是金钱或社会地位等二级强化物便会成为我们的需求,而这些需求不存在满足点。换句话说,我们很希望能够获得同辈的认同,而且永远都不会满足于所获得的认同。

许多人认为《FarmVille》是无害之物,他们辩解就算没有玩游戏,我们也会把数千个小时浪费在其他行为上。毫无疑问,社交游戏市场依靠虚拟货币和无限的商品成为巨大的摇钱树。当你认真研究《FarmVille》后会发现,这款游戏的所有设计显然只有一个目标:尽量让用户在这片虚拟土地上多花时间。游戏利用了我们回馈好友赠礼的本能,让我们不断请求好友回到游戏中。精心设置的作物成熟时间强迫玩家每天都要定期回去查看他们的农场。其他游戏采用的又是什么技术呢?

道德性

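Setting moral relativism aside, and assuming we can define “bad” clearly, I believe “bad” games do exist. In Groundwork of the Metaphysics of Morals, the German philosopher Immanuel Kant tried to define this with the “categorical imperative,” a set of rules for measuring the morality of an action. But however we choose to assess the morality of games, some traits will inevitably push a game toward the “evil” end of the range.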

不道德游戏的主要特征是操纵、误导或同时兼有两者。从用户体验角度来看,这些游戏呈现出阴暗模式,比如那些引发用户做与其意志相反的设计元素。阴暗模式往往用来使成功指标(游戏邦注:比如注册邮箱、激活或升级数量等)达到最大化。

比如,《FarmVille》、《Tap Fish》和《Club Penguin》利用深层次的心理冲动来从用户处赚取金钱。通过呈现进度条,他们利用玩家更快完成的欲望,鼓励用户付费升级。游戏中的随机时间奖励像赌博机那样吸引玩家回到游戏中,即便这种行为不会让玩家获得多少好处。他们通过让玩家不断向好友提出要求来进行病毒式扩散。

club penguin(from theatlantic)

这种趋势并不只在社交游戏领域中出现,还包括《美国陆军》等许多战斗游戏,这款游戏的制作由美国军方赞助,用来作为招募士兵的辅助工具。有些品牌产品也发布了Facebook游戏,比如《Cheez-It’s Swap-It!》,他们将此作为出售更多产品的工具。这些技术可以用在任意类型的游戏中和任何背景下。

当然,并非所有游戏都能够被清楚地划归善或恶。“好”游戏没有要求玩家进行批判性思考和解决问题,但是仍然会令人感到愉快。《俄罗斯方块》是款复杂和内容丰富的游戏,其吸引力与1988年首次面世时相比丝毫没有减弱,但许多人辩解称,游戏缺乏解决问题的挑战或丰富的故事情节,而这些正是《Sword & Sworcery》和《塞尔达传说》等冒险游戏充满吸引力的因素。不同的人可以看到游戏玩法不同层面的意义,这才是让玩家和游戏设计师对游戏充满兴趣的原因。

困难趣味

横向卷轴跳跃游戏《屋顶狂奔》是最畅销的独立iOS游戏之一。游戏内容是一个小人在虚拟城市的屋顶上奔跑。玩家点击屏幕让小人跳跃,小人的奔跑速度会逐渐增加。《屋顶狂奔》并没有终点,只有无尽的奔跑:目前最长的奔跑记录是,玩家花了8分16秒时间穿过22公里的倒塌建筑物、掉落的障碍物和妨碍小人奔跑的窗口。

屋顶狂奔(from theatlantic)

在2011年10月的IndieCade上,《屋顶狂奔》制作者Adam Saltsman讨论了“持续时间直至死亡”的观点。我们所有人在地球上生存的时间都是有限的,无论任何时候,当我们将时间花在某个行为上,就无法将其花在另一个行为上。这意味着,我们花在游戏上的时间也是投入的成本,这项成本比我们花在游戏上的金钱还要多。如果在这段时间内能够感受到乐趣,那么这样的游戏才值得我们投入时间。

不同的人会以不同的方法来创造价值,但是最直接的是让玩家产生参与感直到他们精通游戏。2011年西雅图Casual Connect举办的讨论会上,游戏设计师兼顾问Nicole Lazzaro描述了两种类型的趣味性:简单趣味和困难趣味。如果游戏向玩家呈现的挑战没有超过“简单趣味”点,那么他们永远也不会精通游戏,这也会使他们失去玩游戏的兴趣。

Zynga纽约工作室总经理Demetri Detsaridis也参加了此次讨论会。Zynga对《FarmVille》及其同类游戏中“真正的趣味性”有着自己的看法,这种看法与公司的商业利益相符。他对公司如何利用“简单”和“困难”趣味的说法是:

我们在开发游戏时脑中并不一定有这样的框架,在我昨天看这场讨论会的主题时,我在想:Zynga的大量开发工作都是在这种‘人类’趣味和‘简单’趣味的循环间完成的。这两者之间并没有清晰的界限,存在许多重叠的部分。我觉得许多社交游戏已经做到了将‘人类’趣味和‘简单’趣味融合起来,至少3/4的游戏已经实现。所以你会看到困难趣味出现在令人惊讶的地方,比如考虑自己的社交圈范围,以及你在现实生活中如何向好友发送请求。这些都是社交游戏的组成部分,而且设计师明白这一点,所以几乎所有‘困难’趣味都是以人类和简单趣味为中心。

Rob Tercek, the moderator of the session, summed this up, and Detsaridis agreed:

游戏本身并不是动作发生的地方,其策略元素存在于:你要何时利用社交圈?你要何时向好友发送信息?你是否频繁使用这些功能?

用更容易理解的话来说,Detsaridis认为玩Zynga游戏最具吸引力的部分在于决定何时以及如何利用消息来提醒好友玩Zynga游戏。

创造困难趣味并不是项容易的工作,它要求设计师深入思考玩家体验,而不是只使用简单的技巧来推动参与度。《FarmVille》、《Tap Fish》和《Club Penguin》都采用了类似斯金纳的技术来说服玩家投入更多的时间和金钱。但是,创造参与度的方式还有很多,作为游戏开发者和消费者,我们有义务要求这种娱乐方式呈现更有诚意的趣味性。

(Compiled by 游戏邦; please credit the source when reposting.)

Part 1: Ethos Before Analytics

by Chris Birke

[In this article, designer Chris Birke takes a look at research, examines what's going on in the social games space, and argues for an approach that puts creative ethos before data-driven design -- but without ignoring the power that game designers wield over players.]

A little over 10 years ago I read an article titled Behavioral Game Design written by John Hopson. Now, looking back, I see what a huge influence it’s been on my game design philosophies. I have been following psychology and neuroscience ever since, always uncovering new ways to incorporate them into an ever-growing design toolbox.

Technology is moving very fast, and 10 years is very long in internet time. Wikipedia was launched 10 years ago; Facebook, World of Warcraft, and Gmail have been around since 2004. The iPhone was born in 2007, and FarmVille recently turned two. Science is moving just as quickly, and behavioral theory is now being underwritten by neuroscience, and the revelations of high resolution, real-time brain mapping (fMRI). On top of this we now design with the aid of analytics, the real-time data-mining of player behavior. We can roll out a design tweak once a day, if necessary, to maximize profits.

What are the ethical implications? As a curious proposition, “Behavioral Game Design” seemed innocent enough. Now that design toolkit verges on a sort of mind control, and the future is promising only refinement of these techniques. What are we doing to players, and what have we left behind in those innocent days of chasing “the fun”?

Personally, so long as I can make enough money to eat (and maybe have a good time), I feel obligated to design socially responsible games that benefit the lives of players, not just exploit them. I want to explore ideas of how to use these new technologies in a positive way, and to encourage those who feel the same.

I would like to share some of the neuroscience that attempts to explain how behavioral conditioning works in games, and go into how this can be used in the context of analytical game design to maximize player compulsion. Then I will go into some ideas for how to use these tools ethically, and hopefully inspire discussion in our community. But first, a brief review of behavioral conditioning.

“They’re waiting for you, Gordon, in the test chamber…”

Most behaviorists don’t use the words “Skinner Box.” Skinner himself didn’t want to be remembered as a device, preferring to call it an “operant conditioning chamber.”

It is a cage used to isolate the subject (usually a pigeon, or a rat) with only a button to operate and a stimulus (a light, for example) to be learned. Pressing the operant (button) releases a reward (food), but that’s reliant on pressing it correctly in response to the stimulus.

It was with this that Skinner explored the nature of learning and, further, how to maximize or disrupt the compulsive behaviors of his subjects. The results, in short, showed that the schedule of rewards in response to stimulus greatly affected how animals (like you and me) responded to their training. The most compulsive behavior was not driven by “fixed ratio” rewards, where a stimulus meant a consistent prize for correct actions, but instead by a semi-random “variable ratio” schedule. Maybe you would win, or maybe not. Keep trying, just in case — you’ll figure it out eventually.
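The difference between the two schedules is easy to see in a toy simulation. The sketch below is only an illustration under simple assumptions: a “fixed ratio” chamber pays out on every fifth press, while the “variable ratio” chamber pays out on each press with probability 1/5, so the average cost is the same but the timing is unpredictable. The function names and the numbers are made up for the example and do not come from Skinner’s experiments.

```python
import random

def fixed_ratio(n):
    """Return a press() function that rewards every n-th press."""
    count = 0
    def press():
        nonlocal count
        count += 1
        if count == n:
            count = 0
            return True
        return False
    return press

def variable_ratio(mean_n, rng):
    """Return a press() function that rewards each press with probability
    1/mean_n: mean_n presses per reward on average, at unpredictable times."""
    def press():
        return rng.random() < 1.0 / mean_n
    return press

def gaps_between_rewards(press, presses):
    """Press repeatedly and record how many presses each reward took."""
    gaps, since_last = [], 0
    for _ in range(presses):
        since_last += 1
        if press():
            gaps.append(since_last)
            since_last = 0
    return gaps

rng = random.Random(42)
fr_gaps = gaps_between_rewards(fixed_ratio(5), 1000)
vr_gaps = gaps_between_rewards(variable_ratio(5, rng), 1000)
print("fixed ratio gaps seen:", sorted(set(fr_gaps)))
print("variable ratio gaps: min", min(vr_gaps), "max", max(vr_gaps))
```

On the fixed schedule every gap is identical, so there is nothing left to learn after a few rewards. On the variable schedule the next press might always be the one that pays, which is the “just in case” the text describes.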

If you have been designing games at all in the past few years you ought to be familiar with this. Applying and combining the results of these studies have been proven to work. No one can deny the incredible feeling you get upon hearing the familiar “ting” of a rare ring dropping off an enemy in Diablo. It’s the combined reward of the long term chase for better stats with the instant gratification of a high pitched chime over the clank and groans of battle. It’s rare and semi-random.

You can’t argue the benefit of front-loading content onto the learning curve like in Rift (or any other MMO) either. Dishing out rewarding content more slowly in the late game not only maximizes its use, it’s fitting nicely to the documented results of the most compelling reward scheduling. Just add some compelling random combat encounters to keep it fresh. Reviews (for example, Gamespot’s Review) call this out as good design, because it’s more fun that way, right?

Since I’m being such a depressing reductionist, let me tell you I believe there is such a thing as “fun.” It’s a specific brain activity within us, electric and chemical. It lives in there, and you can probably graph it with powerful magnets, sales, focus groups, or the staggering 275 million daily active users playing Zynga’s games on Facebook (AppData). Even if you don’t think current Facebook games are fun (and I’ll suggest how that might work), someone out there does.

What is fun, anyway?

In my opinion, neuroscience is quickly extending behavioral theory as the most effective means of manipulating people (players). There are a few different theories of what’s happening in the brain to create the consistent results found in behaviorism (and FarmVille), but I’ll only share my favorite for sake of brevity.

If this isn’t the true mechanism of fun, I’d at least like to warn you: it will be discovered soon. An early paper on the topic entitled “Predictive Reward Signal of Dopamine Neurons” is the sort of thing that makes me giddy. This research describes in detail how the behavior of a particular type of neuron in the brain specializing in the neurotransmitter dopamine works as the “reward system” to drive learning and motivation. It’s a fairly simple theory called “incentive salience,” and the key is novelty.

All of our brains are similar. Just as the average person is born with the same sorts of cells in the fingernail cuticle on their ring finger, so, too, do we all share the same brain areas. They work to perform the same tasks in all of us (moods, facial recognition, Counter-Strike, etc.). They’ve specialized.

An important central structure, the ventral tegmental area (VTA), is made up of neurons that specialize in the release of the neurotransmitter dopamine. It stretches out into other brain areas, lining them and waiting for a cue to act. By releasing dopamine, this structure can intensify brain activity in those areas, acting as a sort of throttle. And what’s controlling the throttle? Reward.

(Fig. 1) The reward system.

These rewards are the same sorts of delicious rewards given in Skinner’s Behaviorist research, as well as other things we’re wired up to like. (Social status, pleasant noises, sex, explosions, epic loot, etc.) These things trigger the signals dopamine neurons are carefully monitoring, and each expects a precise level of expected reward.

When a surprising reward occurs (or, as they say in the literature, a “salient” one), a flood of dopamine is released. That flood is like adding fuel to a fire, and the brain activity is intensified along the dopaminergic pathways.

While that’s happening, new memories are being formed, too, encoding the current input from the senses as a stimulus. It’s the pattern of whatever the brain was sensing and thinking while that new reward was experienced.

From now on the link is made, and whenever this pattern (this stimulus) is present, the memory of the reward is activated. This happens even before the reward itself is consumed. It’s what behaviorists call conditioning.

I’ll use ice cream as an example of this process. It’s full of creamy sweetness (which qualifies as an excellent reward), but pretend you don’t know that. Imagine you’ve never before in your life so much as heard of ice cream. Then take a lick.

The sugar should trigger an automatic reward signal in your brain, and your dopamine system will detect it and light you up right afterwards (Trial 1, in the figure below).

The experience of the sweetness is intensified by the heightened activity, so the memory will be vivid. The sight and smell of ice cream alone will be enough to remind you of its taste, and how good it made you feel.

The reward of ice cream is now conditioned. This is the experience of “pleasure,” but I wouldn’t call it fun yet. There’s one last step in the process.

(Fig. 2) The dopamine release moving back in time over four trials until it reaches the furthest reliable signal of reward.

Because you’ve been conditioned, seeing or smelling ice cream (the stimulus) again will now activate a dopamine response even before you taste it (Trial 2). Not in the original area (those neurons closest to experiencing the taste have already begun to expect it, and aren’t as surprised). Instead, new areas that weren’t involved in the exact moment of the ice cream reward are being lit up.

Remember, this is now happening before you’ve actually gotten the ice cream. The dopamine driven brain fire is happening while you’re standing IN LINE for the ice cream. The memory and stimulus is being recorded progressively further out across time (Trial 3). It’s your brain encoding a prediction of the reward. Even though standing in line is not at all the same as eating ice cream, you’re doing it because you’re compelled by the memory. That’s what we call “fun.”

“But wait,” you say, “standing in line sucks!” (And you’re right.) It sucks for you and me because we’ve done it too many times, but try to imagine the first time you stood in line for ice cream. I’d bet you were giddy with expectation and could barely contain your excitement. That’s the dopamine system at work. It’s intensifying the experience by making the memory of the ice cream so vivid that you can almost taste it, while laying down a memory of walking up to the ice cream shop, looking over the flavor choices, and trying to decide which topping (chocolate fudge or whipped cream?).

All of these experiences and choices are new processes in your brain, and all of them are receiving a novel link to the reward of ice cream. That’s keeping the dopamine flowing, and making it all feel good. As long as a reward can be predicted by a new stimulus, the dopamine system will keep causing the excitation needed to record it, and the whole experience carries a compelling feeling and expectation of the reward. It is not the reward itself, but the fresh process of learning to get it and the motivational feeling of wanting the reward.

So that’s what I’ve come to think of as fun: the dopamine-enhanced experience of reward while a new predictive stimulus is being mapped out. A human software interface.

Although every brain is born different, some nearly immune and others very susceptible, the neurons and dopamine are always there. With a little deduction it’s a tool of strong game design. Keep the dopamine flowing by making sure you provide salient rewards and just enough operant complexity to keep it all from ever being mapped out, but not too much or else you shut them down.

It explains why you’re willing to keep clicking “reconnect” when the WoW server goes down, and it explains why you can rehash old mechanics so long as you’re selling them to an audience who hasn’t already learned them (i.e. children, new gamers.) It also establishes how splitting your rewards across many brain systems (social, aural, mechanical, visual) is such a compelling combination. It’s a bit surprising that Vegas hasn’t figured out how to turn gambling into more of a social game for people besides the high rollers.

(Fig.3) A momentary depression occurs when an expected reward is absent.

Once everything that can be reliably linked to ice cream has been wired up, the dopamine system goes quiet. The neurons involved have become acclimated; they no longer get excited when they sense the stimulus (Trial 4). They simply expect their reward of the ice cream in due time. With nothing new to learn, they no longer trigger excitation. Standing in line for ice cream now sucks.

Even though ice cream itself is still delicious, the line is simply work you do to get it. Things “just don’t feel the same anymore.” Even more than that, those dopamine neurons are still watching, and if the predicted reward doesn’t show up when they expect it, the whole system flat-lines. When they fall on their face, you have no motivation to continue, and quite possibly feel pissed. It’s a theory of boredom, as well as fun.
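The progression described above (surprise at the reward, then at the cue, then silence, then a dip when the reward is missing) is often formalized as a temporal-difference prediction error. The following is a minimal sketch of that reading, not something taken from the article: the function name `run_trial`, the four-step "line", the learning rate, and the reward size of 1.0 are all illustrative assumptions.

```python
def run_trial(values, reward, learning_rate=0.5):
    """Run one cue -> wait -> reward trial and return the prediction errors.

    values[t] is the predicted future reward t steps after the cue;
    values[-1] marks the end of the trial and stays at 0.
    """
    steps = len(values) - 1
    # Assumption: the cue arrives unannounced, so the prediction just before
    # it is 0 and the "surprise" at cue onset is simply values[0].
    errors = [values[0]]
    for t in range(steps):
        received = reward if t == steps - 1 else 0.0
        # Prediction error: what arrived plus what is now expected,
        # minus what was expected a moment ago.
        error = received + values[t + 1] - values[t]
        errors.append(error)
        values[t] += learning_rate * error
    return errors


values = [0.0] * 5                      # four steps of "standing in line"
first = run_trial(values, reward=1.0)   # first visit: surprise at the reward
for _ in range(200):                    # many repeat visits
    run_trial(values, reward=1.0)
trained = run_trial(values, reward=1.0)        # surprise has moved to the cue
omitted = run_trial(list(values), reward=0.0)  # expected reward never arrives
```

In this toy run, `first` has its only nonzero error at the final step (the reward), `trained` has its error at the first entry (the cue) and almost none at the reward, and `omitted` ends with a negative error, which corresponds to the momentary depression in Fig. 3.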

It hints at addiction too, but in doing so it resolves the difference between an “addictive” game and a drug. It has to do with the strength and means of the dopamine release. Almost all addictive drugs like methamphetamine affect dopamine, and can trigger its immediate release at tenfold normal levels. Given how this reward system operates, you can imagine how strong this conditioning is. Games only trigger the release of dopamine through normal sensory channels, and at a more healthy and sustainable rate.

By this theory, the largest dopamine rush accompanies the first few times you play. If you’ve ever felt a compulsion to rush home and play a game all day, you are likely getting a hint of what a strong addiction feels like. Afterwards, the combined effects of acclimation and inhibition curb further dopamine release.

Can a game lay down enough conditioning over time to begin to match the levels of reinforcement seen in drugs that immediately release dopamine? Perhaps, and we have strong profit motivation to see if this is true. It’s reasonable to assume some people can be trained to compulsively play games.

Mario Collects Coins

Every arcade cabinet collected a certain number of quarters in a month. At month’s end, the arcade owners counted these up, paid their bills, and sunk the rest into their futures. These were the people who decided which new arcade machines to buy.

You can imagine the effect this system had on game design, and you can think of it as a primitive form of analytics. The majority of analytics in current games are essentially the same, only one step away from counting profit. However, with the realities of digital distribution and real time metrics, this world is quickly changing.

As a refresher, analytics is the process of crunching data to inform decisions. It came to games via web developers, as the early Facebook games were not created by the traditional console studios. Web developers knew the value of analytics in a way the console world is only beginning to appreciate, and built it into their games.

Webpage “hits” and “bounce rate” translate fairly well into the world of Facebook games as “Daily Active Users” and “Monthly Active Users,” and the combo stat “DAU/MAU.” (It’s used to measure retention.)
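As a rough illustration of how the combo stat is computed, here is a minimal sketch. It assumes a log of `(user_id, date)` play events and a trailing 30-day window for the monthly count; the function name `dau_mau`, the window length, and the sample data are hypothetical, and real platforms may define the window differently.

```python
from datetime import date, timedelta


def dau_mau(events, day, window=30):
    """Return (daily users, monthly users, DAU/MAU) for the given day.

    events: iterable of (user_id, date) pairs, one per play session.
    window: number of days, ending on `day`, counted as the "month".
    """
    events = list(events)
    start = day - timedelta(days=window - 1)
    daily = {user for user, played in events if played == day}
    monthly = {user for user, played in events if start <= played <= day}
    ratio = len(daily) / len(monthly) if monthly else 0.0
    return len(daily), len(monthly), ratio


sample_events = [
    ("ann", date(2011, 3, 1)),
    ("bob", date(2011, 3, 10)),
    ("ann", date(2011, 3, 30)),
    ("cy", date(2011, 3, 30)),
    ("dee", date(2011, 2, 1)),   # outside the 30-day window
]
dau, mau, stickiness = dau_mau(sample_events, date(2011, 3, 30))
```

A ratio near 1 would mean almost everyone who played this month also played today; a ratio near 0 would mean most of the month's players did not come back.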

Beyond helping producers sound important, they provide a clear and inarguable metric of success: points on the graph! Through simple metrics like these you can see in real time how quickly your game is gaining users, keeping them, and turning a profit.

However, analytics used at the level of DAU are fundamentally limited. They show only the present, and say little or nothing as to why and how the game is creating these numbers. It’s a combination of design insight, deeper analytics, and experiment that takes it to the next level.

We’re putting games in the cloud now. Steam was one of the early digital distribution channels, and got into analytics right away with its hardware surveys. Facebook has more advanced demographics and built in analytic functionality (not to mention its pioneering work in redesigning our concept of privacy).

Android and iOS devices all deliver through the cloud. Google has numerous online APIs for crunching analytics, and I’m just waiting for the day websites like OkCupid start hosting apps. (It’s an analytics goldmine!) The current and next generation consoles all have digital channels, and I don’t see any indication of this letting up.

The more we continue to shift (or float) in that direction, the easier the analytics will become to access, along with the social graph that accompanies it all. The relationships between all the players, their in-game interactions, and the histories of players over time and across games can now be tied to a unique profile for each user. This massive well of information is where web analytics and game design begin to synthesize into something new.

Because games offer a much deeper level of user activity than websites, you can use analytics to probe much deeper into player behavior, too. Will Wright was a pioneer in using analytics for game design long before we’d dreamed of Facebook. In a fantastic 2001 interview with Game Studies he spoke of how data revealed two main types of play in The Sims: House Building vs. Relationship Building. The game was then tailored to further support these two player goals.

He went on to speak of how analytics might one day be used to customize games on a per-user basis. It’s something now possible, and in real time. Rather than just gathering information on player attendance, every aspect of player behavior can now be collected as analytical data. Questions of what, when, who, and why can be asked, and a whole slew of predictive information can now be developed.

Dividing players into different demographic groups allows developers to tailor games (both in content and mechanics) to particular types of players. If charging $10 for the rare Purple Penguin in Zoo Collector will not make up for your monthly budget deficit, then introduce the $10 Violet Bugatti in the nearly identical Car Collector (catering to a segment of the Middle Eastern audience with disposable income).

Not all demographics are of equal worth. By mastering specific content for a number of smaller demographics you can expand into niches beyond the mainstream, or divide the mainstream up into more manageable developmental targets. Not all players are of equal worth, either.

It’s very valuable to identify your early adopter players and the opinion-generating players who act as social hubs. These players are the agents of virality, and your means to success. Identify them and let them know how special they are with rewards and privilege. Tell them early about your next game, and encourage them to move on to it. The rest will follow.

Don’t forget this can all be automated, too. Games on a server can be patched twice a day, if needed, and there’s no reason every user will see the same version of your game. Not only can you give your most popular users the “Special Version,” you can also release “Design Version A” to fifty percent of your users and “Design Version B” to the rest.

Watch the analytics to see which version does better, then shift over the entire game to that design. Wash, rinse, repeat. This is known as “A/B testing,” and you can use it to explore almost every aspect of design. Just be careful not to test various costs on the same digital content, as people might compare notes on the forums and get suspicious.
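To make the loop above concrete, here is a minimal sketch of the two pieces it needs: a stable way to split users between the two designs, and a check that an observed difference is larger than chance. The hash-based split and the two-proportion z-test are common choices rather than anything the article specifies, and the function names, experiment label, and retention counts are hypothetical.

```python
import hashlib
import math


def assign_variant(user_id: str, experiment: str) -> str:
    """Deterministically place a user in design "A" or "B" (roughly 50/50)."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).digest()
    return "A" if digest[0] % 2 == 0 else "B"


def two_proportion_z(success_a, n_a, success_b, n_b):
    """Two-sided z-test for a difference between two retention rates.

    Assumes both groups are non-empty and the pooled rate is not 0 or 1.
    """
    rate_a = success_a / n_a
    rate_b = success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


variant = assign_variant("player-42", "shop-layout")
# Hypothetical counts: users still playing after a week, out of 1000 each.
z_score, p_value = two_proportion_z(400, 1000, 460, 1000)
```

Hashing the user ID together with the experiment name keeps each player on the same design across sessions without storing the assignment, and lets separate experiments split the audience independently.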

I see no reason to be so blatant, though. Rather than being reactive — staring at your feet while trying to get ahead — find success through forward-looking design.

As outlined earlier, we now have a strong theoretical framework for interfacing with players and developing a compulsive play response through behavioral theory and neurology. A/B testing, demographics, social graphs, and an endless stream of willing players is the perfect laboratory setup for perfecting such designs.

I would recommend experimenting on subsets of users to see how well they condition to certain mechanics such that the timing and distribution of rewards can be optimized. Hire or train statisticians. Users should be tracked according to their sensitivity, and targeted to maintain engagement.

Rather than rehashing the same designs over and over, predict the effectiveness of certain designs on specific market segments and transition them from an old design to a new, more complex one the moment they are ready. This can be verified as increased retention via the DAU/MAU ratio. Meanwhile, the old design can be reapplied to a fresher audience segment.

I’d recommend that if I was a tool, that is, and unfortunately it’s already happening.

Who wants to be a thief?

At some point we’re getting carried away. To review, we have a system funded by the success of a theory for conditioning behavior with the neurological science and imaging to refine it, and a massive social laboratory performing millions of experiments daily to get it right. That’s quite a beast to be reckoned with. It most effectively targets players who’ve never seen a game before, children growing up online, unemployed people, and people wasting time at work. What do the games provide in return? “Fun”?

To be sure, this for-profit laboratory existed long before video game analytics. We’ve been manipulating each other since the beginning of time, and we are built to accept the thoughts of others (it’s called communication). Advertising long precedes gaming in refining these methods, and before that propaganda and rhetoric did nearly as well. Even writing these words is, in a way, manipulating you into thinking them.

Internet gaming, neurobiology, and analytics just provide great new ways to control behavior and generate profit. It’s hardly limited to games, either.

Jesse Schell projected an alarming version of the future in his “Design Outside the Box” talk at DICE, where all commerce was motivated with a point-tracking “gamification” layer, but that’s now well underway too.

It’s not the Buy-Ten-Get-One-Free coffee cards that worry me, but the “Rewards Program” available for every single credit card on the market. Curious name, “Rewards Program.” It exists because it works.

Is that what games are now becoming, a sort of credit scam? Without narrative, without social context, without political stance, and without an opportunity for creative expression, are we just dividing our players into little boxes to be farmed?

Are we, as designers, just clicking the same square tiles over and over waiting for the coins to pop out, so we can click them too? Are you bored, or do you still have a few more years of empty clicking in you? Games that provide more value are worth more; people just need to know.

Educate consumers about the system, and show them why your games are worth paying for. Get them to shun other games. Turn games exploiting simple reward mechanics into the McDonald’s food of digital entertainment, while standing up for games that deliver something worthwhile. Create games with story and create games with art.

Mechanics and a theoretical understanding of fun are wonderful tools for expressing a message in a way more powerful than print, music, and film. That’s ethos, and you can generate profit with it.

The future of the medium is growing this alternative. Though developers often scoff at the idea of “games as art,” it is unquestionably coming up more frequently in discussions. The Smithsonian plans an exhibition of games in 2012, and the National Endowment for the Arts is now funding games that have artistic merit.

What does it mean? I rarely encounter a developer with the humanities background to engage the question, so I’ll take a chance at translating it to something engineering-oriented: art creates culture. It is not the communication and manipulation of minds on the individual level (as described in this article), but a formation of shared opinions and ethics across masses of people.

Often, art creates whole subcultures of devotion, and in doing so it engages people at a level of behavioral conditioning far more advanced and comprehensive than the simple designs of slot machines and Facebook games. It’s already begun with the celebration of indie game developers, the microcosm of chiptunes, and people’s unwaveringly fond devotion to Final Fantasy VI and VII characters. The virtues of Link are present in a generation, whereas the ethics of CityVille will never be.

Behavioral manipulation can be used positively. A program at Yale University called Play 2 Prevent is exploring the use of games as a tool for increasing awareness of HIV in teen sexual activity. Perhaps someone will fund games for the training and rehabilitation of prisoners, as they ought to be more educational and passively reformative than cable TV. With each passing day, methods such as these are becoming accessible to all developers. Articles by researchers (like this recent one by Ben Lewis-Evans) are now routinely appearing on Gamasutra.

Analytics are your friend, too. You can do experiments and optimize your games in the same way as mega-publishers; just do it in a way that gives your users something that actually benefits their life. Consumers love analytics, so long as it’s for their benefit. Just put the data together in a friendly package and give it back.

Share this data with other developers (maybe we need a PlayerAnalytics.org?), and collectively outcompete the companies who hoard data. Design creatively, and build mechanics off social graph data to deepen interactions with real people.

Numerous services are already doing this (from Xbox Leaderboards to the OkTrends blog) and people consciously make it viral. Push your existing concepts forward with analytics, too. If data reveals a peak number of players at five pm, schedule in-game events for that time. Systems that adjust the game in real time, like the AI Director from Left 4 Dead, can be driven with analytic data too. Embrace the concept of the cloud and use this data to keep your game from becoming static.
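Finding that peak can be as simple as counting session starts per hour. The sketch below assumes you already have a list of session start times in the players' local time; the function name `peak_hour` and the sample timestamps are hypothetical.

```python
from collections import Counter
from datetime import datetime


def peak_hour(session_starts):
    """Return the hour of day (0-23) with the most session starts.

    Ties go to the earlier hour. Raises ValueError on an empty list.
    """
    counts = Counter(start.hour for start in session_starts)
    hour, _ = max(counts.items(), key=lambda item: (item[1], -item[0]))
    return hour


sample_sessions = [
    datetime(2011, 3, 30, 9, 0),
    datetime(2011, 3, 30, 17, 5),
    datetime(2011, 3, 30, 17, 40),
    datetime(2011, 3, 31, 17, 15),
]
event_hour = peak_hour(sample_sessions)
```

An in-game event scheduler could then read `event_hour` instead of a hard-coded time, and be rerun as the audience changes.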

Love before profit.

Play

The zen and flow of play can be beautiful and life-expanding, or it can drive people into the rut of a junkie for the profit of someone they will never know. As I’ve learned these things, I can’t go a day without seeing them in my life. I feel it when I stop playing games because I just can’t finish the unskippable tutorials. I get angry when I read poorly written textbooks on topics I want to learn, but can’t, as my will is needlessly sapped with boredom.

My heart goes out to the pain of kids trying to finish the dry repetition of their math work, and it goes black when I see the finely crafted advertisements for unhealthy things tagged onto the finer moments in art. I think of the millions in talent spent to make it happen. With all the zombies pulling slots in Vegas, all the hipsters swiping down on their mobiles in hopes of a new Facebook update, and all the worn paths paced by desperate animals in the zoo, I don’t want to make another game like that. But I probably will.

Design your ethics into how games will interact with players. Sometimes it’s okay to make something fun and compelling. Other times you’ll be forced to make concessions. I’ve done some pretty shameful things in development. I’ve compromised on principles of violence against women, I’ve modeled munitions for the army, and I’ve studied very hard at how to make people keep doing things compulsively when they otherwise wouldn’t.

Don’t give up. If you got into game design because you love games, then fight to show it (and you will have to fight). Things are changing very quickly, and the purpose of games is created through you.

Article 2, Nicholas Lovell: Free-to-play gamers are tired of Skinner Boxes, and that’s a good thing

by Matthew Diener

Speak to free-to-play detractors, and many will argue that the model reduces games to mere Skinner Boxes, with titles designed to trick players into parting with their cash after being treated to enticing rewards.

For those unfamiliar with Skinner Boxes, they work by rewarding subjects (in B.F. Skinner’s time, lab rats) with food for pressing the correct lever when stimulated by a bright light or loud noise.

Replace ‘food’ with ‘gems’, and ‘lever’ with ‘touchscreen’ and it’s easy to see why the model can be applied to many high profile free-to-play games. However, just because the model fits – and, for a time at least, offers success – doesn’t mean it’s the only option.

Indeed, for GAMESbrief founder and author of The Curve: From Freeloaders into Superfans Nicholas Lovell, many players are eager for developers to move on.

We caught up with Lovell at GDC Next in Los Angeles for his take on free-to-play’s next steps.

Pocket Gamer: Let’s start by talking behaviourism. What’s the traditional line of thought that convinces developers to view games as Skinner Boxes?

Nicholas Lovell: Firstly, a disclaimer: I am not a psychologist, and have a layman’s understanding of Skinner Boxes.

Skinner boxes – more accurately called Operant Conditioning Chambers – originated as an experiment by psychologist B.F. Skinner to investigate how test subjects, typically rats and pigeons, could be encouraged to display certain behaviours if they are given suitable rewards.

A rat might press a lever and get a reward, or have to press that same lever ten times to get a reward, or press it once within a 10-second period, and so on.

Skinner found that introducing randomness into the process, both in terms of the size of the reward offered and the activities that the test subject was required to complete, led the creature to repeat the action over and over again, to the extent of injuring itself.
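The difference between a fixed and a randomized schedule is easy to see in a small simulation. This is a minimal sketch under stated assumptions: only the timing of the reward is randomized (not its size), both schedules pay out once per ten presses on average, and the function names and the seed are hypothetical.

```python
import random


def fixed_ratio(n):
    """Schedule that rewards exactly every n-th press."""
    count = 0

    def press():
        nonlocal count
        count += 1
        if count == n:
            count = 0
            return True
        return False

    return press


def variable_ratio(n, rng):
    """Schedule that rewards each press with probability 1/n."""
    def press():
        return rng.random() < 1.0 / n

    return press


def gaps_between_rewards(press, presses):
    """Press the lever `presses` times; list how many presses each reward took."""
    gaps, since_last = [], 0
    for _ in range(presses):
        since_last += 1
        if press():
            gaps.append(since_last)
            since_last = 0
    return gaps


fixed_gaps = gaps_between_rewards(fixed_ratio(10), 1000)
variable_gaps = gaps_between_rewards(variable_ratio(10, random.Random(0)), 1000)
```

Every entry in `fixed_gaps` is 10, so the subject can learn exactly when the reward arrives. The entries in `variable_gaps` vary, so the next press could always be the one that pays out.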

One conclusion is that it is possible to use a variable reward structure to encourage test animals – and by extension, humans – to act against their own best interests.

Early Facebook games, particularly those created by Zynga, had the look of Skinner Boxes to some people: you turned up, you did a thing, you got a reward for that thing, and you repeated it.

For some players, this was satisfying and enjoyable; to others, it lacked creativity and agency – partly, I think, because they expected a particular type of creativity and agency in their gaming and were frustrated that FarmVille didn’t match their expectations.

There are clearly elements of psychological manipulation in Ville type games, but then again, those same manipulations exist in nearly all game designs.

In your talk at GDC Next, you noted that Skinner Boxes eventually stop working because subjects learn there isn’t a different possible outcome. Is it fair to say that the success of many ‘sticky’ games lies in players not coming to this realisation?

I’m not sure that is true. Sure, sometimes a repetitive thing can get, well, repetitive. I enjoyed FarmVille and CityVille and progressed far in them, but when I started CastleVille, it felt very familiar.

In my mind, I projected forward the next three months of grinding and upgrading and mission-completing that I had enjoyed in the previous two games.

That projection took all of five seconds and I concluded I’d already seen all that the game had to offer. I didn’t bother carrying on with CastleVille, although of course I might have been wrong – maybe CastleVille innovated in ways that I didn’t anticipate.

You can level the same accusation at the triple-A approach though, although it does its repetition with more assets, spectacle and unhealthy budget. I finished Uncharted 2, but found it hard to muster the enthusiasm for Uncharted 3.

I expected it to be basically the same action, with a story tacked on top. If it is just about the story, not the gameplay too, then I would rather read a book.

For me, the important bit about Skinner Boxes coming to the end is that you can introduce somebody – in the case of FarmVille – from a population that’s never been exposed to this type of gameplay before, and the “Do something, get reward” thing feels new and rewarding.

Then, when the population is used to this type of idea, just repeating it in the next reskinned game doesn’t feel so new and rewarding because the players have started to understand this system.

Skinner Boxes are all about learning stuff, and when you feel like you’ve already learned that thing the reward is intrinsically less rewarding. Honestly, I’m delighted that the audience is saying “we’re bored, give us something new”. That’s a good thing.

Would you say that ‘operant conditioning’ in games – e.g. the basic slot machine model – is sustainable?

There will always be operant conditioning driven games that will work. There will always be a population who likes basic slot machine games, for example. It has a relatively low cognitive load and is an escapist experience.

Ultimately, if it is going to be sustainable, developers need to look at increasing the game experience in three different areas.

Firstly, you can up the spectacle – and we’re seeing that happening. It doesn’t matter so much if you’re not learning something new, if you are seeing something spectacular. That’s why we’re seeing budgets in free-to-play games going up.

Secondly, you can increase the reward level. If players are no longer responding to this behavioural operant conditioning stuff, developers can think “let’s ratchet up the rewards.” Real money gaming is a way of doing that.

Another is the Japanese phenomenon of complete gacha. That’s where you ratchet up the rewards but in order to gain the rewards you have to do a bunch of tasks to gain an item.

You repeat this cycle three more times to get four quarters of a lottery ticket just to submit it. And maybe you win, maybe you don’t, and if you don’t, you start the cycle again.

The nested randomness is very compelling from an operant conditioning standpoint, so much so that the Japanese government has essentially banned it.
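A little arithmetic shows how quickly two layers of chance stack up. The sketch below is an illustration under assumptions the interview does not state: each cycle yields one of the four ticket quarters uniformly at random (so duplicates are possible), the completed ticket is used up when submitted, and the ticket wins with a fixed probability. The function names and the 1-in-10 win chance are hypothetical.

```python
from fractions import Fraction


def expected_cycles_for_full_set(pieces):
    """Expected cycles to hold all `pieces` distinct parts.

    This is the classic coupon-collector sum: pieces/1 + pieces/2 + ... + 1.
    """
    return sum(Fraction(pieces, k) for k in range(1, pieces + 1))


def expected_cycles_to_win(pieces, win_probability):
    """Expected cycles until a completed ticket finally wins.

    On average 1 / win_probability tickets are needed, and each ticket
    has to be assembled from scratch.
    """
    return expected_cycles_for_full_set(pieces) / win_probability


per_ticket = expected_cycles_for_full_set(4)             # 25/3, about 8.3
until_win = expected_cycles_to_win(4, Fraction(1, 10))   # 250/3, about 83.3
```

Under these assumptions a player needs about 8 cycles per ticket instead of 4, and about 83 cycles for a single win, while every individual cycle still feels like it might be the one that completes the set.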

Finally, your third option is to do something new and creative. If you are, by background, an analytics-led suit, it’s harder to imagine how to do the new and creative than the other two options above, so you’ll up the spectacle or up the reward.

Japan recently outlawed the “kompu gacha” practice, which is an extreme example of operant conditioning applied to monetisation. The apparent reason behind the ban was its overwhelming success in parting players from their money. Isn’t this a point in favour of operant conditioning for games?

So, okay, you might ask ‘why did they bother outlawing it if in my argument earlier it doesn’t work?’

Well, it does work, but it works either when it’s extreme or it works on a vulnerable section of society. What, I think, the Japanese found was that this double-tier of randomness became so compelling to a subset that it was time for the state to step in and protect some people from themselves.

I’m not a massive fan of state intervention, but there are some cases where people being protected from themselves is important and, by the same token, I don’t like the idea of targeting people who are vulnerable. That’s morally wrong, as well as being a bad long-term strategy.

So, I’m not arguing that operant conditioning [in games] is totally useless, or that it doesn’t work. I’m arguing that at the mass market of 100 million players, simply repeating the same stuff again and again becomes less effective at engaging players because we’re not learning or experiencing anything new.

What alternatives does a developer have to the Skinner Box approach if they want to maximise the life-time value of a heavy spender?

I don’t think Skinner Boxes drive heavy spenders. My view of heavy spenders, or ‘superfans’, is that you’ve got to allow those people who love what you do to spend lots of money on things that they really value.

So, what are the alternatives to operant conditioning? Make people love your game.

One proxy for that is to make people Skinner Box-y addicted to your game, but in actuality, you will have a more successful, satisfying game if people are coming back to it because they want to.

There is recent EEDAR research which says people don’t regret spending money if they feel that they’ve got good value. If they’re really enjoying the game, and they want to give you money, give them things to spend on which have emotional resonance for them in the context of the game.

In the context of virtual goods, it’s emotions that drive spending and a player’s sense of value. Players value being a part of something, or standing apart from everyone else. They value self-expression, they value friends and being part of a team, they value their time more than their money, they value any number of different things.

The difference between this approach and a pure Skinner Box, which at its most cynical aims to trick the brain into valuing something it doesn’t, is that it’s about building a social context in which people want to spend money.

So, basically, instead of tricking the brain into valuing something, developers should work on getting players to value something?

Yes, exactly.

And that, to a large extent, means providing a social context. Not necessarily a synchronous multiplayer environment like Team Fortress; in Candy Crush Saga, say, there’s a map where you can see the progress of your friends, and you might want to progress faster or catch up to be part of the crowd.

You may enjoy sending lives to each other, which means you understand the value of lives and are happy to spend on them, and so on.

Article 3, Dopamine and games – Liking, learning, or wanting to play?

by Ben Lewis-Evans

A few weeks ago when listening to a gaming podcast, I heard the hosts describe a particular game as “giving them their shots of dopamine” in terms of the pleasure they had experienced with the game, and their desire to keep on playing (dopamine being a neurotransmitter i.e. a chemical used in the brain). The comment was made off-hand but reflects a common view – that having dopamine released is related to pleasure and reward, and therefore is relevant to gameplay. But is this view correct?

Well, if we go back around 30+ years, the view that dopamine is the chemical related to pleasure and reward was being presented by researchers. One classic experiment that led to this view of dopamine being related to pleasure comes from even further back and involved rats that had electrodes in their brain stimulating brain areas that (it turned out later) can be responsible for the production of dopamine [1]. These rats could press a lever to get this part of the brain activated. In response to this self-administered brain stimulation the rats would push this lever at the expense of basically anything else. For example, they would rather press the lever than eat, be social, sleep, and so on. This, and other later evidence, led to this area of the brain being labelled as the ‘pleasure centre’ and seemed pretty convincing.

So, the conclusion at the time was that dopamine was the most important chemical that made us enjoy rewards and at the same time could motivate us to seek them out [2]. As such, the idea that dopamine is a reward and pleasure chemical spread and is now mentioned offhand by podcasters (a very scientific metric of cultural spread, I know).

Unfortunately (well, actually in the long run, fortunately) the brain is not that simple. Science has moved on and things have changed. Indeed, a leading researcher in the area has, jokingly, suggested that the best answer to the question “what does dopamine do?” is “confuse neuroscientists” [3].

That answer, while amusing (I laughed at least), doesn’t really help those working in games to understand what their games may or may not be doing to the brains of those playing them. As such, the aim of this blog is to explain, as clearly as possible, what science currently says about the role of dopamine and rewards. More than that though, I will also try to provide some comment on what all this neurotransmitter stuff actually means for people who make games.

Please note, in the following blog I will be limiting myself to talking about what is known about the effect of dopamine (the neurotransmitter) itself and not discussing what is known about the brain areas that are related to its production or suppression. I have also limited myself to only discussing the most relevant and interesting (in my opinion) examples and experiments as this is not the place for a full academic review of the subject (If that is what you want, check out the academic reviews listed in the reference section at the end of this blog [4-8]).

Liking, learning, or wanting rewards in gameplay

One useful way to approach the question of dopamine, and what it does, is to break down people’s reaction to rewards in terms of liking, learning, or wanting. That is to say, when it comes to how you react to a reward (such as achieving something in a game), whether you like the reward (i.e. is it fun), whether you have learned the appropriate way to get the reward (i.e. can you actually play the game), and whether you want to work to get the reward (i.e. is it motivating to play) can be completely different, and independent, things.

Liking to play

It seems to make sense that if you do something for a reward then you must like that reward. This is indeed part of the reasoning that led early researchers looking at dopamine to assume that dopamine was to do with pleasure (‘liking’) [2]. Basically, they thought, why else would the rats have pushed that lever for so long if they weren’t enjoying themselves?

The idea that the self-stimulating rats were enjoying themselves may have been an understandable assumption. However, it turns out that while rewards are often liked they do not have to be liked in order to be effective in changing behaviour and, furthermore, that dopamine itself does not appear to be directly involved in ‘liking’ and pleasure.

Specifically, as research has moved on, it has become clear that animals (usually mice and rats) that have had their ability to produce dopamine stopped or restricted (either via drugs, surgery, or genetic alteration) can still be seen to demonstrate that they ‘enjoy’ and ‘like’ things. One demonstration of this is that mice that have been genetically altered to not produce dopamine (a type of mutant “knock out” mouse, or an X-Mouse if you prefer) still show a preference for sugary water and other foods [9]. That is, these mice seem to show signs of enjoying the taste of sugary water and, when given the choice, will pick it over plain, non-sugary water. Furthermore, you can also genetically engineer mutant mice to have excess dopamine, and these animals do not show any additional/enhanced signs of ‘liking’ different foods despite all the dopamine floating around in their brains [10].

So, that is mice but what about humans? Well, researchers aren’t really allowed to make mutant humans and it is not easy to get permission to give drugs or do brain surgery on people. But, we can look at patients with Parkinson’s disease, which is characterised by problems with dopamine production. These patients, much like the rats and mice, also do not appear to show any decreases in the liking of rewards, such as sweet tastes [11].

Given the above findings, and many others (e.g. see the reviews in [4-8]), it became hard for researchers to continue with the idea that dopamine is a pleasure or ‘liking’ chemical. In fact, by 1990, Roy Wise, a leading researcher and initial proponent of the idea that dopamine is related to pleasure, stated:

“I no longer believe that the amount of pleasure felt is proportional to the amount of dopamine floating around in the brain” ([12] – p. 35).

Indeed, rather than dopamine, it seems that other neurotransmitters, such as opioids (Endorphins! Remember when they were trendy to talk about?) and cannabinoids, are actually more often involved in ‘liking’ a reward [4, 10, 13-15]. It should be noted at this point, though, that opioid release in the brain can indirectly lead to reactions in the dopamine system, which again could explain the early confusion over the role of dopamine. However, as mentioned above, mice that are genetically unable to produce dopamine still do like things!

So, that pleasurable feeling you get when playing a game? Dopamine is probably not the cause. In the same fashion, if someone tells you their game is designed to maximise dopamine delivery then this does not necessarily mean their game will be fun or enjoyable to play.

Learning to play

If dopamine isn’t related to pleasure, then what does it do? Well, another hypothesis, which became popular in the 1990s, is that dopamine helps animals learn how and where to get rewards (a very useful thing to remember in games but also in life in general). This hypothesis arose when scientists started noticing that dopamine activity appeared to increase before a reward was delivered and therefore could be helping animals predict the arrival of a future reward [6-8]. That is to say, dopamine was produced when an animal saw a stimulus (such as a light coming on) that had previously been linked to getting the reward. The dopamine release was therefore predictive of the reward coming and not a reaction to the reward itself (as it would be if it were just about ‘liking’ that reward or getting pleasure from it). There also seems to be an increase in the activity of the dopamine system when a reward is unpredictable (like a random loot drop in a game). The finding that dopamine activity increased most when an animal was expecting or learning about an unpredictable reward makes sense if dopamine is about learning. After all, if a reward appears to be unpredictable, then you should pay more attention and try to learn what signals the reward so you can work out how to better obtain it in the future [16].
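To make the "prediction" idea concrete, here is a minimal sketch of a standard textbook learning rule (a Rescorla-Wagner style update). It is not taken from the studies cited here, and it is not a model of real dopamine neurons. It assumes a single cue whose learned value is nudged toward each observed reward, and it treats the size of the surprise (reward minus prediction) as a stand-in for the signal being discussed. The function name, learning rate, and trial counts are all illustrative assumptions.

```python
import random

def run_trials(reward_probability, n_trials=200, learning_rate=0.1, seed=0):
    """Learn the value of one cue; return (final value, mean late surprise).

    Hypothetical helper for illustration only: the "surprise" is the
    absolute prediction error |reward - value| on each trial.
    """
    rng = random.Random(seed)  # seeded so the run is repeatable
    value = 0.0                # current prediction attached to the cue
    surprises = []
    for _ in range(n_trials):
        reward = 1.0 if rng.random() < reward_probability else 0.0
        error = reward - value          # prediction error for this trial
        value += learning_rate * error  # move the prediction toward the outcome
        surprises.append(abs(error))
    late = surprises[-50:]              # look only at well-trained behaviour
    return value, sum(late) / len(late)

# A fully predictable reward: the surprise fades as the cue is learned.
certain_value, certain_surprise = run_trials(reward_probability=1.0)

# An unpredictable reward (paid half the time): the surprise never fades.
random_value, random_surprise = run_trials(reward_probability=0.5)

print(f"predictable:   value={certain_value:.3f}, late surprise={certain_surprise:.3f}")
print(f"unpredictable: value={random_value:.3f}, late surprise={random_surprise:.3f}")
```

In this toy setup the predictable reward ends up fully anticipated by the cue, while the coin-flip reward keeps producing large errors however long training runs, which is one simple way to picture why an unpredictable reward might keep such a system busy.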

Again, this evidence for dopamine’s role in learning looked pretty good [7-8]. However, once again mutant mice have shaken up this idea. In a quite clever study in this area, scientists at the University of Washington showed that mice that cannot produce dopamine not only still ‘like’ rewards but are also capable of learning where a reward is [17]. Specifically, these mutant knockout mice could still learn that a reward (food) was in the left-hand side of a T-shaped maze, although they did so only after being given caffeine. The caffeine is unrelated to dopamine production but was needed because, without it, mice that cannot produce dopamine don’t do much of anything. As you can see for yourself in this video of a normal and a dopamine-deficient mouse, the mutant mouse tends to just sit there. In fact, these mutant mice do so little that they will die from not eating and drinking enough unless given regular shots of a drug that effectively restores their dopamine function for a day or so [3].

In addition to the above experiment, it also appears that mice that have more dopamine than normal do not demonstrate any advantages when it comes to learning [3]. However, as mentioned, the fact that dopamine-deficient mutant mice will essentially starve to death means that dopamine must do something. But if dopamine isn’t for pleasure from rewards, and isn’t for learning about rewards (although this point is still debated), then what does dopamine do?

Wanting (desiring, needing) to play

As far as the science currently stands (and remember that science does, and should, change as new evidence is found), dopamine seems to be most clearly related to wanting a reward [4-6, 15, 17]. This is not ‘wanting’ as the word is commonly used, as a subjective feeling or cognitive statement like “Oh, I want to finish Saints Row IV tonight”, but rather a drive, a desire, or a motivation to get a reward. So, this is not about a feeling of ‘liking’ and pleasure; instead, what we are talking about is a feeling of a need or drive to do something. Subjectively this may be like when you just have to take one more turn in Civilization (or start playing and then 5 hours later realise you are still going) or when you just have to get a few more loot drops in Diablo before you stop for the night. In the literature, when discussing the results of experiments on mice, some researchers suggest that dopamine creates a ‘magnetic’ attraction or compulsion towards obtaining a reward [3, 6]. It could even be argued that the evidence for dopamine being involved in ‘learning’ is in fact just a sign of ‘wanting’ being directed towards an uncertain reward, which then motivates learning as a side effect (i.e. if I want something, I am likely to try to learn how to get it).

Again, here, we can look at mutant mice to confirm the role of dopamine in ‘wanting’. In mice that cannot produce dopamine, the motivation to move towards and work for rewards (which, remember, they do like and have learnt how to get) is deficient [3, 17]. This means that these mice do like sugary water, and they have learnt that the sugary water comes from the drinking tube on the right; however, they just aren’t motivated to walk over there and drink it [3]. Conversely, mutant mice that have more dopamine than is normal have been shown to be more motivated to gain rewards, both in terms of how fast they approach rewards and how much effort they will expend to get the reward [3, 10]. Also, remember those rats with electrodes in their brains working away at the expense of everything else just to get more stimulation? Well, that can also be explained in terms of ‘wanting’ the stimulation rather than ‘liking’ it [1, 2]. It is kind of like how someone with OCD will wash their hands over and over and over again, even though they often get no pleasure from this act (and in fact it can be quite distressing).

Looking at humans, if we examine patients with Parkinson’s disease then there are also studies that show that some of these patients demonstrate increased ‘reward wanting’ and have compulsion problems when given a drug that enhances dopamine production. For example, such patients have been reported to go on obsessive shopping sprees and demonstrate other ‘manic’ type behaviour [18].

Furthermore, if we go back and look at reports of people who, like the previously mentioned rats [1], had direct (self) electrical stimulation of the so-called ‘pleasure centres’ in their brain (usually for questionable medical reasons), then we see subjective reports of increased sexual desire or motivation to perform various activities (or just to press the button to self-stimulate, which they would do thousands of times). However, we do not actually see clear reports from these people of increased pleasure, sexual or otherwise (these accounts are mostly from the 1970s and 1980s, when this kind of thing was going on, and are summarised in [2] if you are interested). In one highly ethically questionable example, the researchers actually hired a female prostitute at the request (although one could easily question whether this was a true, ethically acceptable request) of an electrically self-stimulating man who was being ‘treated’, in part, for homosexuality, along with depression, drug abuse, and epilepsy [19]. So, in these human accounts we see suggestions of what looks like a wanting response to self-stimulation of dopamine-related brain areas but not necessarily a liking response (i.e. the subjects expressed increased desire but not necessarily increased pleasure). It should be pointed out, though, that if you were depressed and then suddenly started feeling motivated to do things again, this may, as a side effect, improve your mood [2].

The upshot of all of the research mentioned above is that it appears that dopamine is not directly about pleasure (or learning) but rather it is about motivation or, if you want to be more sinister, compulsion. So, when those podcasters I was listening to said that a game was compulsive because it was giving them their dopamine shot, they may have been right. However, dopamine was not directly responsible for also making the game they were talking about fun (please note, I am not so serious that I expect videogame podcasters to be exact about this kind of thing, rather I am just using them as a convenient example).

What does this mean for games?

It is very popular at the moment to attach the term ‘neuro’ to almost anything. In academia this has led to a neuroskeptic movement (justified, in my opinion) that is calling for, well, more evidence. However, there is no doubt that this ‘neurofication’ is popular with people. In fact, there is even research [20] showing that, at the moment, people seem to have a bias towards believing that data presented to them in a neuroscience-like fashion (i.e. via an image of a brain scan) is more scientifically valid than the same data presented in a more mundane fashion (i.e. via a bar graph).

So, what does knowing all this neuroscience mean for those who are making games? Well, from a strictly pragmatic and applied perspective, it could be argued that it means very little for everyday game design. The neuroscience I have presented here is mostly pure, not applied, science and comes from the perspective that we already know that certain rewards and ways of delivering rewards are particularly motivating and pleasurable (and may or may not have anything to do with dopamine to different extents). For example:

- Rewards that are unpredictable (loot drops) are generally more motivating than rewards that are predictable (100 xp per monster) [21-23].

- Rewards should be meaningful. For example, food is not particularly motivating for most people when they are already full, and in a relatively visually sparse setting, new and unusual stimuli will attract attention more readily [16].

- People tend to have a preference for immediate rewards and feedback and are not so motivated by delayed rewards and feedback. This preference for immediate gratification is strongest when young, but persists throughout life [24-26].

- Learning to get and want a certain reward is enhanced by immediate feedback about what behavioural response produced that reward. Uncertainty about what behaviour produced the reward will often lead to trial-and-error type exploration, which will be more likely to continue if further rewards arrive [23, 27, 28].

- If people perceive they are progressing towards a reward, even if that progress is artificial or illusory, they are more likely to be motivated to obtain the reward (just one more turn…) [29].

- Similarly, people tend to report that they will work harder to keep what they have rather than to gain something they don’t yet possess [30].

- People have somewhat of a bias towards large numbers. They will therefore, to some extent, prefer and be more motivated by a system where they earn 100 xp per monster and need 1000 xp to level up over a system where they earn 10 xp per monster and need 100 xp to level up [29, 31].

- A predictor for a reward can serve/become a replacement for that reward in terms of behavioural response (e.g. getting points in a game becomes associated with having fun and points can therefore become a motivating reward in themselves) [21-23, 29, 32, 33].

- People tend to dislike rewards that are delivered in a way that is perceived to be controlling [22, 34-36].

- Feelings of mastery, self-achievement, and effortless high performance appear to be quite rewarding, if somewhat more difficult to achieve than other types of reward [35-37].
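Two of the points in the list above are easy to state in code: the difference between a predictable and an unpredictable reward schedule, and the "large numbers" example. The sketch below is only an illustration of the arithmetic. It says nothing about how players actually respond, which is what the cited research is about. The 100 xp and 10 xp figures come from the examples above, while the function names, the 10% drop chance, and the 1000 xp drop value are assumptions chosen so that both schedules have the same expected payout.

```python
import math
import random

def fixed_schedule(kills, xp_per_kill=100):
    """Predictable reward: every kill pays the same amount."""
    return [xp_per_kill] * kills

def variable_schedule(kills, drop_chance=0.1, drop_value=1000, seed=42):
    """Unpredictable reward: most kills pay nothing, a few pay a lot."""
    rng = random.Random(seed)  # seeded so the run is repeatable
    return [drop_value if rng.random() < drop_chance else 0 for _ in range(kills)]

def kills_to_level(xp_per_kill, xp_needed):
    """Number of kills needed to reach the level threshold."""
    return math.ceil(xp_needed / xp_per_kill)

fixed = fixed_schedule(1000)
variable = variable_schedule(1000)

# Same expected payout by construction (10% of 1000 xp is 100 xp per kill);
# the sampled average of the variable schedule will only be close to it.
print(sum(fixed) / len(fixed), sum(variable) / len(variable))

# The "large numbers" point: both systems demand exactly the same effort.
print(kills_to_level(100, 1000), kills_to_level(10, 100))
```

The design point is that a designer can vary how a reward feels (steady versus occasional, big numbers versus small) without changing how much a player earns on average or how long levelling takes.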

As such, the neuroscience research I have discussed isn’t, primarily, aimed at working out how to make a reward motivating or pleasant but rather at understanding why it is so at a physiological level. Or if it does take an applied view, it is usually about using drugs or direct brain stimulation to get results.