关于游戏的现代体积渲染技术概述

作者:Stoyan Nikolov

几个月以前,索尼公布了他们即将推出的MMO游戏《EverQuest Next》。让我非常兴奋的是,他们选择把游戏世界立体化。我一直对体积渲染技术充满兴趣,所以借本系列,我将解释为什么这种技术最适用于当下的游戏以及近未来的游戏。

在这个系列文章中,我将介绍一些算法的细节以及它们的实际用法。

本系列的第一篇文章将介绍体积渲染的概念以及它给游戏带来的最大好处。

体积渲染是著名的算法家族中的一员,其作用是把一系列3D样本投射为2D图像。它广泛运用于各个领域如医学成像(游戏邦注:核磁共振成像、CRT可视化)、工业、生物学、地球物理学,等等。它在游戏中使用得并没有那么多,案例包括《Delta Force》、《Outcast》、《C&C Tiberian Sun》等游戏。体积渲染技术的使用一直在萎缩,直到最近,它才被人们“再发现”,重新在游戏中流行起来。

我们通常对游戏的网格模型的表面有兴趣,却很少关注它的内部结构——在医疗运用方面是相反的。极少软件选择体积渲染,而是普遍使用多边形网格模型表现法。然而,体积渲染有两个对现代游戏越来越重要的特点——可破坏性和程序生成法。

游戏如《我的世界》已经表明,玩家非常喜欢按自己的方式创造自己的世界。另一方面,游戏如《红色派系》的玩法重点是对周边环境的破坏。这两款游戏,尽管非常不同,但基本上都需要相同的技术。

可破坏性(当然也包括可构造性)是游戏设计师们积极寻找的属性。

修改网格模型的办法之一是把它变成传统的多边形模型。这是一种相当复杂的做法。中间件解决方案如NVIDIA Apex可以解决多边形网格模型的可破坏性问题,但通常仍然需要设计师做其他工作,且构造性问题基本上没有解决。

体积渲染正是在这方面派上大用场。网格模型的表现法是一种比三角形集合体更加自然得多的3D网格体素(立体象素)。这个立体物已经包含了关于对象形状的重要信息,且它的修改接近于现实世界的情况:要么添加立体物,要么从另一个立体物中减去立体物。使用Zbrush就是类似的过程。

体素自身可以包含数据,但它们通常定义一个距离域——那意味着每一个体素都编码一个值,以表明焦点离网格模型表面的距离是多少。体素也包含材质信息。因为这种定义,作用于体素网格模型之上的体素构造几何法(CSG)变得非常烦琐。我们可以在网格模型上自由地添加或减去任何立体物。这使建模过程更加灵活。

程序生成法是另一个具有诸多优点的特性。首先,它可以节省大量人力和时间。关卡设计师可以程序生成地形,然后微调,而不是从无到有制作每一个乏味的细节。当需要制作大量场景——如MMORPG游戏时,你就会发现这种节省是多么重要。因为新一代游戏机的内存更大,性能更好了,玩家会要求更多更好的内容。只有使用程序内容生成法,游戏开发者才能让未来的游戏满足玩家的各种需求。

总之,程序生成法意味着我们使用具有比较少的输入参数的数学函数来制作网格模型。至少美工不需要雕刻模型的最初版本。

使用程序生成法,开发者还可以获得比较高的压缩比,从而节省大量下载资源和硬盘空间。我们已经看到程序生成材质的巨大优势,所以为什么不把它运用于3D网格呢?

体积渲染技术的使用不局限于网格模型。现在它的使用已经扩展到其他方面,比如:

全局光照(看虚幻引擎4的强大效果)

液体模似

用于视觉效果的通用图形处理器(GPGPU)Ray Marching

接下来,我将介绍大部分工作室都使用的基本技术。

技术类型

体积渲染技术可以分为两大类——直接的和间接的。

直接技术根据场景的体积表现法产生2D图像。几乎所有现代算法都使用光线投射的某些变体,然后在GPU上做计算。

尽管直接技术能产生很漂亮的图像,但它有一些缺陷导致无法在游戏中广泛运用:

1、相当高的每帧成本。计算严重依赖计算着色器,虽然现代GPU有非常好的性能,但仍然不能高效地绘制三角形。

2、难以与其他网格混合。对于虚拟世界的某些部分,我们可能仍然希望使用常规的三角网格。美工普遍使用这个工具来编辑东西,将它们用于表现体素,可能极其困难。

3、难以与其他系统上互动操作。大多数实例物理系统要求使用三角网格表现。

间接技术产生暂时的网格表现。他们从体积中产生三角网格的效率很高。转换成更熟悉的三角网格有许多好处。

多边形化(从体素转变成三角形)只要一次就能完成——在游戏/关卡加载时。之后在每一帧渲染三角网格。GPU很擅长处理三角形,所以我们可以得到更好的每帧表现。我们还不必对引擎或第三方库做太大的改变,因为它们可以处理三角形。

在本文中,我将讨论间接体积渲染技术——多边形化的过程和如何高效地使用已生成的网格和快速渲染它——即使它很大。

什么是体素?

体素是立体物表面的构成部件。所谓的“体素”就是“体积元素”,是我们更加熟悉的像素的3D版。每一个体素在3D空间中都有一个位置和与之相关的属性。尽管可以有任何我们想要的属性,我们将讨论的所有算法都要求至少有描述表面的数量值。在游戏中,我们最感兴趣的是对象的表面而不是它的内部——这给我们优化的空间。从更技术性的角度说,我们希望从数量场(体素)中抽出等值面。

生成网格的一系列体素通常在形状上是平行六面体,叫作“体素格”。如果我们使用体素格,那么它当中的体素的位置就是隐蔽的。

在所有体素中,我们设置的数量通常是这个体素所在空间的点的距离函数。距离函数一般写成 f(x, y, z) = dxyz的形式,其中dxyz是从空间中的点x,y,z到表面的最短距离。如果这个体素是“在网格中”,那么这个值就是负数。

想像一下,一个网格做成的球表现为体素格,所有在球“当中”的体素都是负值,所有在球之外的体素都是正值,所有正好在体素格上的体素的值都是0。

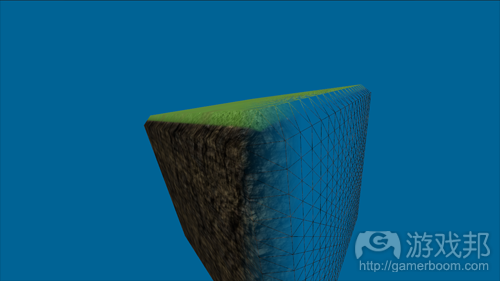

(用MC算法做的方块多边形化——注意边缘细节的缺失)

移动立方体法(MC算法)

最简单最广为人知的多边形化算法是“移动立方体”。有许多技术产生的结果比它的好,但因为它的简单和精致,所以仍然值得了解。移动立方人体模型也是许多更先进的算法的基础,这给我们一个简单地比较它们的框架。

这种算法的思路主要是,一次取8个体素形成一个虚拟方块的8个角。我们独立地操作各个方块,产生三角形。

为了决定我们到底要生成什么,我们只使用在角上的体素的符号,形成256个案例中的一个。那些预先计算好的案例表告诉我们要生成哪个顶点,在哪里以及如何结合成三角形。

这些顶点总是在方块的边缘生成,它们的确切位置是通过加入那个边缘的角上的体素的值来计算的。

我不打算深入这个执行过程的细节——相当简单,且可以在网上找到参考资料。但我想强调几个适用于大部分MC算法的要点:

1、这种算法产生平滑的表面。顶点不会在方块内部产生,而总是在表面。如果尖锐特征正好在方块内部(非常可能),那么它会被消除。这使这种算法非常适用于更有机形式的网格——比如地形,但不适合像建筑这种具有尖锐边缘表面的东西。为了产生足够尖锐的特征,你需要有非常高分辨率的体素格,而这种体素格通常是难以执行的。

2、这种算法很快。应该生成什么三角形的困难算法是在表格中提前计算好的。各个方块本身的操作是非常简单的。

3、这种算法很容易平行化。各个方块都是互相独立的,可以平行计算。这个算法属于“高度并行”家族的一员。

移动所有方块后,这个网格就是由生成的三角形组成的了。

MC算法往往会生成许多小三角形。如果我们的网格比较大,可能很快就会带来麻烦。

如果你打算把它用于生产,那么请注意它不总是产生“无懈可击”的网格——存在可能产生小洞的结构。这是非常令人烦恼的,但可以用其他算法解决。

在本系列的下一篇文章中,我将讨论用于游戏的体积渲染执行法的要求是什么,主要从多边形化的速度和渲染性能两方面来说。我还用更先进的技术来达到这两个方面的要求。(本文为游戏邦/gamerboom.com编译,拒绝任何不保留版权的转载,如需转载请联系:游戏邦)

Overview of Modern Volume Rendering Techniques for Games

By Stoyan Nikolov

A couple of months ago Sony revealed their upcoming MMO title EverQuest Next. What made me really excited about it was their decision to base their world on a volume representation, which enables them to show some amazing videos. I’ve been very interested in volume rendering for a long time, and in this series I’d like to point out the techniques that are most suitable for games today and in the near future.

In this series I’ll explain the details of some of the algorithms as well as their practical implementations.

This first post introduces the concept of volume rendering and its greatest benefits for games.

Volume rendering is a well-known family of algorithms that project a set of 3D samples onto a 2D image. It is used extensively in a wide range of fields such as medical imaging (MRI, CRT visualization), industry, biology and geophysics. Its usage in games, however, has been relatively modest, with some interesting use cases in games like Delta Force, Outcast, C&C Tiberian Sun and others. The usage of volume rendering faded until recently, when we saw an increase in its popularity and a sort of “rediscovery”.

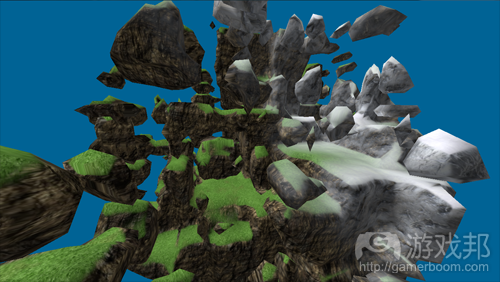

A voxel-based scene with complex geometry

In games we are usually interested only in the surface of a mesh – its internal composition is seldom of interest – in contrast to medical applications. Relatively few games have chosen volume rendering over the usual polygon-based mesh representations. Volumes, however, have two characteristics that are becoming increasingly important for modern games – destructibility and procedural generation.

Games like Minecraft have shown that players are very much engaged by the possibility of creating their own worlds and shaping them the way they want. On the other hand, titles like Red Faction place an emphasis on the destruction of the surrounding environment. Both these games, although very different, have essentially the same technology requirement.

Destructibility (and of course constructability) is a property that game designers are actively seeking.

One way to make meshes modifiable is to apply the modifications directly to traditional polygonal models. This has proved to be quite complicated. Middleware solutions like NVIDIA Apex solve polygon mesh destructibility, but they usually still require input from a designer, and the construction part remains largely unsolved.

Minecraft unleashed the creativity of users

Volume rendering can help a lot here. A 3D grid of volume elements (voxels) is a much more natural representation of a mesh than a collection of triangles. The volume already contains the important information about the shape of the object, and modifying it is close to what happens in the real world: we either add volumes to one another or subtract them. Many artists already work in a similar way in tools like ZBrush.

Voxels themselves can contain any data we like, but usually they define a distance field – that means that every voxel encodes a value indicating how far we are from the surface of the mesh. Material information is also embedded in the voxel. With such a definition, constructive solid geometry (CSG) operations on voxel grids become trivial. We can freely add or subtract any volume we’d like from our mesh. This brings a tremendous amount of flexibility to the modelling process.
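To show why CSG becomes trivial on a distance field, here is a minimal sketch that assumes each voxel holds a signed distance (negative inside, the convention defined in the “What is a Voxel?” section) and that a flat Python list stands in for the voxel grid. It uses the common min/max formulation of CSG on distance fields; the function names are hypothetical.

```python
"""CSG on voxel grids that store signed distances (negative = inside)."""

from typing import List

Grid = List[float]  # hypothetical flat voxel grid, one signed distance per voxel


def csg_union(a: Grid, b: Grid) -> Grid:
    # A point is inside the union if it is inside either volume.
    return [min(da, db) for da, db in zip(a, b)]


def csg_subtract(a: Grid, b: Grid) -> Grid:
    # Carve b out of a: inside a AND outside b.
    return [max(da, -db) for da, db in zip(a, b)]


def csg_intersect(a: Grid, b: Grid) -> Grid:
    # Inside both volumes.
    return [max(da, db) for da, db in zip(a, b)]


if __name__ == "__main__":
    # Four voxels along a line; values are signed distances.
    terrain = [-2.0, -1.0, 0.5, 3.0]
    blast = [1.0, -0.5, -1.0, 2.0]  # e.g. a spherical "explosion" volume
    print(csg_subtract(terrain, blast))  # → [-1.0, 0.5, 1.0, 3.0]
```

Each operation touches every voxel once and needs no knowledge of the mesh topology. The result always has the correct sign, but away from the surface it is only a bound on the true distance; that is usually acceptable because polygonization mostly cares about values near the zero crossing.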

Procedural generation is another important feature with many advantages. First and foremost, it can save a lot of human effort and time. Level designers can generate a terrain procedurally and then just fine-tune it, instead of starting from absolute zero and working out every tedious detail. This saving is especially relevant when very large environments have to be created – as in MMORPG games. With the new generation of consoles offering more memory and power, players will demand much more and better content. Only by generating content procedurally will the creators of virtual worlds be able to achieve the variety that future games need.

In short, procedural generation means that we create the mesh from a mathematical function that has relatively few input parameters. No sculpting by an artist is required, at least for the first raw version of the model.

Developers can also achieve high compression ratios and save a lot of download size and disk space by using procedural content generation. The surface is represented implicitly, with functions and coefficients, instead of with heightmaps or 3D voxel grids (two popular surface representations in games). We already see huge savings from procedurally generated textures – why shouldn’t the same apply to 3D meshes?
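To make “a mathematical function with relatively few input parameters” concrete, here is a hypothetical terrain function driven by six numbers. Sums of sine waves stand in for the noise functions a production generator would more likely use, and the value is only sign-correct (negative below ground, matching the voxel convention later in this post), not a true Euclidean distance. The sampling step shows how those few coefficients expand into a full voxel grid; `TerrainParams`, `terrain_field` and `sample_grid` are illustrative names.

```python
"""A hypothetical procedural terrain: a few coefficients instead of stored voxels."""

import math
import struct
from dataclasses import dataclass, fields
from typing import List


@dataclass
class TerrainParams:
    # Six numbers describe the whole (unbounded) terrain.
    base_height: float = 8.0
    amp1: float = 3.0
    freq1: float = 0.15
    amp2: float = 1.0
    freq2: float = 0.6
    phase: float = 0.5


def terrain_field(p: TerrainParams, x: float, y: float, z: float) -> float:
    """Signed value: negative below the ground surface, positive above (y is up)."""
    height = (p.base_height
              + p.amp1 * math.sin(p.freq1 * x) * math.cos(p.freq1 * z)
              + p.amp2 * math.sin(p.freq2 * x + p.phase))
    return y - height


def sample_grid(p: TerrainParams, n: int) -> List[float]:
    """Bake the function into an n*n*n voxel grid (x fastest, then y, then z)."""
    return [terrain_field(p, x, y, z)
            for z in range(n) for y in range(n) for x in range(n)]


if __name__ == "__main__":
    params = TerrainParams()
    grid = sample_grid(params, 32)
    param_bytes = len(fields(TerrainParams)) * struct.calcsize("<f")
    grid_bytes = len(grid) * struct.calcsize("<f")
    print(param_bytes, grid_bytes)  # → 24 131072
```

Only the six coefficients would have to ship; the grid can be regenerated on load. The gap grows cubically with resolution, since doubling the grid size multiplies the baked storage by eight while the parameter count stays the same.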

The use of volume rendering is not restricted to meshes. Today we see some other uses too, including:

- Global illumination (see the great work in Unreal Engine 4)

- Fluid simulation

- GPGPU ray-marching for visual effects

In the next posts in the series I’ll list and describe in detail the modern volume rendering algorithms that I believe have the greatest potential for use in current and near-future games.

Types of Techniques

Volume rendering techniques can be divided into two main categories – direct and indirect.

Direct techniques produce a 2D image from the volume representation of the scene. Almost all modern algorithms use some variation of ray-casting and do their calculations on the GPU. You can read more on the subject in the papers/techniques “Efficient Sparse Voxel Octrees” and “Gigavoxels”.
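As a rough illustration of the ray-casting idea behind direct techniques, here is sphere tracing, a common ray-marching variant for distance fields, run on the CPU against an analytic sphere instead of in a compute shader. The techniques cited above march rays through sparse voxel hierarchies rather than an analytic function, so treat this only as a sketch of the principle; the scene, camera and thresholds are assumptions.

```python
"""Direct rendering sketch: sphere tracing a signed distance field, one ray per pixel."""

import math
from typing import Callable, Optional, Tuple

Vec3 = Tuple[float, float, float]


def sphere_sdf(p: Vec3, center: Vec3 = (0.0, 0.0, 5.0), radius: float = 1.0) -> float:
    # Negative inside, positive outside, zero on the surface.
    return math.dist(p, center) - radius


def trace(sdf: Callable[[Vec3], float], origin: Vec3, direction: Vec3,
          max_steps: int = 128, eps: float = 1e-4, max_dist: float = 100.0) -> Optional[float]:
    """Return the distance along the (unit) ray to the surface, or None on a miss."""
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * k for o, k in zip(origin, direction))
        d = sdf(p)
        if d < eps:
            return t  # close enough to the surface: hit
        t += d  # safe step: no surface is closer than d
        if t > max_dist:
            break
    return None


def render_ascii(width: int = 24, height: int = 12) -> str:
    rows = []
    for j in range(height):
        row = ""
        for i in range(width):
            # Simple pinhole camera at the origin looking down +z.
            x = (i + 0.5) / width * 2.0 - 1.0
            y = 1.0 - (j + 0.5) / height * 2.0
            length = math.sqrt(x * x + y * y + 1.0)
            direction = (x / length, y / length, 1.0 / length)
            hit = trace(sphere_sdf, (0.0, 0.0, 0.0), direction)
            row += "#" if hit is not None else "."
        rows.append(row)
    return "\n".join(rows)


if __name__ == "__main__":
    print(render_ascii())
```

Every pixel repeats this loop every frame, which is where the per-frame cost discussed below comes from.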

Although direct techniques produce great looking images, they have some drawbacks that hinder their wide usage in games:

- Relatively high per-frame cost. The calculations rely heavily on compute shaders, and while modern GPUs perform very well with them, they are still, first and foremost, designed to draw triangles.

- Difficulty mixing with other meshes. For some parts of the virtual world we might still want to use regular triangle meshes. Artists know the tools for editing them well, and moving those assets to a voxel representation may be prohibitively difficult.

- Difficult interop with other systems. Most physics systems, for instance, require triangle representations of the meshes.

Indirect techniques on the other hand generate a transitory representation of the mesh. Effectively they create a triangle mesh from the volume. Moving to a more familiar triangle mesh has many benefits.

The polygonization (the transformation from voxels to triangles) can be done only once – on game/level load. After that, the triangle mesh is rendered on every frame. GPUs are designed to work well with triangles, so we expect better per-frame performance. We also don’t need to make radical changes to our engine or third-party libraries, because they probably work with triangles anyway.

In all the posts in this series I’ll talk about indirect volume rendering techniques – both the polygonization process and the way we can effectively use the created mesh and render it fast – even if it’s huge.

What is a Voxel?

A voxel is the building block of our volume surface. The name ‘voxel’ comes from ‘volume element’ and is the 3D counterpart of the more familiar pixel. Every voxel has a position in 3D space and some properties attached to it. Although we can have any property we’d like, all the algorithms we’ll discuss require at least a scalar value that describes the surface. In games we are mostly interested in rendering the surface of an object and not its internals – this gives us some room for optimizations. More technically speaking we want to extract an isosurface from a scalar field (our voxels).

The set of voxels that will generate our mesh is usually parallelepipedal in shape and is called a ‘voxel grid’. If we employ a voxel grid the positions of the voxels in it are implicit.
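Because positions in a voxel grid are implicit, the grid only needs to store the per-voxel values plus an origin and a spacing; a voxel’s position is recomputed from its index. A minimal sketch, assuming a flat array in x-fastest order (one common layout, not one the post prescribes); `VoxelGrid` is a hypothetical name.

```python
"""A voxel grid whose voxel positions are implicit in the array index."""

from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class VoxelGrid:
    nx: int
    ny: int
    nz: int
    origin: Tuple[float, float, float] = (0.0, 0.0, 0.0)
    spacing: float = 1.0
    values: List[float] = field(default_factory=list)

    def __post_init__(self) -> None:
        if not self.values:
            self.values = [0.0] * (self.nx * self.ny * self.nz)

    def index(self, i: int, j: int, k: int) -> int:
        # x varies fastest, then y, then z.
        return i + self.nx * (j + self.ny * k)

    def coords(self, idx: int) -> Tuple[int, int, int]:
        # Inverse of index(): recover (i, j, k) from the flat index.
        i = idx % self.nx
        j = (idx // self.nx) % self.ny
        k = idx // (self.nx * self.ny)
        return i, j, k

    def position(self, i: int, j: int, k: int) -> Tuple[float, float, float]:
        # The world-space position is derived, never stored.
        ox, oy, oz = self.origin
        return (ox + i * self.spacing, oy + j * self.spacing, oz + k * self.spacing)


if __name__ == "__main__":
    grid = VoxelGrid(4, 3, 2, origin=(-1.0, 0.0, 2.0), spacing=0.5)
    idx = grid.index(3, 2, 1)
    print(idx, grid.coords(idx), grid.position(3, 2, 1))  # → 23 (3, 2, 1) (0.5, 1.0, 2.5)
```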

In every voxel, the scalar we set is usually the value of the distance function at the point in space where the voxel is located. The distance function has the form $f(x, y, z) = d_{xyz}$, where $d_{xyz}$ is the shortest distance from the point $(x, y, z)$ to the surface. If the voxel is “in” the mesh, then the value is negative, so strictly speaking this is a signed distance function.

If you imagine a ball as the mesh in our voxel grid, all voxels “in” the ball will have negative values, all voxels outside the ball positive, and all voxels that are exactly on the surface will have a value of 0.
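The ball example can be checked numerically: sample the signed distance to a sphere at every voxel of a small grid and count the signs. A minimal sketch; the grid size (9×9×9, unit spacing, centered on the origin) and the radius of 3 are arbitrary choices.

```python
"""Sampling a sphere's signed distance function into a voxel grid."""

import math
from typing import List


def sphere_distance(x: float, y: float, z: float, radius: float) -> float:
    # Shortest distance to the sphere surface, negative inside the ball.
    return math.sqrt(x * x + y * y + z * z) - radius


def sample_sphere(n: int, radius: float) -> List[float]:
    """Sample an n*n*n grid of unit-spaced voxels centered on the origin."""
    half = (n - 1) / 2.0
    return [sphere_distance(i - half, j - half, k - half, radius)
            for k in range(n) for j in range(n) for i in range(n)]


if __name__ == "__main__":
    voxels = sample_sphere(9, 3.0)
    inside = sum(1 for v in voxels if v < 0)
    on_surface = sum(1 for v in voxels if v == 0)
    outside = sum(1 for v in voxels if v > 0)
    print(inside, on_surface, outside)  # → 93 30 606
```

The 30 zero-valued voxels are the lattice points exactly at distance 3 from the center, such as (3, 0, 0) and (2, 2, 1); in general few voxels land exactly on the surface, which is why polygonization has to interpolate between neighbors.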

Cube polygonized with a MC-based algorithm – notice the loss of detail on the edge

Marching Cubes

The simplest and most widely known polygonization algorithm is called ‘Marching cubes’. Many techniques give better results, but its simplicity and elegance still make it well worth looking at. Marching cubes is also the basis of many more advanced algorithms and will give us a frame in which we can more easily compare them.

The main idea is to take 8 voxels at a time that form the eight corners of an imaginary cube. We work with each cube independently from all others and generate triangles in it – hence we “march” on the grid.

To decide what exactly we have to generate, we use just the signs of the voxels on the corners, which select one of 256 cases (each of the 8 corners is either inside or outside, so there are $2^8 = 256$ combinations). A precomputed table of those cases tells us which vertices to generate, where to place them and how to combine them into triangles.
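Forming the case index amounts to packing the eight corner signs into one byte. A minimal sketch; the corner numbering in `CORNER_OFFSETS` and the choice that a negative value sets the corner’s bit are assumptions (each published table fixes its own corner order, and the index must match the table you use). The 256-entry triangle table itself is omitted.

```python
"""Marching cubes: compute the 8-bit case index of one cube from its corner signs."""

from typing import Callable, List, Sequence

Field = Callable[[int, int, int], float]

# Hypothetical corner order: (dx, dy, dz) offsets of the cube's 8 corners.
CORNER_OFFSETS = [
    (0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),
    (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1),
]


def case_index(corner_values: Sequence[float]) -> int:
    """Set bit n when corner n is inside the surface (negative value)."""
    index = 0
    for bit, value in enumerate(corner_values):
        if value < 0:
            index |= 1 << bit
    return index


def cube_corners(field: Field, i: int, j: int, k: int) -> List[float]:
    # Gather the 8 voxel values of the cube whose lowest corner is voxel (i, j, k).
    return [field(i + dx, j + dy, k + dz) for dx, dy, dz in CORNER_OFFSETS]


if __name__ == "__main__":
    def plane(x, y, z):
        return z - 0.5  # a horizontal surface at z = 0.5, inside below it

    print(case_index(cube_corners(plane, 0, 0, 0)))  # → 15 (bottom 4 corners inside)
    print(case_index(cube_corners(plane, 0, 0, 1)))  # → 0 (fully outside: no triangles)
```

Cases 0 and 255 (all corners on the same side) produce no triangles; every other index selects a row of the table.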

The vertices are always generated on the edges of the cube, and their exact position is computed by linearly interpolating the values of the two voxels at the ends of that edge.
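On an edge whose endpoint values $v_0$ and $v_1$ have opposite signs, the surface (value 0) lies where the straight line between the two samples crosses zero, at the fraction $t = v_0 / (v_0 - v_1)$ of the way from $p_0$ to $p_1$. A sketch of that interpolation; `edge_vertex` is a hypothetical name.

```python
"""Marching cubes: place a vertex on a cube edge by linear interpolation."""

from typing import Tuple

Vec3 = Tuple[float, float, float]


def edge_vertex(p0: Vec3, p1: Vec3, v0: float, v1: float) -> Vec3:
    """Return the point between p0 and p1 where the interpolated value is zero.

    Assumes v0 and v1 have opposite signs (the edge crosses the surface).
    """
    t = v0 / (v0 - v1)  # fraction of the way from p0 to p1
    return tuple(a + t * (b - a) for a, b in zip(p0, p1))


if __name__ == "__main__":
    # Corner values -1 and 3: the zero crossing is a quarter of the way along.
    print(edge_vertex((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), -1.0, 3.0))  # → (0.25, 0.0, 0.0)
```

Because the vertex can only slide along an edge, a corner of the true surface that falls inside the cube cannot be reproduced, which is the smoothing effect described below.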

I won’t go into the details of the implementation – it is pretty simple and widely available on the Internet – but I want to underline some points that are valid for most MC-based algorithms:

- The algorithm expects a smooth surface. Vertices are never created inside a cube but always on its edges. If a sharp feature happens to be inside a cube (very likely), it will be smoothed out. This makes the algorithm good for meshes with more organic forms, like terrain, but unsuitable for surfaces with sharp edges, like buildings. To produce a sufficiently sharp feature you’d need a very high-resolution voxel grid, which is usually infeasible.

- The algorithm is fast. The hard part, working out which triangles to generate in each case, is precomputed in a table. The operations on each cube itself are very simple.

- The algorithm is easily parallelizable. Each cube is independent of the others and can be processed in parallel, which puts the algorithm in the “embarrassingly parallel” family.
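Because each cube’s output depends only on its own eight corners, the grid can be split into independent slabs and processed concurrently, and the merged result is identical to a serial pass. A sketch using Python’s `concurrent.futures.ThreadPoolExecutor`, assuming a hypothetical per-cube function that only computes the case index (a real implementation would emit triangles from the lookup table). In CPython the GIL keeps this pure-Python version from actually running faster; the point is only that no cube needs another cube’s result. On a GPU, each cube would typically map to one thread.

```python
"""Marching cubes is embarrassingly parallel: cubes can be processed in any order."""

from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List

Field = Callable[[int, int, int], float]

CORNER_OFFSETS = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),
                  (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1)]


def process_cube(field: Field, i: int, j: int, k: int) -> int:
    # Stand-in for the real per-cube work: build the case index from corner signs.
    index = 0
    for bit, (dx, dy, dz) in enumerate(CORNER_OFFSETS):
        if field(i + dx, j + dy, k + dz) < 0:
            index |= 1 << bit
    return index


def process_slab(field: Field, n: int, k: int) -> List[int]:
    # One z-slab of cubes; it reads only voxels in layers k and k + 1.
    return [process_cube(field, i, j, k) for j in range(n) for i in range(n)]


def march_serial(field: Field, n: int) -> List[int]:
    return [c for k in range(n) for c in process_slab(field, n, k)]


def march_parallel(field: Field, n: int, workers: int = 4) -> List[int]:
    with ThreadPoolExecutor(max_workers=workers) as pool:
        slabs = pool.map(lambda k: process_slab(field, n, k), range(n))
        # map() yields results in submission order, so the output order is stable.
        return [c for slab in slabs for c in slab]


if __name__ == "__main__":
    def ball(x, y, z):
        # Same sign as the distance to a sphere of radius 3 centered at (4, 4, 4).
        return (x - 4) ** 2 + (y - 4) ** 2 + (z - 4) ** 2 - 9.0

    n = 8  # 8 * 8 * 8 cubes over a 9 * 9 * 9 voxel grid
    assert march_parallel(ball, n) == march_serial(ball, n)
    active = sum(1 for c in march_serial(ball, n) if c not in (0, 255))
    print("cubes that produce triangles:", active)
```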

After marching all the cubes, the mesh is composed of all the generated triangles.

Marching cubes tends to generate many tiny triangles. This can quickly become a problem if we have large meshes.
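A quick way to see why the triangle count grows fast: count the cubes that straddle the surface of a sphere as the grid resolution doubles. Each such cube emits between one and five triangles in the classic table, and the count tracks surface area measured in cells, so it should roughly quadruple with each doubling. A sketch under the assumption of a sphere whose radius is a fixed fraction (0.4) of the grid size; `active_cells` is a hypothetical name.

```python
"""Why marching cubes produces many small triangles: active cells grow with area."""

import math


def active_cells(n: int) -> int:
    """Count cubes of an n^3-cube grid that cross a sphere of radius 0.4 * n."""
    center = n / 2.0
    radius = 0.4 * n

    def value(x: int, y: int, z: int) -> float:
        return math.sqrt((x - center) ** 2 + (y - center) ** 2 + (z - center) ** 2) - radius

    # Sample every voxel once; there are n + 1 voxels per axis for n cubes.
    m = n + 1
    inside = [[[value(x, y, z) < 0 for z in range(m)] for y in range(m)] for x in range(m)]

    count = 0
    for x in range(n):
        for y in range(n):
            for z in range(n):
                corners = {inside[x + dx][y + dy][z + dz]
                           for dx in (0, 1) for dy in (0, 1) for dz in (0, 1)}
                if len(corners) == 2:  # mixed signs: the case is neither 0 nor 255
                    count += 1
    return count


if __name__ == "__main__":
    for n in (8, 16, 32):
        print(n, active_cells(n))
```

With the object size fixed in world units, doubling the voxel resolution multiplies the triangle count by about four while each triangle shrinks, which is how large meshes quickly become expensive.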

If you plan to use it in production, beware that it doesn’t always produce ‘watertight’ meshes – there are configurations that will generate holes. This is pretty unpleasant and is fixed by later algorithms.

In the next post in the series I’ll discuss the requirements of a good volume rendering implementation for a game in terms of polygonization speed and rendering performance, and I’ll look into ways to achieve them with more advanced techniques. (source: gamedev)

Fujian Public Security Network Registration No. 35020302001549