阐述《Pugs Luv Beats》中的音乐制作理论和乐趣

作者:Yann Seznec

总是有人问我是如何制作游戏。这点让我非常惊讶,因为我并不认为自己真的在制作游戏——我的本职是音乐制作人兼演奏者,但是因为在四年前我凭借着Wii远程音乐控制软件获得了一定的名气而创建了Lucky Frame后,我发现我和自己的公司正逐渐朝着独立游戏领域而发展,对于这一点我也感到非常欣慰。

作为音乐制作人,我意识到了游戏的互动音频和音乐所存在的巨大潜力。我的一位音乐家朋友曾经对我说,很多时候音乐世界中最具创造性的内容并不存在于音乐中,而是在音乐软件中。我想如果巴赫,Coltrane或Nancarrow还活着,他们肯定也会致力于设计生成音乐系统或新的Monome修补程序。

也许这并不算什么新鲜事了,所以我将在此讨论好几年前便兴起的,将技术作为创造性音乐工具这一热潮,并列举一些作曲家,像Russolo——为了创造出刺耳的噪音而自己创造出疯狂的乐器,以及Daphne Oram及其惊人的Oramics乐器——以便她将自己的作品带进电影中等。

我刚刚提到的Conlon Nancarrow便是另外一位热衷于使用技术的作曲家。当其他音乐家因为他的作品太过复杂而难以适从时,他已经开始在钢琴卷帘上穿孔了,并将其运行于自动钢琴上,如此而创造出所有人都未曾听过的更加复杂的音乐(并使他成为了通过程序去设计音乐的第一人)。

因为在过去20年时间里媒体技术的飞跃发展,这种趋势更是飞速发展。虽然高端录音和制作成本仍然很高,但是现在已经有越来越多人能够以极低的成本去录音,混音,编曲并制作出高质量的音效了。

如果你是生活在1980年并想要获得一个合成器,你便需要支付1300美元去获得一个ARP。但是现在你却可以从无数免费的软件合成器中做出选择(如果你想要创建一个硬件的话成本可能会更低!)使用这些免费软件你便能够更轻松且更快速地创造出你心目中的理想声音了。

关于作曲的另外一大变革,也是Matthew Herbert(游戏邦注:90年代末至今世界最成功的音乐制作人之一)常指出的是,过去的作曲家总会去模拟相应的对象(如贝多芬在编写长笛部分的乐曲时便模拟了夜莺的叫声,Cootie Williams的讲话式喇叭声亦是如此),而现在的作曲家可以走出这种束缚,即使用工具去记录下鸟叫声或别人的谈话声。

但是这一点也让众多作曲家和音乐人倍感困扰,因为如果所有设想都能够轻易实现,那又有何乐趣可言呢?我想这也是为何有越来越多作曲家和音乐人(特别是面向电子乐器)对界面和过程感兴趣的主要原因。如何制作音乐,并且这对于最终结果有何影响?这两个问题便是我在过去几年时间里一直在琢磨的,所以最终便出现了英国真人秀节目《Dragons’ Den》中的各种滑稽表演,以及我最近使用生蘑菇去控制电子乐器的举动。

对于我来说将游戏当成是创作音乐的工具只是时间问题,因为电子游戏是现在最强大的互动界面。所以我们何不尝试着利用游戏互动的强大力量去生成音乐?

为游戏世界的人呈现音频和音乐是非常激动人心的时刻。尽管我很喜欢《吉他英雄》这类型游戏,但是不得不承认的是这股趋势已经逐渐淡却了。尽管它们仍有自己的存在价值(我也敢保证它们最终会强势回归),但是这类型游戏一直都是以玩家为中心而准确地再创现有的音乐(通常都是模拟一种陌生的塑料乐器的界面)。

虽然这么做能够创造出非常吸引人的游戏设计,但是从音频或音乐设计角度来看却一点都不有趣。另外,我还发现这类型游戏与早前的音乐教程非常相似,都是更加关注于精确度而非乐趣,音乐性或创造性。

这便是我们创造《Pugs Luv Beats》(我们最近发行的一款iOS游戏,并在音频奖中获得了IGF Excellence的提名)的出发点。我们希望创造出一款以音乐创造为核心的音乐游戏,并受到音频生成内容的支持而不再强迫玩家将音乐作为一种挑战。

我们的首次尝试便是使用Lucky Frame的程序员/设计师Jon Brodsky不久前所创造的太空射击击鼓器。代号“Space Hero”便是用于设置一系列敌人的系统,最后你需要使用太空船去摧毁它。当敌人出现在屏幕上时他们将触发一种鼓声,而当它们被摧毁时也将发出一种低音——所以说这是一种可编辑且可演奏的击鼓器。多棒的原型电子警报器!

这一原型向我们证明了游戏设计(至少是游戏间的互动)从很多方面看来就是音乐表演和作曲一样的存在。当然这也取决于一系列选择,并且在很多情况下都将包含了开始,结束和循环三大元素。

曾经有位音乐老师对我说,当你创造了一个音符时,你只有4个选择。你可以再次演奏这个音符,你可以以不同方式再次演奏这个音符,你可以改变这个音符,或者你可以选择不演奏任何音符。在游戏设计世界中也存在着同样的选择,以及用于简化过程的技巧。用于编写赋格曲的某种技巧便是一典型的例子,即选取一个旋律并基于多种方式去重叠演奏它。通过这个视频我们将进一步了解巴赫的赋格曲的可视化。

你将会发现第一个旋律线只出现一次,并且当它开始出现时相同的旋律线将发生各种变化。在整首曲子中这种情况会反复地出现。

我总是将4个赋格曲旋律当成4个穿梭于同一个空间的不同角色,也许找到不同的升级机会他们便能够加快前进速度,提升自己的能力等。当然也存在其它比拟方式——如巴赫的可视化能够作为一款平台游戏的关卡编辑器。

《Pugs Luv Beats》是我们基于这一原理所创造的系列音乐游戏的第一款。我们尝试着在游戏中平衡音乐元素和游戏元素——接触一个元素便会衍生出另一个元素,反之亦然。换句话说,我们的目标便是使用编曲技巧去制作游戏,并使用游戏设计技巧去制作音乐。

为了实现这一目标,我们必须同时确保游戏玩法能够具有探索创造性,以及音乐生成系统能够提供复杂,可生成但可再现的音频内容。这几点之所以重要,原因各不相同。

很明显这是一种复杂的挑战:我们必须确保游戏行动能够创造音乐,但同时也必须具备一定的深度,并提供一些不断重复也不会无趣的价值。这是一种奇怪的科学,因为从某种层面来看,音乐是基于重复和模式而言,但是在游戏中重复的声音却会破坏玩家的幻想(或者让他们感到无聊)。

除此之外,音乐的复杂性也必须具有限度,否则玩家将会迷失于行动与音乐结果的联系间。举个例子来说吧,如果一个特定的砖块在玩家每次经过时都发出相同的声音,那么这种声音很快便会失去意义。

但是如果这一个砖块在玩家每次经过时都能呈现出不同的音调,这便能够添加一种音乐复杂性。而代价则是会减少行动与声音之间的联系——我们必须想办法做到平衡!

生成的或程序式音频已经变成了一个流行短语,并且我们也可以在网络上找到各种不同的定义。基于生成音乐框架进行思考便会产生不一样的效果,我们可以参考20世纪古典音乐作曲家,如Xenakis和John Cage的作品(还有富有争议的爵士乐作曲家,如Charles Mingus)。

在那个世界中,所有人都全身心地投入于创造一个能让表演者自己探索并生成音乐的系统。从多种方面看来,这也是关于创造一个限制框去实现各种音乐可能性。

例如在John Cage的管风琴乐曲《As Slow As Possible》中并未明确真正的节奏,这就意味着演奏时间可能只是20分钟也有可能长达639年。Cage的《Fontana Mix》进一步呈现了这一点——尽管其明确了“各种数量的磁带,各种数量的演奏者以及各种数量的乐器”使用一系列幻灯片和纸进行记录,并直接为演奏者呈现出创造模式。如此便可以衍生出无限种的模式。

也许这听起来很学术化,但是我们有必要明确这类型音乐编曲(通常被称为不确定性或参数化)与游戏之间的基本联系。当然了,游戏和运动便是设置一个系统并让玩家按照自己的方式行动的缩影。每次的游戏过程都会有所不同,而有时候不同的游戏过程又会导致一些难以识别的结果。这并不是一种巧合,这也是这些20世纪的作曲家深深沉迷于游戏理论的主要原因。

最后,再现性是游戏给予用户的一种基本奖励。就像之前所描述的,游戏中所生成的音乐必须具有深度,让用户能够感受到自己对于音乐创作的干预,但是为了明确游戏性质,神秘感也是必不可少。而这种神秘感必须是可再生的,否则用户将不可能理解相关模式并使用它们——这也是将噪音变成音乐的关键。

所以我们将如何将这些理论付诸实践呢?我们创造了一款关于戴帽子的狗的游戏。《Pugs Luv Beats》是我们在2011年12月所发行的一款iOS作曲游戏。在游戏中玩家将控制一只外星人培育的哈巴狗。统治者支配着一个奇妙且高度发达的文明,而这些哈巴狗变成了他们野心的牺牲品。它们只喜欢去收集音乐节拍——即基于特殊的“luv”所培育出的音乐中。但是因为统治者制定了一个愚蠢的方案,即培育最强大的音乐节拍,从而导致所有的一切都失去控制,而它们所居住的星球也遭到了彻底的摧毁。玩家的任务便是帮助这些哈巴狗培育更多节拍,而以此重建它们的星球,建造新的房屋,并找回失去的技术。

这是一款让我们非常自豪的游戏,特别是因为我们只是一个由3人组成的小团队。Jon Brodsky通过结合openFrameworks,Lua和定制游戏建造引擎Blud去实现所有的代码编程。我则是使用libpd和Pure Data进行音频设计。而Sean McIlroy扮演着团队中的美术人员和设计师的身份,并创造出了非常可爱且新奇的哈巴狗角色,以及整个游戏世界和UI。我们想要强调的一点是,尽管从很多方面看来《Pugs Luv Beats》是披着游戏外衣的音乐程序,但是我们同样也需要确保它不会变成一个音乐软件。

游戏玩法是围绕着哈巴狗所展开。一开始玩家将只拥有一栋房子和一只哈巴狗。玩家需要通过敲击道路让哈巴狗奔跑并去收集甜菜。当哈巴狗撞在甜菜上时它便创造了一个音节(ha!),而玩家的甜菜数量也会随之增加。当获得更多的甜菜后玩家便能购买另外一栋房子以及另外一只哈巴狗。购买更多房子和哈巴狗将能够帮助玩家进一步挖掘这个星球,并获得更多甜菜。而收集到足够多的甜菜玩家便能够购买一颗新的星球并再次开始游戏。

当你不断地扩展你的宇宙时,你将会发现一些新的地形,而这些新地形也将发出不同的声音,而为你的音乐调色板增色。但是这些地形却会降低你的哈巴狗数量,所以你便需要找到并使用帽子和服装去武装你的哈巴狗。你是否想在雪地里走得更快?那就去寻找圣诞帽!你是否想在水里游得更快?那就去寻找鲨鱼鳍!

对于游戏设计和结构来说,这种方法便意味着游戏是一个无止尽的循环过程——当你找到了所有帽子和服装后,你可以继续在这个宇宙中进行探索,并寻找其它新地形。每个星球都是单独的音乐世界,也就意味着你将选择重头开始,或回到之前的星球进行混音或歌曲改写——并且都必须使用装备后的哈巴狗。

在我们的美术风格和收集机制背后有一个生成音乐引擎在实践着这一理论与设计。让我们进一步分析这一引擎。

我们很早的时候便决定使用Pure Data去处理所有的音频。这主要多亏于Peter Brinkmann以及Peter Kirn等人将libpd与Pure Data结合在一起,为那些不想手动编程的音乐制作人创造一个免费的开放源图像编程界面。不得不承认的是我也属于这类人。

我并不是程序员,并且也不可能成为一名程序员。我总是很纠结于屏幕上的文本内容,但是代码中的图解却能帮助我更轻松地理解这些内容。

这也是我为何会选择Max/MSP,这个在当代艺术和音乐世界如此受欢迎的商业图像编程系统。当我开始学习并将其用于制作Wii Loop Machine时,我对于Max/MSP的使用也变得越来越熟练了。

Pure Data便是一种免费版本的Max/MSP,所以当我们能够将其整合到我们的项目中时,我们便算把握住了机会。

Pure Data具有巨大的优势(除了免费之外)。首先,它能帮助我们加快开发速度。我们是一个仅有三名成员的团队,并且其中只有一名程序员。而使用Pure Data让我能够处理所有的音频开发,包括预览,建模,实验等。我可以无需麻烦Jon而独立地使用音频引擎并作出任何复杂的调整。

其次,我们能够利用20多年来所累积的音频研究和开发结果,而不用完全依赖自己去创造所有内容。例如说我们想要一个精确的波表回放系统该如何?如果我们使用的是Pure Data,我们便无需自己创建这一系统,因为它本身就自带了这一功能。如果是混响系统呢?我们只需要在谷歌中进行搜索便能够看到许多基于Pure Data的不同混响系统(由世界各地的研究人员和开发者所创造的),并且我们可以轻松地调整这些系统而更好地应用于我们自己的项目中。

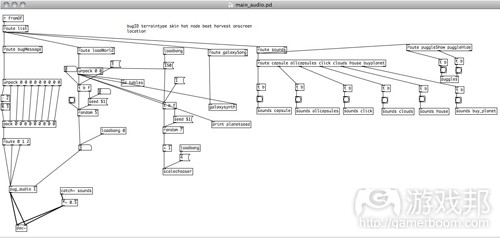

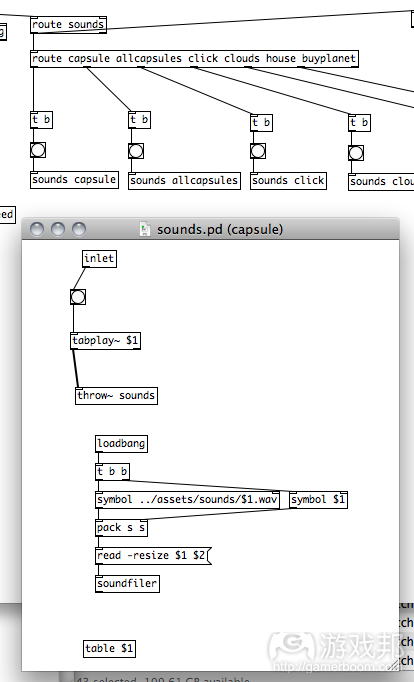

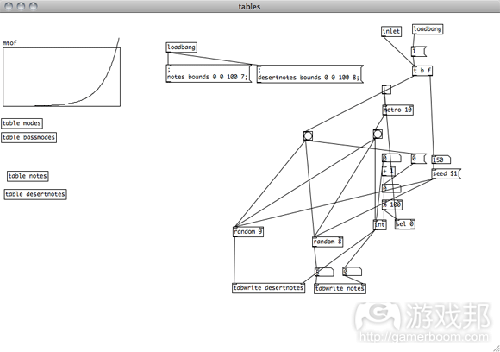

如果要更详细地解释这一系统的功效,我想说的是《Pugs Luv Beats》的整个声音引擎都是包含于这一补丁中。它能够接收到来自Lua/openFrameworks(由Jon所组装的)的信息,并通过整合这些信息去生成并修改相关声音。最简单的便是触发声音,如菜单行动,创建事物等。你可以看到在右边便是“声音通道”对象,即用于处理所有的标准声音。每个“声音”对象都包含了一个小补丁去触发一个声音。

也许使用这一系统去播放一个声音显得太过复杂了,但是它却能够为我们呈现出更加灵活的内容,更重要的是它能够让我(作为声音设计师)在无需麻烦Jon(可能再做一些更复杂的工作)的前提下改变任何与声音相关的内容。

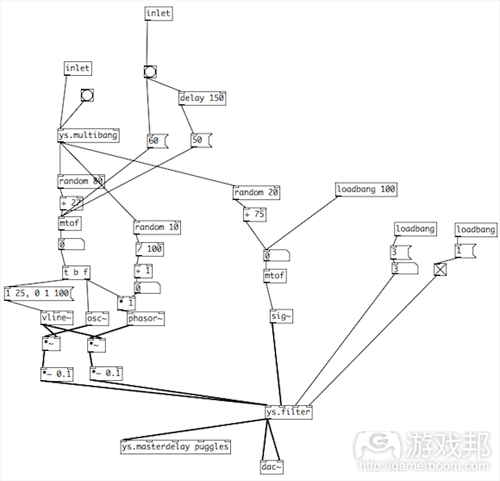

关于引擎中更复杂的一面便是我们所说的Pugglesynth。在这里我们所面临的挑战便是为“Puggles先生”(引导你观看游戏教程的友好的哈巴狗)创建一个声音库。我们希望他在每次出现时都能够发出一些声音,但是我们却不希望再使用更多的声音文件。我们希望呈现出更多乐趣和新鲜感,而不是反复使用相同的声音。因此我创造了一个双振荡器减法合成器,让这只哈巴狗每次出现时都能够触发一些随机的数字,控制着音高和滤波器的频率。而每次当他消失时,又会响起相同的两个音符。从而让Puggles先生变成一个可被识别的角色而不会让玩家感到厌烦。

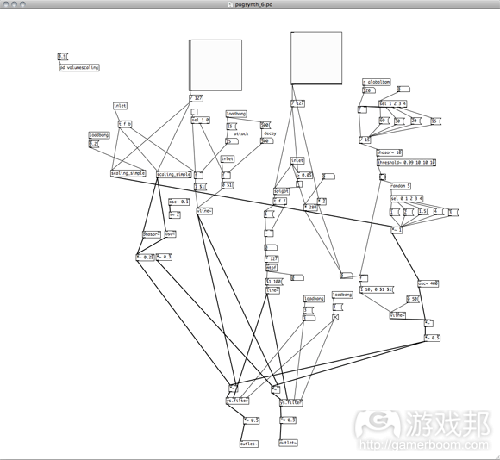

说到合成器,因为《Pugs Luv Beats》的银河合成器实在是太受欢迎了,所以我们最终决定将其整合成属于自己的应用——Pug合成器!没有什么能比x-y pad合成器更让人兴奋了!这里有一个Swamp合成器的快照补丁,并突出了电子乐式滤波器的速度与x轴是紧密相连的。

比起简单的连接,我们将这种速度整合到主BPM(同样也是控制着击鼓器)的倍数中。这就意味着哈巴狗的行动将会与你的节奏保持着一致。这同样也意味着当我们确保所有内容都遵循着一个强大的音乐框架的同时,我们也能够创建一个生成式击鼓器,并轻松地添加各种变奏曲。

这种使用特定主参数去整合所有内容的方法便是我们最初在《Pugs Luv Beats》中所使用的方法。设计一款创造性音乐游戏的一大挑战便是确保所有内容不是围绕着音乐而发展,而是让玩家真正感受到音乐输出的力量。这与我之前所提到的“复杂性”和“再现性”有着密切的关系,所以并不只是一种单一的原理。

我们最终的目标便是保证不管何时生成音乐,都将明确地设定一个稳定的全局参数,其中包含了节奏和音调。前者较为直接,但是后者便需要我们采取更加复杂的方法。

我最引以为傲的一大音频引擎元素便是它对于音调的处理。游戏中有许多旋律库,主要是关于哈巴狗经过不同地形所发出的声音。火山将触发电子吉他;沙地将触发伊朗桑图尔琴等。这种音调的变化主要是取决于星球上的不同地理位置。

这便意味着每个星球都是一个巨大的MPC,或者是样本键盘——哈巴狗站立在任何一个位置上都将在特定的补丁上触发一种特定的声音。

这种声音非常简单,但是因为每个星球都是不同的,所以完成声音的设置却相对棘手。游戏中存在着数百万个潜在的星球,所以我们不可能为每个星球创建一个数据库。相反地,我们可以为每个星球设定一个特定的识别号码去生成能够控制相应音调的表格。

星球的ID将作为一个随机数种子,去生成一个查找表而分配该世界中每个位置所属的音调。这便是对于我们之前所阐述的相关理论的实践:这意味着星球在音调管理方面将体现出一种复杂性,即不同的星球将呈现出不同的音调;而与此同时它也仍具有再现性,因为当玩家每次回到一个特定的星球时,音调的管理将再次趋于一致。

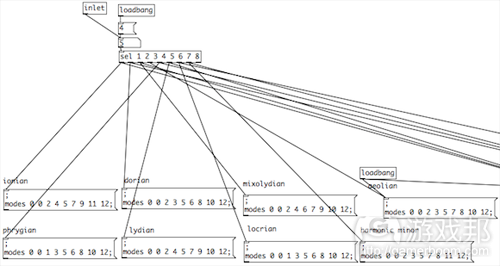

然而我并不满足于这种音乐理论。我希望每个星球都能够拥有属于自己的音乐性。也就是尽管两个星球拥有相同的地形,但是却能够呈现出不同的声音。为了做到这一点,我们便基于星球ID的随机数字而赋予每个星球一个核心节奏和调式。

这种调式(或音阶)便能够用于呈现每个星球的特定音调。你可能会获得一个传统的大音阶,传统的调和小音阶或非常奇怪的Locrian。而为了确保每个调式都能够有效地组合在一起,银河合成器便需要使用相同的调式查找表,从而能够整合每个星球中的相同音调。

整个过程都是关于我们如何为一款iOS音乐游戏创建一个强大的生成音乐引擎,即整合我们在过去几年里所明确的一些互动音乐理论。而现在我们也会以不同方式去使用这些理论而创造更多音乐游戏,甚至还会将其引入其它不同的游戏类型中。

有时候我们会发现理论与实践之间是相背离的,但是我希望你们能够通过这篇文章更好地了解我们的创作思维过程。我们将会致力于更多不同的项目,并且从外部看来它们之间可能不存在直接的联系。举个例子来说吧,虽然我们现在正在制作像《Pugs Luv Beats》等iOS游戏,但与此同时我也在为Matthew Herbert的“One Pig Live”巡回演出效力。

而这两个项目有何交集呢?我想互动和界面,特别是有关音乐的内容便是其最大的联系。也就是关于玩家与游戏,或表演者与观众间的互动,或任何其它动态性。最后我们还希望能够加深用户的理解与参与性,从而让他们更加了解游戏并更深刻地体验到游戏的乐趣。这便是我们制作游戏的主要原因。(本文为游戏邦/gamerboom.com编译,拒绝任何不保留版权的转载,如需转载请联系:游戏邦)

Making Music: Pugs Luv Beats’ Theory and Fun

by Yann Seznec

People often ask me how I got into making games. This still comes as a surprise to me, since I don’t really see myself as making games — my background is as a musician and performer — but since founding Lucky Frame four years ago after achieving a small degree of fame (or notoriety) with my Wii remote music controlling software I have found myself and my company identifying more and more with the indie game scene, which is absolutely great.

It’s brilliant, because as a musician I see an enormous amount of groundbreaking work and exciting potential in the world of interactive audio and music in games. As a musician friend of mine once pointed out to me, in many ways the most innovative things happening right now in the music world are not happening in music at all, but in music software. I have no doubt that if Bach or Coltrane or Nancarrow were alive today they would be designing generative music systems or programming new Monome patches.

This isn’t a new or revolutionary thing to say. I would argue that this shift towards technology as a creative musical tool began years ago, with composers like the Russolo brothers, who built their own insane instruments because they wanted to make cacophonous noises, and continued with Daphne Oram and her incredible Oramics machine, which enabled her to draw her compositions on film.

Conlon Nancarrow, who I just mentioned, is another really interesting example of a composer who became interested in using technology. When his compositions proved too complex for musicians to play, he began manually punching holes in piano rolls and running them through player pianos, allowing him to create enormously complex music that would otherwise have never been heard (and also making him perhaps the first person to program music).

This trend has perhaps been accelerated in recent times due to the incredible explosion of media technology in the past 20 years. Whilst super-high-end recording and production remains expensive, it is now possible for a huge population of people to record, mix, compose, and produce high quality sound at an extremely low price.

You want a synthesizer in 1980? Well, you pay $1300 for an ARP. Now, you are ridiculously spoilt for choice for free software synths (and parts are far cheaper if you want to build a hardware one!). The consequence of this is that every sound you can ever possibly imagine can be created quickly and easily and probably with free software.

Another huge revolution from a composition point of view, as Matthew Herbert often points out, is that whilst in the past composers would be forced to imitate something (Beethoven writing a flute part to emulate a nightingale, Cootie Williams’ talking trumpet), nowadays a composer can go out and record a bird for his piece, or use a recording of someone talking.

This presents a huge conundrum for composers and musicians — if everything can be done, it sort of takes some of the fun out of it. I think that’s why many composers and musicians, particularly of the electronic/digital variety, are becoming more and more interested in interface and process. How is the music made, and how does that affect the end result? That has been the philosophical focus of most of my work over the past few years, resulting in ridiculous appearances on UK reality show Dragons’ Den and more recently with my experiments using live mushrooms to control electromechanical instruments.

It was of course only a matter of time before I became interested in using games for music, since video games are perhaps the most powerful interactive interfaces around right now. Why not try and use the power of game interaction to generate music?

These are exciting times for audio and music people in the game world. The trend of Guitar Hero-style games has perhaps faded, which I see as a very good thing. While they certainly have their place (and I’m sure they will come back), those types of games revolve around players trying to recreate existing music with as much accuracy as possible (often mediated through the interface of a strange plastic representation of an instrument).

This creates what is of course a brilliantly compelling game design, but is not very exciting from an audio or music design and development standpoint. As a side note, I see an interesting parallel between this type of gaming and the old-fashioned music conservatory style of teaching music, in terms of focusing on accuracy rather than fun, musicality, or creativity.

That was our starting point for making Pugs Luv Beats, our recent release for iOS, which was (ahem) nominated for an IGF Excellence in Audio award. We wanted to make a music game that was focused on music creation, and which was powered by the generation of audio, rather than forcing the user to see music as a challenge.

Our first stab at this was with a space shooter drum machine that Jon Brodsky, the Lucky Frame coder/designer, prototyped a while back. Codenamed Space Hero, it was a system for setting up a series of enemies, which you then had to destroy with your spaceship. When the enemies arrived on screen they would trigger a drum sound, and when they were destroyed they would make a bass sound — so it was basically an editable and playable drum machine. AWESOME PROTOTYPE VIDEO ALERT!

This prototype proved to us that game design — or at least interacting with a game — could in many ways be seen as analogous to music performance and composition. Both are essentially based around a series of choices, and both in many cases involve some sort of start, end, and cycle.

As a counterpoint teacher once explained to me, once you have written a note of music, you only ever have four choices. You can play that note again, you can play that note again differently, you can change the note, or you can not play any note. Similar sets of choices inhabit the world of game design — and similar techniques for simplifying the process as well. One particularly good example is the technique used in the writing of a fugue, which involves taking one melody and overlapping it in varying ways. Check out this video to see a visualization of a Bach fugue.

You can clearly see that the first melodic line is played through once, and once it starts to develop, the same melodic line is played underneath the variations. This happens several more times throughout the piece.

I always like thinking of the four fugal melodies as being like four different characters exploring the same space, perhaps finding different power-ups that change their speed, double their power, and so on. There are many other comparisons that could be made — for one thing, that Bach visualization would make a pretty cool level builder for a platform game (file under: Future Game Ideas…)

Pugs Luv Beats is the first in a series of music games that we are making that explores this philosophy. We are trying to make music games where both the music and the game aspects are on equal footing — playing one will generate the other, and vice versa. In other words, our aim is to use compositional techniques to make games, and games to make music.

In order to make this happen, we needed to create both a gameplay that lends itself to creative exploration and a musical generation system that will provide complex, generative, but reproducible audio. Each of these things is important for different reasons.

Complexity is perhaps the most obvious — it is the idea that actions in the game which create music must also have enough depth and offer enough value that repetition will not get old. This is an odd science, because on one level, music is based on repetition and patterns, yet in games a repetitive sound can destroy an illusion — or at least become annoying.

Additionally, the musical complexity must be somewhat limited, or else the audience will lose the connection between the action and the musical result. For example, if a certain tile triggers the same sound every time the player’s character traverses it, that could get old very quickly.

However, if that tile emits a different pitch of the same sound each time, it will add a layer of musical complexity. The trade-off is a decrease in the connection between the action and the sound — a balance needs to be struck!

Generative, or procedural, audio is a bit of a buzz phrase, and there are certainly a number of different definitions floating around the internet. Thinking of it in terms of generative music frames things a bit differently, relating it closer to the world of 20th century classical music composers like Xenakis and John Cage (and arguably, jazz composers like Charles Mingus).

In that world, the concentration can be on setting up a system within which a performer can explore and generate their own music. In many ways, it is about creating constraints and borders, a framework that allows an infinitely variable set of musical possibilities.

For example, in John Cage’s pipe organ piece As Slow As Possible, the actual tempo it is meant to be played at is left undefined — meaning performance times have varied between 20 minutes and 639 years. Cage’s Fontana Mix takes this even further, as even the instrumentation is defined as “any number of tracks of magnetic tape, or for any number of players, any kind and number of instruments”. The score is a series of transparencies and paper, which when layered create patterns that direct the players. A potentially infinite number of patterns can be created.

This may all seem rather academic, but it is important to note the basic parallels between this type of music composition (often called non-deterministic, or parametric) and games. Games and sports, of course, are the epitome of setting up a system and letting the players make their own way. Each play of the game will be slightly different, and in some cases different playthroughs will result in barely recognizable endings. It’s no accident that many of these 20th century composers were fascinated by game theory.

Finally, reproducibility is essential in terms of rewarding the user. As described in the previous two paragraphs, music that is generated in a game needs to have depth, and the user needs to feel agency over its creation, but in order for something to be a game there needs to be some element of mystery. This mystery does need to be reproducible, however, or the user will not be able to understand the patterns and play with them, which is a major component of turning an object that makes noise into a musical instrument.

So how did we put all of these things into practice? Well, we made a game with dogs wearing hats. Pugs Luv Beats is a universal iOS music composition game that we released in December 2011. In the game, the player controls an alien breed of pugs. Once the masters of a wondrous and highly advanced civilization, these pugs are the victims of their own greed. They loved nothing more than to collect beats, which they cultivated with their special brand of “luv”. But an ill-advised scheme to grow the biggest beat of all time spun wildly out of control, and their home planet was destroyed. The player must help the pugs to grow more beats so they can rediscover new planets, build houses, and recover their lost technology.

We are very proud of the game, particularly since we are a tiny operation of three people. Jon Brodsky handled all of the coding, using a combination of openFrameworks, Lua, and a custom-built game engine called Blud. I did all of the audio using libpd and Pure Data, which I’ll get to shortly. Sean McIlroy is the artist and designer in the team, and he did an amazing job developing the cute, original, and oddly poignant pug characters, as well as the whole gameworld and UI. This was an enormously important aspect: as you’ll soon see, Pugs Luv Beats is in many ways a music sequencer disguised as a game, but we wanted to make sure that it looked nothing like music software.

The gameplay itself focuses on the pugs. You start out with a single pug living in a house. You need to make the pug harvest beets, which you do simply by tapping a path for the pug to run. When the pug hits a beet, it creates a musical beat (ha!) and your beet count rises. More beets let you buy another house, with another pug. Buying more pug houses lets you uncover more of the planet, and thus more beets. Collecting enough beets will also allow you to buy a brand new planet and start over again.

As you expand your universe, you will discover new terrains — these new terrains will make different sounds, letting you grow your musical palette. However, these terrains will slow your pugs down — that’s why you need to find and equip your pugs with hats and costumes. You want to go faster on snow? Find a Santa hat! How about water? Shark fin!

This approach to the game design and structure means the game is effectively endless — even once you have discovered all of the hats and costumes, you can continue to explore the universe and find new combinations of terrains. Each planet is also a separate musical world, so you can always start from scratch, or go back to your previous planets to remix and recompose — all using costumed pugs.

Behind our aesthetic styling and collection game mechanic there is a generative music engine that puts all of this theory and design into practice. Let’s delve into it a bit.

We decided pretty early on that we wanted to use Pure Data to handle all of the audio. This was made possible thanks to the hard work of Peter Brinkmann and Peter Kirn, among others, who put together libpd (go buy the new book!). Pure Data is a free and open source graphical programming interface designed mostly for audio and music geeks who don’t want to have to program everything by hand. I will go ahead and admit at this point that I fall into this category.

To expand on that — I am not a coder, and in all likelihood I never will be. I have a really hard time with text on a screen, but a graphical representation of code makes it a lot easier for me to understand.

That was what led me to Max/MSP, a commercial graphical programming system that is very popular in the contemporary art and music world. I became rather adept at Max/MSP during my studies and used it to make the Wii Loop Machine, among other things.

Pure Data is effectively a free version of Max/MSP (there is a lot more to that story, but it is heroically uninteresting), so when we had the possibility to integrate that into our projects we jumped at the chance.

Working with Pure Data provided several big advantages (beyond being free). First, it allowed us to be far more agile in our development. We are a tiny three-person team, with only one coder. Using Pure Data allowed me to handle all of the audio development, including previewing, prototyping, experimentation, and much more. I could play with the audio engine and make really complex tweaks without ever bothering Jon.

Second, we were able to take advantage of 20 years of audio research and development, rather than having to build everything ourselves. Say we want a sample-accurate wavetable playback system? Using Pure Data, we didn’t have to build it ourselves; it comes with the package. How about a reverb system? A quick Google search will turn up dozens of different reverbs made in Pure Data by researchers and developers around the world that are easily reconfigurable and adaptable to our project.

To explain a bit about how it works, the entire sound engine for Pugs Luv Beats is contained within this patch. It receives messages from the Lua/openFrameworks code that Jon put together, and sends those messages around the subpatches to generate and modify sounds. The simplest thing it does is trigger sounds, for things like menu actions, building things, etc. You can see on the far right the object “route sounds”, which is handling all of these standard sounds. Each “sounds” object contains a little subpatch that plays the sound on a trigger.
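The routing idea can be sketched outside Pure Data. The Python below is only an illustration of dispatching a message by its first word, loosely in the spirit of Pd's `route` object; it is not the game's patch, and the `Router` class and the message names `menu_click` and `build_house` are hypothetical.

```python
from typing import Callable, Dict, List

Handler = Callable[[List[str]], None]


class Router:
    """Dispatch a text message to a handler chosen by its first word."""

    def __init__(self) -> None:
        self.handlers: Dict[str, Handler] = {}

    def register(self, selector: str, handler: Handler) -> None:
        self.handlers[selector] = handler

    def send(self, message: str) -> bool:
        """Return True if a handler accepted the message, False otherwise."""
        parts = message.split()
        if not parts or parts[0] not in self.handlers:
            return False
        # Strip the selector and pass the remaining words on to the handler.
        self.handlers[parts[0]](parts[1:])
        return True


played: List[str] = []


def play_sound(args: List[str]) -> None:
    # Stand-in for a subpatch that plays one sample when triggered.
    if args:
        played.append(args[0])


router = Router()
router.register("sounds", play_sound)
router.send("sounds menu_click")   # hypothetical message from the game code
router.send("sounds build_house")  # hypothetical message from the game code
print(played)  # ['menu_click', 'build_house']
```

The point of the indirection is the one made above: the game code only needs to send a named message, and everything about what that name sounds like can change on the audio side.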

While it may seem needlessly complicated to use this system just for playing a sound, this does offer a lot more flexibility, and more importantly allows me (the sound designer) to change anything sound-related without bothering Jon (who is in all likelihood doing something insanely complicated to do with multithreading or whatever).

A slightly more complex section of the engine can be seen in what we called the Pugglesynth. The challenge here was to create a sound library for Mr. Puggles, the friendly pug who guides you through the tutorial. We wanted him to make some noise every time he popped up, but we didn’t want to use any more sound files. It needed to be funny and charming, without being repetitive. I therefore built a two oscillator subtractive synth, and every time he appears, a series of random numbers are triggered, controlling the pitch and filter frequency. Every time he disappears, however, the same two notes are played. This gives him recognizable character, without making him annoying.
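As a rough illustration of that behavior (random on the way in, fixed on the way out), here is a small Python sketch. It only produces control values and does not synthesize audio; the note ranges, cutoff range, phrase length, and the two farewell notes are all assumptions, not values from the actual patch.

```python
import random
from typing import List, Tuple

# Hypothetical fixed sign-off: the same two MIDI notes every time.
FAREWELL_NOTES: Tuple[int, int] = (67, 60)


def greeting(rng: random.Random, length: int = 4) -> List[Tuple[int, float]]:
    """Return (midi_pitch, filter_cutoff_hz) pairs, freshly randomized per call."""
    return [
        (rng.randint(48, 84), rng.uniform(200.0, 4000.0))
        for _ in range(length)
    ]


def farewell() -> Tuple[int, int]:
    """Return the fixed two-note phrase used whenever the character leaves."""
    return FAREWELL_NOTES


rng = random.Random()
print(greeting(rng))  # varies from call to call
print(farewell())     # always the same two notes
```

The fixed farewell is what carries the recognizable character; the randomized greeting is what keeps repeated appearances from wearing thin.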

Speaking of synthesizers, the galaxy synth in Pugs Luv Beats was so popular that we actually decided to spin it off into its own application altogether — Pug Synth! There’s nothing like an x-y pad synthesizer to get everyone excited. Here’s a snapshot of the patch for the Swamp synth, which features a dubstep-style filter whose rate is linked to the x axis, along with the pitch.

Rather than being smoothly linked, however, the rate is locked into multiples of the master BPM, which is also controlling a drum machine. This means your ripping pugstep is always in time with your beats. It also meant we were able to build some wicked generative drum machines, and add variations really easily, while keeping everything within a strong musical framework.
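One way to picture this locking is as quantization: the pad position picks from a short list of tempo-synced rates instead of sweeping continuously. The sketch below assumes a pad coordinate `x` in the range 0 to 1 and a hypothetical list of allowed multiples; the real patch may use different divisions.

```python
from typing import List

# Hypothetical set of allowed filter cycles per beat.
ALLOWED_MULTIPLES: List[float] = [0.25, 0.5, 1.0, 2.0, 4.0]


def locked_rate_hz(x: float, bpm: float) -> float:
    """Map pad position x in [0, 1] to a filter rate locked to the tempo."""
    x = min(max(x, 0.0), 1.0)  # clamp out-of-range touches
    # Split the pad into equal bands, one band per allowed multiple.
    index = min(int(x * len(ALLOWED_MULTIPLES)), len(ALLOWED_MULTIPLES) - 1)
    beats_per_second = bpm / 60.0
    return ALLOWED_MULTIPLES[index] * beats_per_second


# Nearby positions snap to the same rate, so the wobble stays in time.
print(locked_rate_hz(0.45, 120.0))  # 2.0
print(locked_rate_hz(0.5, 120.0))   # 2.0
print(locked_rate_hz(1.0, 120.0))   # 8.0
```

Because every possible rate is a multiple of the beat, changing the master BPM rescales the filter and the drum machine together.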

This approach, of using certain master parameters to lock everything together, is something we first employed in Pugs Luv Beats. One of the big challenges of designing a creative music game is to make sure things are controlled enough to not sound horrible, but free enough that the player feels real agency over the musical output. This relates strongly to the “complexity” and “reproducibility” that I mentioned before, and there was no single fix.

What we ended up doing was making sure that whenever music was being generated, certain global parameters were set and unchangeable, including tempo and key. The former is quite straightforward, but the latter required a more complex approach — hold on to your hats!

One of the elements of the audio engine I’m most proud of is how it handles tonality. There are a bunch of melodic sound libraries in the game, linked to different terrains that the pugs run across. Lava triggers electric guitar; sand triggers an Iranian santoor, etc. The note that is played relates to the position of the tile on the planet.

This essentially means each planet is a giant MPC, or sampling keyboard — a pug landing on a tile will always generate a certain sound at a certain pitch.

This sounds very simple, but accomplishing it was relatively tricky, because each planet is different. There are millions of potential planets in the game, and it would be impossible to have a database for each one. Instead, we are using a single identifying number for each planet to generate the tables that control the notes.

The planet’s ID is used as a random number seed, generating a lookup table that assigns musical note values to every tile on the world. This is a practical application of the theories I outlined above. The planet will have complexity, in the sense that the arrangement of musical notes will be different on every planet, but it will be reproducible, because every time you return to a specific planet the arrangement of notes will be the same.
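The seeding trick is easy to demonstrate in a few lines. This Python sketch is an illustration of the general technique rather than the game's code: the grid size, the number of scale degrees, and the function name `note_table` are assumptions.

```python
import random
from typing import List


def note_table(planet_id: int, width: int, height: int, degrees: int = 8) -> List[List[int]]:
    """Build a per-tile table of scale degrees from nothing but the planet ID."""
    # A private generator seeded with the ID always yields the same sequence,
    # so the table never has to be stored.
    rng = random.Random(planet_id)
    return [[rng.randrange(degrees) for _ in range(width)] for _ in range(height)]


first_visit = note_table(planet_id=42, width=8, height=8)
second_visit = note_table(planet_id=42, width=8, height=8)
print(first_visit == second_visit)  # True: same planet, same arrangement
```

Storing one integer per planet is enough to rebuild its whole note layout on demand, which is what makes millions of potential planets practical.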

However, the music theory geek in me was not satisfied. I wanted to make sure that every planet would have a musical character. This way, even if you have two planets with exactly the same terrain, they will inherently sound different. We accomplished this by giving each planet a core tempo as well as a musical mode, both selected using the planet’s ID as a random number seed.

The mode (or scale) would therefore be applied to all of the melodic notes played on that planet. You might get a traditional major scale, or a traditional harmonic minor scale, or a very strange (to western ears) Locrian. To make sure everything worked together, the galaxy synth is also using the same scale lookup table, so you can jam along in the same key as your planet.
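A minimal sketch of the same idea in Python: one seed picks a mode and a tempo, and a shared scale table turns scale degrees into pitches. The interval lists for the three modes are standard music theory; the tempo range, the root note of middle C, and the function names are assumptions made for illustration.

```python
import random
from typing import Dict, List, Tuple

# Semitone offsets from the root for each mode.
MODES: Dict[str, List[int]] = {
    "major": [0, 2, 4, 5, 7, 9, 11],
    "harmonic_minor": [0, 2, 3, 5, 7, 8, 11],
    "locrian": [0, 1, 3, 5, 6, 8, 10],
}


def planet_character(planet_id: int) -> Tuple[str, int]:
    """Pick a mode and a core tempo deterministically from the planet ID."""
    rng = random.Random(planet_id)
    mode = rng.choice(sorted(MODES))
    tempo = rng.randrange(80, 161, 10)  # hypothetical range: 80 to 160 BPM
    return mode, tempo


def degree_to_midi(degree: int, mode: str, root: int = 60) -> int:
    """Convert a scale degree (0 = root) to a MIDI note within the given mode."""
    steps = MODES[mode]
    octave, index = divmod(degree, len(steps))
    return root + 12 * octave + steps[index]


mode, tempo = planet_character(42)
# Tiles and the x-y synth would both go through degree_to_midi, so they agree on key.
print(mode, tempo, [degree_to_midi(d, mode) for d in range(8)])
```

Routing every melodic source through the same lookup is what keeps a jam on the synth in key with the planet underneath it.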

These things give a little glimpse into how we were able to build a really powerful generative music engine for an iOS music game that incorporates some of our theories about interactive music that we’ve been developing over the past few years. We are now developing a few more music games that try to apply these theories in different ways, using different types of games.

It can sometimes be hard to see the connection between theory and practice, but I hope this article has given some insight into the thought processes that go on at Lucky Frame. We work on a wide variety of projects, and from the outside they may seem rather unrelated. Currently, for example, we are making iOS games like Pugs Luv Beats. Additionally I am currently on tour performing with Matthew Herbert’s One Pig Live show, for which we built an interactive musical pigsty.

What links these projects together? Our philosophical interest is really on interaction and interface, particularly with music. This can mean interaction between a player and a game, or a performer and an audience, or any number of other dynamics. Ultimately we want to deepen understanding and engagement, leading to knowledge and fun. That’s a pretty great reason to make games. (source: GAMASUTRA)
