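Practical Guidelines for Optimizing Game Code

By Niklas Frykholm

Some say premature optimization is the root of all evil; others say the fix-it-later attitude to performance is turning programmers from proud “computer scientists” into despicable “script kiddies” (Gamerboom note: unskilled troublemakers who attack systems with tools written by more skilled hackers).

No one can give a definitive answer. In this article I describe my own approach: how I make sure my game systems run well without compromising other goals such as modularity, maintainability and flexibility.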

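Don’t waste time on parts that don’t matter

If you are writing a large program, no part of the code will be as fast as theoretically possible. It is reasonable to assume that every single line could be made to run a little faster.

Writing fast software is not about maximum performance everywhere; it is about good performance where it matters. If you spend three weeks optimizing a small piece of code that is called only once per frame, you have wasted those three weeks. Had you spent them on code that really matters, you could have made a significant improvement to the game’s frame rate.

There is never enough time to add every feature, fix every bug and optimize all the code. The goal should therefore always be maximum effect for minimum effort.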

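Prefer simple solutions

Simple solutions are easier to implement than complex ones, but that is only the tip of the iceberg. Their real benefits appear in the long run. Simple solutions are easier to understand, debug, maintain, port, profile, optimize, parallelize and replace. Over time, all these savings add up.

A simple solution can save so much time that the program as a whole ends up faster, even if the simple solution itself runs slower than a complex one. This is because you can spend the time saved optimizing other code, the parts that really matter.

I use complex solutions only when they are really justified. That means the complex solution must be significantly faster than the simple one (by a factor of 2 or so), and it must sit in a system that matters (one that takes a significant share of the frame time).

Of course, simplicity is in the eye of the beholder. I think arrays, POD data types and binary data blobs are simple. I don’t think 12-level inheritance hierarchies or geometric algebra are simple.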

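Consider performance when you design the system

Some people think that to avoid “premature optimization” you should design systems without any regard to performance. In this view, you just throw the pieces together and fix things later when you “optimize” the code.

I wholeheartedly disagree. Not because I love performance for its own sake, but for purely pragmatic reasons.

When you design a system, you have a clear picture of how the pieces fit together, what the requirements are and how often different functions are called. At that point it takes little extra effort to think about how the system will perform and how to set up the data structures so that it runs as fast as possible.

Conversely, suppose you build the system with no regard for performance and have to come back and “fix” it later. That is much harder. If you need to rearrange the fundamental data structures or add multithreading support, you may have to rewrite the whole system almost from scratch. By then the system is in production, so you may be constrained by its published API and by its dependencies on other systems.

Also, you cannot break any of the projects that use the system. Months have passed since the code was written, so you first have to re-learn all the thinking that went into it. The small bug fixes and feature tweaks added over time will probably be lost in the rewrite, so you start over with a fresh batch of bugs.

So by following our guideline of “maximum effect with minimum effort”, it is better to consider performance up front, simply because that takes far less effort than fixing it later.

Within reason, of course. Performance improvements made up front are easier, but we are less sure they matter in the big picture. Later, profile-guided fixes take more effort, but by then we know where to focus. As in all of life, balance is important.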

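When designing a system, I roughly estimate how many times each piece of code will run per frame and let that estimate guide the design:

*1-10 times: Performance doesn’t matter. Do whatever you want.

*100 times: Make sure it is O(n), data-oriented and cache friendly.

*1000 times: Make sure it is multithreaded.

*10000 times: Think really hard about what you are doing.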

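I also follow a few general guidelines when writing new systems:

*Put static data in immutable, single-allocation memory blobs

*Allocate dynamic data in big contiguous chunks

*Use as little memory as possible

*Prefer arrays to complex data structures

*Access memory linearly (in a cache-friendly way)

*Make sure procedures run in O(n) time

*Avoid “do-nothing” updates; keep track of active objects instead

*If the system handles many objects, support data parallelism

By now I have written so many systems in this style that following these guidelines takes little effort. I also know that doing so gives me decent baseline performance. The guidelines target the most important low-hanging fruit (algorithmic complexity, memory access and parallelization), so they give good performance for relatively little effort.

Of course, it is not always possible to follow every guideline; some algorithms really do need more than O(n) time. But when I step outside the guidelines, I know I need to stop and think things through to make sure I don’t trash the performance.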

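Use a top-down profiler to find bottlenecks

No matter how good your up-front design is, your code will spend time in unexpected places. Content creators will use your system in ways you never imagined and expose bottlenecks you never thought about. There will be bugs in your code, and some of them will cause poor performance rather than outright crashes. And there will be things you haven’t really thought through.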

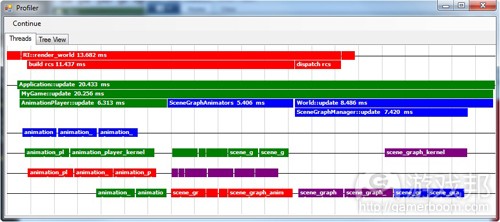

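A top-down profiler shows you where your program actually spends its time. We use explicit profiler scopes in our code and pipe the data live over the network to an external tool that can visualize it in various ways.

The top-down profiler tells you where to focus your optimization effort. Suppose you spend 60% of the frame time in the animation system and 0.5% in the GUI (graphical user interface). Then optimizing the animation system will really pay off, while nothing you do to the GUI will make a noticeable difference.

With a top-down profiler you can insert narrower and narrower profiler scopes into the code to get to the root of a performance problem, that is, where the time is actually being spent.

I use the general design guidelines to get good baseline performance for all systems. I then drill down with the top-down profiler to find the systems that need extra optimization attention.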

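Use a bottom-up profiler to find low-level optimization targets

As a general tool, I find an interactive top-down profiler with explicit scopes more useful than a bottom-up sampling profiler.

But sampling profilers have their uses. They are good at finding hotspot functions that are called from many different places and therefore don’t necessarily show up in a top-down profiler. Such hotspots can be targets for low-level, instruction-by-instruction optimization. They can also be a sign that you are doing something wrong.

For example, if strcmp() shows up as a hotspot, your program is doing something it shouldn’t and needs fixing.

A hotspot that often shows up in our code is lua_Vexecute(). This is not surprising: it is the main function of the Lua VM, a big switch statement that executes most of Lua’s opcodes. Still, it tells us that low-level, platform-specific optimizations of that function could bring real, measurable performance gains.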

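Beware of synthetic benchmarks

I don’t do much synthetic benchmarking, such as looping code 10,000 times over made-up data and measuring the execution time.

If I don’t know whether a change will make the code faster, I verify it with data from a real game. Otherwise I cannot be sure I am not just optimizing the benchmark in ways that won’t carry over to real cases.

A benchmark with 500 instances of the same entity, all playing the same animation, is very different from the same scene with 50 different unit types, all playing different animations. The data access patterns are completely different, so an optimization that improves one case may not matter at all in the other.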

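Optimization is like gardening

Programmers optimize the engine. Artists add more content.

Optimization is not an isolated activity that happens at a specific time. It is part of a system’s whole life cycle: design, maintenance and evolution. Optimization is an ongoing dialog between artists and programmers about what the engine should be capable of.

Managing performance is like tending a garden. You keep checking that everything is in order, root out the weeds and find ways to help the plants grow better.

It is the artists’ job to push the engine to its limits and the programmers’ job to pull it back and keep it running efficiently. Along the way they must find the best middle ground, where the game can truly shine.

Gamerboom note: The original article was published on December 23, 2011; all events and data are as of that date.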

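(This article was translated by Gamerboom/gamerboom.com. Reproduction without retaining the copyright notice is prohibited; to republish, please contact Gamerboom.)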

Opinion: A Pragmatic Approach to Performance

by Niklas Frykholm

December 23, 2011

Is premature optimization the root of all evil? Or is the fix-it-later attitude to performance turning programmers from proud “computer scientists” to despicable “script kiddies”?

These are questions without definite answers, but in this article I’ll try to describe my own approach to performance: how I go about ensuring that my systems run decently without compromising other goals, such as modularity, maintainability and flexibility.

Programmer Time Is A Finite Resource

If you are writing a big program, some parts of the code will not be as fast as theoretically possible. Sorry, let me rephrase. If you are writing a big program, no part of the code will be as fast as theoretically possible. Yes, I think it is reasonable to assume that every single line of your code could be made to run a little tiny bit faster.

Writing fast software is not about maximum performance all the time. It is about good performance where it matters. If you spend three weeks optimizing a small piece of code that only gets called once a frame, then that’s three weeks of work you could have spent doing something more meaningful. If you had spent it on optimizing code that actually mattered, you could even have made a significant improvement to the game’s frame rate.

There is never enough time to add all the features, fix all the bugs and optimize all the code, so the goal should always be maximum performance for minimum effort.

Don’t Underestimate The Power Of Simplicity

Simple solutions are easier to implement than complex solutions. But that’s only the tip of the iceberg. The real benefits of simple solutions come in the long run. Simple solutions are easier to understand, easier to debug, easier to maintain, easier to port, easier to profile, easier to optimize, easier to parallelize and easier to replace. Over time, all these savings add up.

Using a simple solution can save so much time that even if it is slower than a more complex solution, as a whole your program will run faster, because you can use the time you saved to optimize other parts of the code. The parts that really matter.

I only use complex solutions when it is really justified. That means the complex solution must be significantly faster than the simple one (by a factor of 2 or so), and it must be in a system that matters (one that consumes a significant percentage of the frame time).

Of course simplicity is in the eyes of the beholder. I think arrays are simple. I think POD data types are simple. I think blobs are simple. I don’t think class structures with 12 levels of inheritance are simple. I don’t think classes templated on 8 policy class parameters are simple. I don’t think geometric algebra is simple.

Take Advantage Of The System Design Opportunity

Some people seem to think that to avoid “premature optimization” you should design your systems without any regard to performance whatsoever. You should just slap something together and fix it later when you “optimize” the code.

I wholeheartedly disagree. Not because I love performance for its own sake, but for purely pragmatic reasons.

When you design a system you have a clear picture in your head of how the different pieces fit together, what the requirements are and how often different functions get called. At that point, it is not much extra effort to take a few moments to think about how the system will perform and how you can set up the data structures so that it runs as fast as possible.

In contrast, if you build your system without considering performance and have to come in and “fix it” at some later point, that will be much harder. If you have to rearrange the fundamental data structures or add multithreading support, you may have to rewrite the entire system almost from scratch. Only now the system is in production, so you may be restricted by the published API and dependencies on other systems.

Also, you cannot break any of the projects that are using the system. And since several months have passed since you (or someone else) wrote the code, you have to start by understanding all the thoughts that went into it. And all the little bug fixes and feature tweaks that have been added over time will most likely be lost in the rewrite. You will start again with a fresh batch of bugs.

So by just following our general guideline, “maximum efficiency with minimum effort”, we see that it is better to consider performance up front, simply because that requires a lot less effort than fixing it later.

Within reason, of course. The performance improvements we do up front are easier, but we are less sure that they matter in the big picture. Later, profile-guided fixes require more effort, but we know better where to focus our attention. As in all of life, balance is important.

When I design a system, I do a rough estimate of how many times each piece of code will be executed per frame and use that to guide the design:

1-10 per frame: Performance doesn’t matter. Do whatever you want.

100 per frame: Make sure it is O(n), data-oriented and cache friendly.

1000 per frame: Make sure it is multithreaded.

10000 per frame: Think really hard about what you are doing.
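The 100-executions-per-frame row can be illustrated with a minimal C++ sketch of an update that is O(n), data-oriented and cache friendly. The `MotionData` struct-of-arrays layout and the `integrate` function are hypothetical examples, not code from the article. The point is only that each field is its own contiguous array and the loop walks those arrays front to back.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical component data stored as a structure of arrays (SoA):
// each field lives in its own contiguous array, so the update loop
// below streams through memory linearly.
struct MotionData {
    std::vector<float> x, y, z;     // positions
    std::vector<float> vx, vy, vz;  // velocities
};

// One O(n) pass over all objects: no pointer chasing, no virtual calls.
void integrate(MotionData& m, float dt) {
    const std::size_t n = m.x.size();
    assert(m.y.size() == n && m.z.size() == n &&
           m.vx.size() == n && m.vy.size() == n && m.vz.size() == n);
    for (std::size_t i = 0; i < n; ++i) {
        m.x[i] += m.vx[i] * dt;
        m.y[i] += m.vy[i] * dt;
        m.z[i] += m.vz[i] * dt;
    }
}
```

Compared with an array of heap-allocated objects updated through virtual calls, this layout keeps the hot data packed together. It also makes the loop trivial to split across threads later if the object count grows.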

I also have a few general guidelines that I try to follow when writing new systems:

Put static data in immutable, single-allocation memory blobs

Allocate dynamic data in big contiguous chunks

Use as little memory as possible

Prefer arrays to complex data structures

Access memory linearly (in a cache friendly way)

Make sure procedures run in O(n) time

Avoid “do nothing” updates — instead, keep track of active objects

If the system handles many objects, support data parallelism
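The “avoid do-nothing updates” guideline can be sketched as a dense list of active object IDs with O(1) swap-and-pop removal. This assumes objects are identified by small integer IDs. `ActiveSet` is a hypothetical name, not something from the article. The sketch also follows the “prefer arrays” and “access memory linearly” guidelines, since a per-frame update only walks the contiguous `active()` array.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Marker meaning "this id is not currently in the active list".
constexpr std::uint32_t kInactive = 0xFFFFFFFFu;

// Hypothetical registry that keeps only active objects in a dense array,
// so the per-frame update never visits idle objects.
class ActiveSet {
public:
    explicit ActiveSet(std::uint32_t max_objects)
        : slot_(max_objects, kInactive) {}

    void activate(std::uint32_t id) {
        assert(id < slot_.size());
        if (slot_[id] != kInactive) return;  // already active
        slot_[id] = static_cast<std::uint32_t>(active_.size());
        active_.push_back(id);
    }

    // O(1) removal: move the last active id into the freed slot.
    void deactivate(std::uint32_t id) {
        assert(id < slot_.size());
        const std::uint32_t s = slot_[id];
        if (s == kInactive) return;  // not active
        const std::uint32_t last = active_.back();
        active_[s] = last;
        slot_[last] = s;
        active_.pop_back();
        slot_[id] = kInactive;
    }

    // The update loop iterates over this contiguous array only.
    const std::vector<std::uint32_t>& active() const { return active_; }

private:
    std::vector<std::uint32_t> slot_;    // id -> index into active_, or kInactive
    std::vector<std::uint32_t> active_;  // dense list of active ids
};
```

A per-frame update would then loop over `active()` instead of over every object, so idle objects cost nothing. Removal reorders the list. That is fine for an unordered update, but a different structure would be needed if update order mattered.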

By now I have written so many systems in this “style” that it doesn’t require much effort to follow these guidelines. And I know that by doing so I get a decent baseline performance. The guidelines focus on the most important low-hanging fruit (algorithmic complexity, memory access and parallelization), and thus give good performance for a relatively small effort.
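For the parallelization guideline, here is a minimal sketch of data parallelism with `std::thread`. The array is split into disjoint slices, and each thread updates only its own slice, so no locks are needed. `scale_parallel` is a hypothetical example. Spawning threads on every call is done only to keep the sketch short; an engine would more likely hand the slices to a persistent job system or thread pool.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

// Hypothetical sketch: apply the same O(n) update to disjoint slices of a
// contiguous array on several threads. Each thread writes only its own
// range [begin, end), so the threads never touch the same element.
void scale_parallel(std::vector<float>& values, float factor,
                    unsigned num_threads) {
    assert(num_threads > 0);
    const std::size_t n = values.size();
    const std::size_t chunk = (n + num_threads - 1) / num_threads;
    std::vector<std::thread> workers;
    for (unsigned t = 0; t < num_threads; ++t) {
        const std::size_t begin = t * chunk;
        if (begin >= n) break;  // fewer elements than threads
        const std::size_t end = std::min(n, begin + chunk);
        workers.emplace_back([&values, factor, begin, end] {
            for (std::size_t i = begin; i < end; ++i) values[i] *= factor;
        });
    }
    for (std::thread& w : workers) w.join();  // wait for every slice
}
```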

Of course it is not always possible to follow all guidelines. For example, some algorithms really require more than O(n) time. But I know that when I go outside the guidelines I need to stop and think things through, to make sure I don’t trash the performance.

Use Top-down Profiling To Find Bottlenecks

No matter how good your up front design is, your code will be spending time in unexpected places. The content people will use your system in crazy ways and expose bottlenecks that you’ve never thought about. There will be bugs in your code. Some of these bugs will not result in outright crashes, just bad performance. There will be things you haven’t really thought through.

To understand where your program is actually spending its time, a top-down profiler is an invaluable tool. We use explicit profiler scopes in our code and pipe the data live over the network to an external tool that can visualize it in various ways:

An (old) screenshot of the BitSquid Profiler

The top-down profiler tells you where your optimization efforts need to be focused. Suppose you spend 60 percent of the frame time in the animation system and 0.5 percent in the GUI. Then any optimizations you can make to the animations will really pay off, but what you do with the GUI won’t matter one iota.
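The 60 percent versus 0.5 percent comparison is an instance of what is usually called Amdahl’s law; the term is not used in the article. A small C++ helper makes the arithmetic explicit. `relative_frame_time` is a hypothetical name.

```cpp
#include <cassert>

// Amdahl's law: if a fraction `share` of the frame time is spent in one
// system and that system is made `speedup` times faster, the new frame
// time relative to the old one is (1 - share) + share / speedup.
constexpr double relative_frame_time(double share, double speedup) {
    return (1.0 - share) + share / speedup;
}
```

With the article’s numbers, `relative_frame_time(0.60, 2.0)` is 0.70, so doubling the speed of the animation system removes 30 percent of the frame. By contrast, even an infinitely fast GUI (`share = 0.005`) can never give back more than 0.5 percent.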

With a top-down profiler you can insert narrower and narrower profiler scopes in the code to get to the root of a performance problem — where the time is actually being spent.
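Explicit, nestable profiler scopes are commonly written as RAII objects in C++. The sketch below is a hypothetical, in-process version: `Profiler`, `ProfileScope` and `ProfileRecord` are invented names. Instead of streaming data live over the network to a visualizer, it simply appends timed records, each with its nesting depth, to a vector.

```cpp
#include <cassert>
#include <chrono>
#include <string>
#include <utility>
#include <vector>

// One finished scope: its name, nesting depth and elapsed time.
struct ProfileRecord {
    std::string name;
    int depth;            // 0 = outermost scope
    double milliseconds;  // wall-clock time spent inside the scope
};

// Hypothetical collector; a real tool would ship these records elsewhere.
struct Profiler {
    std::vector<ProfileRecord> records;
    int current_depth = 0;
};

// RAII scope: starts timing on construction, records on destruction.
class ProfileScope {
public:
    ProfileScope(Profiler& p, std::string name)
        : p_(p), name_(std::move(name)), depth_(p.current_depth++),
          start_(std::chrono::steady_clock::now()) {}

    ~ProfileScope() {
        const auto end = std::chrono::steady_clock::now();
        const std::chrono::duration<double, std::milli> elapsed = end - start_;
        --p_.current_depth;
        p_.records.push_back({name_, depth_, elapsed.count()});
    }

    ProfileScope(const ProfileScope&) = delete;
    ProfileScope& operator=(const ProfileScope&) = delete;

private:
    Profiler& p_;
    std::string name_;
    int depth_;
    std::chrono::steady_clock::time_point start_;
};
```

Wrapping a suspicious section in a further `ProfileScope` is the “narrower and narrower scopes” step: the new record appears one level deeper, under its parent. Records are appended in the order the scopes close, so inner scopes come before the scope that encloses them.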

I use the general design guidelines to get a good baseline performance for all systems and then drill down with the top-down profiler to find those systems that need a little bit of extra optimization attention.

Use Bottom-up Profiling To Find Low-level Optimization Targets

I find that as a general tool, interactive top-down profiling with explicit scopes is more useful than a bottom-up sampling profiler.

But sampling profilers still have their uses. They are good at finding hotspot functions that are called from many different places and thus don’t necessarily show up in a top-down profiler. Such hotspots can be a target for low-level, instruction-by-instruction optimizations. Or they can be an indication that you are doing something bad.

For example if strcmp() is showing up as a hotspot, then your program is being very very naughty and should be sent straight to bed without any cocoa.
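The article does not say what the cure for a `strcmp()` hotspot is. One common remedy, offered here as an assumption rather than as the author’s method, is to hash names once (for example at load time) and compare integers at runtime. `hash_name` and `NameId` are hypothetical names. The hash is 64-bit FNV-1a, chosen only because it is short; a real system would still need to detect collisions, for instance when building its data.

```cpp
#include <cassert>
#include <cstdint>
#include <string_view>

// 64-bit FNV-1a: XOR in each byte, then multiply by the FNV prime.
constexpr std::uint64_t hash_name(std::string_view s) {
    std::uint64_t h = 14695981039346656037ull;  // FNV-1a 64-bit offset basis
    for (char c : s) {
        h ^= static_cast<unsigned char>(c);
        h *= 1099511628211ull;                   // FNV-1a 64-bit prime
    }
    return h;
}

// Hypothetical name handle: hashed once, then compared as an integer.
struct NameId {
    std::uint64_t value;
    explicit constexpr NameId(std::string_view s) : value(hash_name(s)) {}
    constexpr bool operator==(NameId o) const { return value == o.value; }
};
```

Because `hash_name` is `constexpr`, names written as literals in code can be hashed at compile time. Runtime comparisons then cost a single integer compare instead of a byte-by-byte string walk.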

A hotspot that often shows up in our code is lua_Vexecute(). This is not surprising. That is the main Lua VM function, a big switch statement that executes most of Lua’s opcodes. But it does tell us that some low level, platform specific optimizations of that function might actually result in real measurable performance benefits.
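To make “a big switch statement that executes opcodes” concrete, here is a toy stack-machine interpreter with the same shape. It is not Lua’s instruction set or source code; `Op`, `Instr` and `execute` are invented for illustration. Every instruction a script runs passes through the one `switch`. That is why such a function tends to dominate a sampling profile, and why low-level tuning of it can pay off.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Toy opcodes, NOT Lua's: just enough to show switch-based dispatch.
enum class Op : std::uint8_t { Push, Add, Mul, Halt };

struct Instr {
    Op op;
    std::int64_t arg;  // used only by Push
};

// Executes `code` on a value stack and returns the top of the stack.
std::int64_t execute(const std::vector<Instr>& code) {
    std::vector<std::int64_t> stack;
    for (std::size_t pc = 0; pc < code.size(); ++pc) {
        const Instr& in = code[pc];
        switch (in.op) {  // the hot dispatch point
        case Op::Push:
            stack.push_back(in.arg);
            break;
        case Op::Add: {
            assert(stack.size() >= 2);
            const std::int64_t b = stack.back();
            stack.pop_back();
            stack.back() += b;
            break;
        }
        case Op::Mul: {
            assert(stack.size() >= 2);
            const std::int64_t b = stack.back();
            stack.pop_back();
            stack.back() *= b;
            break;
        }
        case Op::Halt:
            assert(!stack.empty());
            return stack.back();
        }
    }
    assert(!stack.empty());
    return stack.back();
}
```

For example, the program `Push 2, Push 3, Add, Push 4, Mul, Halt` evaluates (2 + 3) * 4 and returns 20.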

Beware Of Synthetic Benchmarks

I don’t do much synthetic benchmarking, i.e., looping the code 10 000 times over some made-up piece of data and measuring the execution time.

If I’m at a point where I don’t know whether a change will make the code faster or not, then I want to verify that with data from an actual game. Otherwise, how can I be sure that I’m not just optimizing the benchmark in ways that won’t carry over to real-world cases?

A benchmark with 500 instances of the same entity, all playing the same animation is quite different from the same scene with 50 different unit types, all playing different animations. The data access patterns are completely different. Optimizations that improve one case may not matter at all in the other.
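One rough way to see how different the two scenes are is to count how many distinct animation clips each one touches per frame. This is a crude proxy for the working set the animation update has to stream through the cache. The helpers below are hypothetical. The 50-type scene assumes that instance `i` plays clip `i % 50`, which is an assumption made only for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <unordered_set>
#include <vector>

// Number of distinct clips referenced by a scene (one clip id per instance).
std::size_t distinct_clips(const std::vector<int>& clip_per_instance) {
    std::unordered_set<int> seen(clip_per_instance.begin(),
                                 clip_per_instance.end());
    return seen.size();
}

// Synthetic case: 500 instances of one entity, all on clip 0.
std::vector<int> synthetic_scene() { return std::vector<int>(500, 0); }

// Closer to a real scene: 500 instances spread over 50 unit types,
// each type playing its own clip (instance i uses clip i % 50).
std::vector<int> mixed_scene() {
    std::vector<int> clips(500);
    for (std::size_t i = 0; i < clips.size(); ++i)
        clips[i] = static_cast<int>(i % 50);
    return clips;
}
```

The synthetic scene touches 1 clip, whose data can stay hot in cache, while the mixed scene touches 50. So the same animation code can show very different memory behavior, and a speedup measured on the first scene says little about the second.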

Optimization Is Gardening

Programmers optimize the engine. Artists put in more stuff. It has always been thus. And it is good.

Optimization is not an isolated activity that happens at a specific time. It is a part of the whole life cycle: design, maintenance and evolution. Optimization is an ongoing dialog between artists and programmers about what the capabilities of the engine should be.

Managing performance is like tending a garden, checking that everything is ok, rooting out the weeds and finding ways for the plants to grow better.

It is the job of the artists to push the engine to its knees. And it is the programmers’ job to bring it back up again, only stronger. In the process, a middle ground will be found where the games can shine as bright as possible. (Source: Gamasutra)

Fujian Public Network Security Filing No. 35020302001549
