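Analyzing the Structure and Classification of Game Audio with the IEZA Framework

GamerBoom note: The original author of this article is Sander Huiberts. He uses the IEZA (Interface, Effect, Zone, Affect) framework to classify game audio. His aim is to help designers and developers from different disciplines communicate, and to expand the borders of this emerging field.

In the field of ludology, little has been written about the structure and composition of game audio. Most of the existing literature focuses on game audio production (such as recording and mixing) and on technology (such as hardware, programming and implementation). Theoretical typologies of game audio are scarce, and a coherent framework for game audio does not yet exist.

Based on a review of existing literature and repertoire, we have designed a framework for game audio. It describes the two dimensions of game audio and the design properties covered by each dimension.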

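Introduction

Over the past 35 years, game audio has evolved dramatically. It began with crude bleeps, beeps and clicks, moved on to simple melodies, and has arrived at three-dimensional sound effects and epic orchestral soundtracks. Game audio has established itself as an indispensable part of today's computer games. It can make the player's experience more intense and exciting, and it can also convey necessary information to the player.

Although game audio has blossomed in games, theory about it is still extremely scarce. Most literature focuses on the production and implementation of game audio, such as recording techniques and sound engine design. In ludology, literature on the structure of game audio is rarer still.

Because theory is lacking, many fundamental questions remain unanswered, such as what game audio consists of and how (and why) it functions in games. The field of game studies still cannot offer a usable, coherent framework for game audio that answers them. A critical discourse on game audio would greatly benefit game designers and developers. It can help designers and developers from different disciplines communicate, and it can expand the borders of the field. A framework for game audio can also serve as a tool for research, design and education. It can deepen our understanding of game audio and reveal design possibilities that may eventually lead to new conventions in game audio.

This article explains the usable and coherent framework for game audio that we have constructed. In the first part, we review existing typologies of game audio and discuss their usefulness and design value. In the second part, we propose our own framework for game audio. We are convinced that frameworks and models can contribute to a critical discourse. Even so, we acknowledge that one definition of game audio may contradict other definitions. In the words of Katie Salen and Eric Zimmerman, such definitions "might not be necessarily wrong and which could be useful too" (2004, p.3). We agree with them that a definition is not a closed or scientific representation of "reality".

We initially focus only on a categorization of game audio within interactive computer gameplay. The term "game audio" also covers several other kinds of sound:

- sound in non-interactive parts of a game, such as the introduction movie and cutscenes;
- sound in parts that feature sound and interactivity but no gameplay, such as the main menu;
- sound used outside the game itself, such as in game trailers.

We intentionally leave these uses of audio aside for now. Other, more suitable frameworks or models may exist for analyzing them, for example film sound theory for the sound of a cutscene.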

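Typologies for game audio

Several typologies for game audio currently exist. The most common is based on three types of sound: speech, sound and music. This classification appears to derive from the game audio production workflow, since each type has its own specific production process. Award-winning game music composer Troels Follman (2004) extends this classification. He distinguishes vocalization, sound effects, ambient effects and music, and divides each category into several subcategories.

These three terms are widely used by designers in the game industry. However, the classification provides little insight into the organization of game audio, and it says even less about the function of audio in games.

Film sound is a field of knowledge closely related to game audio. A commonly known film sound categorization comes from Walter Murch. He divides sound into foreground, mid-ground and background, and each layer reflects a different level of attention that the designer intends to draw from the audience. Foreground is meant to be listened to, while mid-ground and background are more or less simply heard. Mid-ground provides context for the foreground and bears directly on the subject at hand. Background sets the scene for both. Film sound theorist Michel Chion (1994) has proposed a similar "three-stage" taxonomy.

This classification can be expected to play an important role in the recently emerged area of real-time adaptive mixing in games. Adaptive mixing revolves around dynamically focusing the player's attention on specific parts of the auditory game environment. However, these three levels of attention provide no insight into the structure and composition of game audio.

Friberg and Gardenfors (2004, p.4) propose another approach. Their categorization system is derived from how audio was implemented in three games developed within the TiM project (author's note: a project that adapts mainstream games for blind children). In their approach, audio is divided according to how sound assets are organized in the game code. Their typology consists of avatar sounds, object sounds, (non-player) character sounds, ornamental sounds and instructions.

This typology has considerable overlap between its categories; for instance, the boundary between object sounds and non-player character sounds can be blurry. It also applies only to specific game designs, and like the others it says little about the structure of sound in games.

Axel Stockburger (2003) combines the sound-type approach with how sound is organized in the game code. He also considers where in the game environment a sound originates. Based on his observation of sound in Metal Gear Solid 2, he distinguishes five categories of "sound objects": score, effect, interface, zone and speech.

Stockburger is not consistent in separating categories of sound (effect, zone, interface) from types of sound (score and speech). Nevertheless, his approach of looking at where in the game environment sound originates helps reveal an underlying structure of game audio. Three of his categories (effect, zone, interface) come very close to a framework and are therefore a good starting point. To build a coherent framework, however, categories of sound must be clearly distinguished from types of sound.

We can conclude that game theory does not yet provide a coherent framework for game audio. Current typologies say little about its structure, and designers and researchers have not yet arrived at a complete and usable definition of sound in games. In the following section, we present an alternative framework for game audio.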

游戏声音的IEZA结构

根据对现存文献和目录的评述,我们得出了另一个分类游戏声音的结构模式:IEZA结构。本结构旨在通过解释分类法的清晰组织和揭示各个类别的内容及关系,使读者更加深入地了解游戏声音。

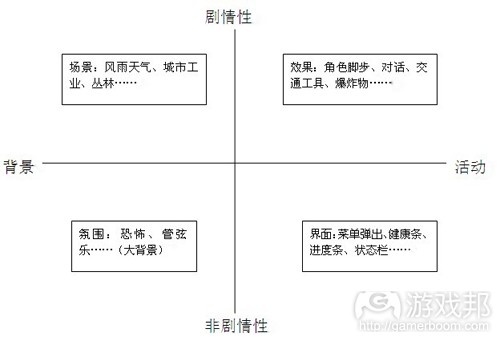

IEZA结构的分类和维数将描述在以下段落中,为了帮助读者理解,我们附上一张简图。

第一维

第一维由两部分组成,即虚拟游戏世界和非虚拟游戏世界。

在虚拟游戏世界里,游戏环境提供了来自不同声源的声音,如第一人称射击游戏中,游戏角色的脚步声、桌球游戏中的台球的撞击声、惊悚游戏中的雷雨声、冒险游戏中繁忙的餐馆中的聊天打趣声。

还有一些声音出自虚拟游戏世界外部的声源,如背景音乐、点击HUD上的按钮、敲打与HUD相关的按钮,如进度条和健康条等的声音。总之,来自游戏程序环境的声音与虚拟游戏世界的声音,处在相对不同的层面上。

Stockburger (2003)首次采用“剧情性”(diegetic)和“非剧情性”(non-diegetic)来形容环境声音的差别。这两个术语出自理论文献,但也用于电影声音理论(Chion,1994, p.73)。当这两个术语运用于游戏环境时,必须考虑到这么一个现实:游戏通常包含非剧情性元素,如在屏幕上可见的按钮、菜单和健康条。电影极少出现非剧情性视觉元素,就算有,通常也不带声音。(游戏邦注:在这里,剧情性部分可以理解为虚拟游戏世界,而非剧情性部分则指非虚拟游戏世界)

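Effect

The diegetic side of the IEZA framework contains two categories. The first is Effect. Effect sound is cognitively linked to specific sound sources within the diegetic part of the game. It is perceived as produced by, or attributed to, a source that exists in the game world, whether on-screen or off-screen. Examples include:

- the sounds of the avatar (footsteps, breathing);
- character dialog;
- weapons (gunshots, swords);
- vehicles (engines, car horns, skidding tires);
- colliding objects.

Of course, many games feature no realistic, real-world elements and therefore have no realistic sound sources; examples include Tetris, Rez and New Super Mario Bros. New Super Mario Bros. contains only a few samples of speech, from the characters Mario and Luigi. The rest of its audio consists of synthesized bleeps, beeps and plings. These non-iconic signs refer to the activity of the avatar Mario and to events and sound sources in the diegetic part of the game, so we consider them part of the Effect category as well. Effect sound generally provides an immediate response to player activity in the diegetic part of the game environment. It also gives immediate notification of events that the game triggers there.

Effect sound often mimics how sound behaves in the real world. In many games, this is the part of game audio that is processed dynamically, using techniques such as real-time volume changes, panning, filtering and acoustics.

When the terms diegetic and non-diegetic are used for games, one must acknowledge that non-diegetic information can influence the diegesis, because of interactivity. For example, a player may notice a marked change in the game's non-diegetic score and decide to proceed with caution. This changes the avatar's behavior, and with it the diegetic sound. In some cases, this trans-diegetic process must be taken into account when using the two terms. Nevertheless, diegetic and non-diegetic have more or less become the established terms in game studies for describing this particular distinction in the game environment.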

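Zone

Zone is the second category on the diegetic side. Zone sound originates from the diegetic part of the game and is linked to the environment in which the game is played. In many current games, such as Grand Theft Auto: San Andreas and FIFA 07, these environments simulate the real world. A zone can be understood as a distinct spatial setting that contains a finite number of visual and sound objects in the game environment (Stockburger, 2003, p. 6). It may be a whole level, or one of several zones that make up a level.

Game sound designers usually refer to Zone as ambient, environmental or background sound. Examples include wind and rain, city noise, industrial noise and jungle sounds. The main difference between Effect and Zone is that Zone consists chiefly of one cognitive layer of sound rather than separate, specific sound sources. In addition, in many current games Effect is directly synced to player activity and game events in the diegetic part of the game environment.

Zone sound design is usually linked to how environments sound in the real world. Zone also often provides "set noise": minimal feedback from the game world that prevents complete silence when no other sound is heard. This helps hold the player's attention, and therefore the player's immersion in the game.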

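Interface

Interface is the first category on the non-diegetic side of the IEZA framework. It consists of sound whose sources lie outside the fictional game world. Interface sound expresses activity in the non-diegetic part of the game environment, such as player activity and game events. In many games, Interface includes sounds related to the HUD, such as sounds synced to health and status bars, pop-up menus and the score display.

Interface sound is often easy to distinguish from Effect and Zone sound because it follows interface sound design conventions: ICT-like sound design that uses iconic and non-iconic signs. This is because many elements in this part of the game environment have no equivalent sound source in real life. Many games also deliberately blur the boundary between Interface and Effect by mimicking the diegetic concept. In Tony Hawk's Pro Skater 4, for example, the interface sounds consist of skateboards skidding, grinding and sliding. Here the designers project properties of the game world onto the interface sound design, but the sounds have no real (functional) relation to the game world.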

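Affect

The second category on the non-diegetic side is Affect. Affect consists of sound linked to the non-diegetic part of the game environment, specifically the part that expresses the non-diegetic setting of the game. Examples include orchestral music in an adventure game and horror sound effects in a survival horror game. Interface and Affect differ in what they convey. Interface conveys information about player activity and game-triggered events in the non-diegetic part of the game environment. Affect expresses the setting of that non-diegetic part.

Affect is a very powerful tool for designers to add or enlarge social, cultural and emotional references in a game. For example, the music in Tony Hawk's Pro Skater 4 clearly refers to a specific subculture and is meant to appeal to the game's target audience. Affect often draws on subcultures found in modern popular music, and many games also borrow from other media. Most players are familiar with film and popular music, so borrowing from them is a very effective way to express the game's setting.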

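The second dimension

As we have seen, the first dimension separates sound that belongs to the fictional game world (diegetic) from sound that does not (non-diegetic). The second dimension of the IEZA framework divides the categories by what they convey. Interface and Effect convey information about the activity of the game, while Zone and Affect convey information about its setting.

Many games are designed so that the setting is related to the activity. For example, a game may gradually change the contents of Zone and Affect according to parameters such as threat level and success rate, which the game activity controls. This also yields an insight into the responsiveness of game audio: only Interface and Effect contain sound that players can trigger directly.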

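Summary

The IEZA framework defines the structure of game audio in two dimensions. The first dimension describes the origin of game audio, and the second describes its expression.

The IEZA framework divides the game environment (and the sound it emits) into a diegetic part (Effect and Zone) and a non-diegetic part (Interface and Affect).

The IEZA framework divides the expression of game audio into the activity of the game (Interface and Effect) and the setting of the game (Zone and Affect).

Interface expresses activity in the non-diegetic part of the game environment. In many games this sound is synced with the HUD, as a response to either player activity or game activity.

Effect expresses activity in the diegetic part of the game. This sound is often synced to events in the game world, triggered either by the player or by the game itself. However, activity in the diegetic part of the game can also include sound streams, such as the sound of a continuously burning fire.

Zone expresses the setting (for example, the geographical or topological setting) of the diegetic part of the game environment. In many current games, Zone is designed with real-time adaptation so that it reflects the consequences of gameplay on the game world.

Affect expresses the setting (for example, the emotional, social or cultural setting) of the non-diegetic part of the game environment. Affect is often designed to reflect the emotional state of the game, or to anticipate upcoming events in the game.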

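Outlook

In this article we have described the fundamentals of the IEZA framework, which we developed between 2005 and 2007. The Utrecht School of the Arts in the Netherlands has used the framework for three consecutive years as an alternative tool for teaching game audio to game design and audio design students. For two successive years, we asked our students to design a simple audio game.

The framework was presented as a design method only to the students in the second year. We found that the audio games designed that year differed in three ways:

- richer sound design (more worlds and diversity);
- more understandable sound (for example, the students clearly separated Interface from Effect);
- more innovative game design (games built on audio rather than on concepts from visual game design).

The students said the framework gave them a better understanding of the structure of game audio and helped them conceptualize their audio game designs.

The framework opens many avenues for further exploration. For instance, it is interesting to examine the properties of, and relationships between, the categories. One interesting observation is that Effect and Zone essentially share an acoustic space, with similar properties and behavior. Interface and Affect, in contrast, share a different, often non-acoustic, space.

In many multiplayer games, players share only the acoustic space of Effect and Zone in real time. Such observations are valuable not only to game sound designers but also to developers of game audio engines. They also matter to designers who incorporate player sound into games. Whether or not player sound is processed with diegetic properties determines how players perceive where it comes from.

While designing with the framework, we found that the two sides suit different kinds of information. Interface and Effect are better suited to specific game information, such as data and statistics. Zone and Affect are better suited to information such as the feel of the game.

The IEZA framework is intended as a vocabulary and a tool for game audio design. By distinguishing categories, each with its own properties and characteristics, we gain insight into the mechanics of game audio. We believe the IEZA framework provides a useful typology for game audio from which future research and discussion can benefit. (This article was compiled and translated by GamerBoom/gamerboom.com; please contact GamerBoom for reprint permission.)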

IEZA: A Framework For Game Audio

by Sander Huiberts

Surprisingly little has been written in the field of ludology about the structure and composition of game audio. The available literature mainly focuses on production issues (such as recording and mixing) and technological aspects (for example hardware, programming and implementation). Typologies for game audio are scarce and a coherent framework for game audio does not yet exist.

This article describes our search for a usable and coherent framework for game audio, in order to contribute to a critical discourse that can help designers and developers of different disciplines communicate and expand the borders of this emerging field.


Based on a review of existing literature and repertoire we have formulated a framework for game audio. It describes the dimensions of game audio and introduces design properties for each dimension.

Introduction

Over the last 35 years, game audio has evolved drastically: from analogue bleeps, beeps and clicks and crude, simplistic melodies to three-dimensional sound effects and epic orchestral soundtracks. Sound has established itself as an indispensable constituent in current computer games, dynamizing as well as optimizing gameplay.

It is striking that in this emerging field, theory on game audio is still rather scarce. While most literature focuses on the production and implementation of game audio, like recording techniques and programming of sound engines, surprisingly little has been written in the field of ludology about the structure and composition of game audio.

Many fundamental questions, such as what game audio consists of and how (and why) it functions in games, still remain unanswered. At the moment, the field of game studies lacks a usable and coherent framework for game audio. A critical discourse for game audio can help designers and developers of different disciplines communicate and expand the borders of the field. It can serve as a tool for research, design and education. Its structure can provide new insights into our understanding of game audio and reveal design possibilities that may eventually lead to new conventions in game audio.

This article describes our search for a usable and coherent framework for game audio. We will review a number of existing typologies for game audio and discuss both their usability for the field of ludology and their value for game audio designers. We will then propose an alternative framework for game audio. We are convinced that frameworks and models can contribute to a critical discourse. Still, we acknowledge that one definition of game audio might contradict other definitions, which, in the words of Katie Salen and Eric Zimmerman, “might not be necessarily wrong and which could be useful too” (2004, p.3). We agree with their statement that a definition is not a closed or scientific representation of “reality”.

We initially focus on a useful categorization of game audio within the context of interactive computer game play only. The term “game audio” also applies to several other contexts:

- sound during certain non-interactive parts of the game, for instance the introduction movie and cutscenes;
- parts of the game that feature sound and interactivity but no gameplay, like the main menu;
- applications of game audio completely outside the context of the game, such as game music that invades the international music charts and sound for game trailers.

We intentionally leave out the use of audio in these contexts for the moment. There might be other, more suitable frameworks or models to analyze audio in each of these contexts, for example film sound theory for an analysis of sound in a cutscene.

Typologies for game audio

Several typologies and classifications for game audio exist in the field. The most common classification is based on three types of sound: speech, sound and music. It seems to derive from the workflow of game audio production, since each of these three types has its own specific production process. Award-winning game music composer Troels Follman (2004) extends this classification. He distinguishes vocalization, sound-FX, ambient-FX and music, and even divides each category into multiple subcategories.

Although these three terms are widely used by many designers in the game industry, a classification based on the three types of sound does not specifically provide insight into the organization of game audio. It also says very little about the functionality of audio in games.

A field of knowledge that is closely related to game audio is that of film sound. A commonly known film sound categorization comes from Walter Murch in Weis and Belton (1985, p. 357). Sound is divided into foreground, mid-ground and background, each describing a different level of attention intended by the designer. Foreground is meant to be listened to, while mid-ground and background are more or less simply heard. Mid-ground provides a context to foreground and has a direct bearing on the subject at hand, while background sets the scene for it all. Others, such as film sound theorist Michel Chion (1994), have introduced similar “three-stage” taxonomies.

We foresee that this classification can play an important role in the recently emerged area of real-time adaptive mixing in games. Adaptive mixing revolves around dynamically focusing the attention of the player on specific parts of the auditory game environment. However, these three levels of attention provide no insight into the structure and composition of game audio.
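Murch's three attention levels map naturally onto a ducking rule in an adaptive mixer. The sketch below illustrates the idea under assumed values. The `Tier` enum, the `mix_gains` function and the -6 dB / -12 dB duck amounts are all hypothetical; they do not come from the article or from any particular audio engine.

```python
from __future__ import annotations

from enum import Enum


class Tier(Enum):
    """Murch's three levels of intended attention."""
    FOREGROUND = 0
    MIDGROUND = 1
    BACKGROUND = 2


# Hypothetical duck amounts in dB, applied only while a foreground sound plays.
DUCK_DB = {Tier.FOREGROUND: 0.0, Tier.MIDGROUND: -6.0, Tier.BACKGROUND: -12.0}


def db_to_linear(db: float) -> float:
    """Convert a decibel offset to a linear amplitude factor."""
    return 10 ** (db / 20)


def mix_gains(active: set[Tier]) -> dict[Tier, float]:
    """Return a linear gain per tier for the current frame.

    If any foreground sound is active, mid-ground and background are ducked
    so the foreground is what gets 'listened to'. Otherwise every tier
    plays at unity gain.
    """
    focus = Tier.FOREGROUND in active
    return {t: db_to_linear(DUCK_DB[t]) if focus else 1.0 for t in Tier}


gains = mix_gains({Tier.FOREGROUND, Tier.BACKGROUND})
print({t.name: round(g, 3) for t, g in gains.items()})
# prints {'FOREGROUND': 1.0, 'MIDGROUND': 0.501, 'BACKGROUND': 0.251}
```

A real adaptive mixer would also ramp these gains over time instead of switching them per frame. That smoothing is omitted here to keep the tier logic visible.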

Friberg and Gardenfors (2004, p.4) suggest another approach, namely a categorization system according to the implementation of audio in three games developed within the TiM project. In their approach, audio is divided according to the organization of sound assets within the game code. Their typology consists of avatar sounds, object sounds, (non-player) character sounds, ornamental sounds and instructions.

The categories of this typology overlap considerably; for instance, the distinction between object sounds and non-player character sounds can be rather ambiguous. Besides this, the approach applies only to specific game designs, and it says very little about the structure of sound in games.

Axel Stockburger (2003) combines the approach of sound types with how sound is organized in the game code. He also looks at where in the game environment sound originates. Based on his observation of sound in the game Metal Gear Solid 2, Stockburger differentiates five categories of “sound objects”: score, effect, interface, zone and speech.

Although Stockburger is not consistent when describing categories of sound on one hand (zone, effect, and interface) and types of sound on the other (score [or music] and speech), the approach of looking at where in the game environment sound is emitted can help distinguish an underlying structure of game audio. The three categories of sound (effect, zone, interface) are very close to a framework and therefore a good starting point. But in order to develop a coherent framework, a clear distinction between categories of sound and types of sound is needed.

We may conclude that the field of game theory does not yet provide a coherent framework for game audio. Current typologies say little about the structure of game audio. Designers and researchers have not yet arrived at a definition of sound in games that is complete, usable and more than only a typology. In the following section we will present an alternative framework for game audio.

The IEZA framework for audio in games

Based on our review of literature and repertoire, we have formulated a framework that uses an alternate approach to classify game audio: the IEZA framework. Its primary purpose is to refine insight into game audio. It does so by providing a coherent organization of categories and by exposing the various properties of, and relations between, these categories.

The categories and dimensions of the IEZA framework will be described in the following paragraphs and are represented in the following illustration:

Figure: The IEZA framework

The first dimension

On one hand, the game environment provides sound that represents separate sound sources from within the fictional game world, for example the footsteps of a game character in a first-person shooter, the sounds of colliding billiard balls in a snooker game, the rain and thunder of a thunderstorm in a survival horror game and the chatter and clatter of a busy restaurant setting in an adventure game.

On the other hand, there is sound that seemingly emanates from sound sources outside of the fictional game world. Examples include a background music track, the clicks and bleeps when pressing buttons in the Heads Up Display (HUD), and sound related to HUD elements such as progress bars and health bars and to events such as score updates. In other words, this is sound originating from a part of the game environment that is on a different ontological level from the fictional game world.

Stockburger (2003) was the first to describe this distinction in the game environment, using the terms diegetic and non-diegetic. These two terms originate from literary theory, but are used in film sound theory as well (for instance by Chion (1994, p.73)). When they are applied to game environments, one has to consider that games often contain non-diegetic elements like buttons, menus and health bars that are visible on screen (see note 4). Film rarely features non-diegetic visuals, and even when it does, these visuals are not often accompanied by sound.

The diegetic side of the framework

Effect

The diegetic side of the IEZA framework consists of two categories. In the first category, named Effect, audio is found that is cognitively linked to specific sound sources belonging to the diegetic part of the game. This part of game audio is perceived as being produced by or is attributed to sources, either on-screen or off-screen, that exist within the game world. Common examples of the Effect category in current games are the sounds of the avatar (i.e. footsteps, breathing), characters (dialog), weapons (gunshots, swords), vehicles (engines, car horns, skidding tires) and colliding objects.

Of course, many games do not feature such realistic, real-world elements and therefore have no realistic sound sources. Examples are games such as Tetris, Rez and New Super Mario Bros. The latter features only a few samples of speech (that of the characters Mario and Luigi), while the rest of the audio consists of synthesized bleeps, beeps and plings. These non-iconic signs refer to the activity of the avatar Mario and to events and sound sources within the diegetic part of the game, so we consider them part of the Effect category. Sound of the Effect category generally provides an immediate response to player activity in the diegetic part of the game environment. It also provides immediate notification of events and occurrences that the game triggers in the diegetic part of the game environment.

Sound of the Effect category often mimics the realistic behavior of sound in the real world. In many games it is the part of game audio that is dynamically processed using techniques such as real-time volume changes, panning, filtering and acoustics.
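The real-time processing mentioned above can be illustrated for a single Effect source. This is a minimal stereo sketch that rests on assumptions not found in the article: a 2D world, a listener facing +y, inverse-distance attenuation and constant-power panning. The function names are hypothetical, and filtering and acoustics are left out.

```python
from __future__ import annotations

import math


def distance_gain(distance: float, ref_distance: float = 1.0) -> float:
    """Inverse-distance attenuation; sources closer than ref_distance
    are not amplified above unity."""
    return ref_distance / max(distance, ref_distance)


def constant_power_pan(azimuth_deg: float) -> tuple[float, float]:
    """Map an azimuth in [-90, 90] degrees (left to right) to (left, right)
    gains whose squares always sum to 1."""
    a = max(-90.0, min(90.0, azimuth_deg))
    theta = (a + 90.0) / 180.0 * (math.pi / 2)  # 0 = hard left, pi/2 = hard right
    return math.cos(theta), math.sin(theta)


def render_effect(listener: tuple[float, float],
                  source: tuple[float, float]) -> tuple[float, float]:
    """Stereo gains for one Effect source.

    Assumes the listener faces +y with +x to the right. Sources behind
    the listener are mirrored to the front, because plain stereo cannot
    express front/back.
    """
    dx = source[0] - listener[0]
    dy = source[1] - listener[1]
    azimuth = math.degrees(math.atan2(dx, dy))  # 0 = ahead, +90 = right
    if azimuth > 90.0:
        azimuth = 180.0 - azimuth
    elif azimuth < -90.0:
        azimuth = -180.0 - azimuth
    gain = distance_gain(math.hypot(dx, dy))
    left, right = constant_power_pan(azimuth)
    return gain * left, gain * right


# Footsteps two units straight ahead: centred and attenuated to half level.
print(render_effect((0.0, 0.0), (0.0, 2.0)))
# A gunshot three units to the right: almost entirely in the right channel.
print(render_effect((0.0, 0.0), (3.0, 0.0)))
```

Constant-power panning is used here because it keeps perceived loudness roughly steady as a source moves across the stereo field. It is one common choice among several panning laws.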

Note 4: When the terms diegetic and non-diegetic are used in the context of games, one has to acknowledge that non-diegetic information can influence the diegesis, because of interactivity. For example, a player controlling an avatar can decide to take caution when noticing a change in the non-diegetic musical score of the game. This results in a change of behavior of the avatar in the diegetic part of the game. In some cases, this trans-diegetic process needs to be taken into account when using the terms diegetic and non-diegetic. Yet diegetic and non-diegetic have more or less become the established terms within the field of game studies to describe this particular distinction in the game environment.

Zone

The second category, Zone, consists of sound sources that originate from the diegetic part of the game and which are linked to the environment in which the game is played. In many games of today, like Grand Theft Auto: San Andreas and FIFA 07, such environments are a virtual representation of environments found in the real world. A zone can be understood as a different spatial setting that contains a finite number of visual and sound objects in the game environment (Stockburger, 2003, p. 6). It might be a whole level in a given game, or part of a set of zones constituting the level.

Sound designers in the field often refer to Zone as ambient, environmental or background sound. Auditory examples include weather sounds of wind and rain, city noise, industrial noise or jungle sounds. The main difference between the Effect and Zone category is that the Zone category consists chiefly of one cognitive layer of sound instead of separate specific sound sources. Also, in many of today’s games, the Effect category is directly synced to player activity and game events in the diegetic part of the game environment.

Sound design of the Zone category is generally linked to how environments sound in our real world. Zone also often offers “set noise”, minimal feedback of the game world, to prevent complete silence in the game when no other sound is heard. The attention (and therefore immersion in the game) of the player can benefit from this functionality.

The non-diegetic side of the framework

Interface

The first category of the non-diegetic side of the IEZA framework, Interface, consists of sound that represents sound sources outside of the fictional game world. Sound of the Interface category expresses activity in the non-diegetic part of the game environment, such as player activity and game events. In many games Interface contains sounds related to the HUD (Heads Up Display) such as sounds synced to health and status bars, pop-up menus and the score display.

Sound of the Interface category often distinguishes itself from sound belonging to the diegetic part of the game (Effect and Zone) because of interface sound design conventions: ICT-like sound design using iconic and non-iconic signs. This is because many elements of this part of the game environment have no equivalent sound source in real life. Many games intentionally blur the boundaries of Interface and Effect by mimicking the diegetic concept. In Tony Hawk’s Pro Skater 4, Interface sound instances consist of the skidding, grinding and sliding sounds of skateboards. Designers choose to project properties of the game world onto the sound design of Interface, but there is no real (functional) relation with the game world.

Affect

The second category of the non-diegetic side of the framework, Affect, consists of sound that is linked to the non-diegetic part of the game environment and specifically that part that expresses the non-diegetic setting of the game. Examples include orchestral music in an adventure game and horror sound effects in a survival horror game. The main difference between Interface and Affect is that the Interface category provides information of player activity and events triggered by the game in the non-diegetic part of the game environment, while the Affect category expresses the setting of the non-diegetic part of the game environment.

The Affect category is a very powerful tool for designers to add or enlarge social, cultural and emotional references in a game. For instance, the music in Tony Hawk’s Pro Skater 4 clearly refers to a specific subculture and is meant to appeal to the target audience of this game. The Affect category often draws on the affects of subcultures found in modern popular music, and many games also draw on the affects of other media. Because most players are familiar with media such as film and popular music, borrowing from them is a very effective way to bring in the intrinsic value of those affects.

The second dimension of the framework

As we have seen, the first dimension distinguishes categories that belong to the game world (diegetic) from those that do not (non-diegetic). But there is also a second dimension. The right side of the IEZA framework (Interface and Effect) contains categories that convey information about the activity of the game. The left side (Zone and Affect) contains categories that convey information about the setting of the game.

Many games are designed in such a way that the setting is somehow related to the activity, for example, by gradually changing the contents of Zone and Affect according to parameters such as level of threat and success rate, which are controlled by the game activity. We also gain an insight concerning the responsiveness of game audio: only the right side of the framework contains sound that can be directly triggered by the players themselves.
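The two dimensions are independent, so the framework can be read as a 2 x 2 lookup. The sketch below shows one possible encoding; the article does not prescribe it, and the enum and function names are hypothetical. It also encodes the responsiveness observation that only the activity side can be triggered directly by the player.

```python
from enum import Enum


class Origin(Enum):
    """First dimension: where the sound comes from."""
    DIEGETIC = "diegetic"          # inside the fictional game world
    NON_DIEGETIC = "non-diegetic"  # outside the fictional game world


class Expression(Enum):
    """Second dimension: what the sound expresses."""
    ACTIVITY = "activity"  # right side of the framework
    SETTING = "setting"    # left side of the framework


# The four IEZA categories as the cells of a 2 x 2 grid.
IEZA = {
    (Origin.NON_DIEGETIC, Expression.ACTIVITY): "Interface",
    (Origin.DIEGETIC, Expression.ACTIVITY): "Effect",
    (Origin.DIEGETIC, Expression.SETTING): "Zone",
    (Origin.NON_DIEGETIC, Expression.SETTING): "Affect",
}


def categorize(origin: Origin, expression: Expression) -> str:
    """Look up the IEZA category for a sound's origin and expression."""
    return IEZA[(origin, expression)]


def directly_player_triggerable(category: str) -> bool:
    """Only the activity side (Interface, Effect) holds sound that
    players can trigger directly."""
    return category in {"Interface", "Effect"}


# Examples taken from the article's own descriptions of each category.
examples = {
    "footsteps of the avatar": (Origin.DIEGETIC, Expression.ACTIVITY),
    "rain and wind ambience": (Origin.DIEGETIC, Expression.SETTING),
    "health bar bleep": (Origin.NON_DIEGETIC, Expression.ACTIVITY),
    "orchestral score": (Origin.NON_DIEGETIC, Expression.SETTING),
}
for name, dims in examples.items():
    cat = categorize(*dims)
    print(f"{name}: {cat}, player-triggerable={directly_player_triggerable(cat)}")
```

Keeping origin and expression as separate enums, rather than listing four flat labels, makes the two dimensions explicit. This matches how the framework is built.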

Summary

* The IEZA framework defines the structure of game audio as consisting of two dimensions. The first dimension describes a division in the origin of game audio. The second dimension describes a division in the expression of game audio.

* The IEZA framework divides the game environment (and the sound it emits) into diegetic (Effect and Zone) and non-diegetic (Interface and Affect).

* The IEZA framework divides the expression of game audio into activity (Interface and Effect) and setting (Zone and Affect) of the game.

* The Interface category expresses the activity in the non-diegetic part of the game environment. In many games of today this is sound that is synced with activity in the HUD, either as a response to player activity or as a response to game activity.

* The Effect category expresses the activity in the diegetic part of the game. Sound is often synced to events in the game world, either triggered by the player or by the game itself. However, activity in the diegetic part of the game can also include sound streams, such as the sound of a continuously burning fire.

* The Zone category expresses the setting (for example the geographical or topological setting) of the diegetic part of the game environment. In many games of today, Zone is often designed in such a way (using real-time adaptation) that it reflects the consequences of game play on a game’s world.

* The Affect category expresses the setting (for example the emotional, social and/or cultural setting) of the non-diegetic part of the game environment. Affect is often designed in such a way (using real-time adaptation) that it reflects the emotional status of the game or that it anticipates upcoming events in the game.
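The real-time adaptation described for Zone and Affect can be sketched as a crossfade between layers, driven by a game parameter such as the level of threat. The sketch assumes two pre-authored layers (calm and tense), an equal-power crossfade and one-pole smoothing. `AdaptiveLayer` and its parameters are hypothetical and are meant only as an illustration.

```python
from __future__ import annotations

import math


def crossfade(threat: float) -> tuple[float, float]:
    """Equal-power crossfade between a calm and a tense layer.

    threat is clamped to [0, 1]: 0 plays only the calm layer, 1 only the tense one.
    """
    t = min(1.0, max(0.0, threat))
    return math.cos(t * math.pi / 2), math.sin(t * math.pi / 2)


class AdaptiveLayer:
    """Hypothetical Zone or Affect layer that follows a game parameter
    gradually instead of jumping to it."""

    def __init__(self, smoothing: float = 0.1) -> None:
        self.smoothing = smoothing  # fraction of the remaining gap closed per update
        self.threat = 0.0

    def update(self, target_threat: float) -> tuple[float, float]:
        """Move the smoothed threat toward the target and return (calm, tense) gains."""
        self.threat += self.smoothing * (target_threat - self.threat)
        return crossfade(self.threat)


layer = AdaptiveLayer(smoothing=0.5)
for frame in range(3):
    calm, tense = layer.update(1.0)  # the game reports maximum threat
    print(f"frame {frame}: threat={layer.threat:.3f} calm={calm:.3f} tense={tense:.3f}")
```

The smoothing step lets the setting follow the activity gradually, so the music does not flip at the first sign of danger. A larger `smoothing` value makes the layer react faster.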

Discussions and future work

In this article we have described the fundamentals of the IEZA framework, which we developed between 2005 and 2007. The framework has been used at the Utrecht School of the Arts (in the Netherlands) for three consecutive years as an alternative tool to teach game audio to game design students and audio design students. For two successive years we gave our students the assignment to design a simple audio game.

The framework was presented as a design method only to the students of the second year. We found that the audio games developed in the second year differed in three ways:

- richer sound design (more worlds and diversity);
- more understandable sounds (for instance, the students made a clear separation between Interface and Effect);
- more innovative game design (games based on audio instead of game concepts based on visual game design).

The students indicated that the framework gave them a better understanding of the structure of game audio and that this helped them conceptualize their audio game designs.

The framework offers many avenues for further exploration. For instance, it is interesting to look at the properties of, and the relationships between, the different categories. One example is the observation that Effect and Zone in essence share an acoustic space (with similar properties and behavior). Interface and Affect, by contrast, share a different, often non-acoustic, space.

In many multiplayer games, only the acoustic space of Effect and Zone is shared by players in real time. Such observations can be valuable not only for a game sound designer but also for a developer of a game audio engine. They are also relevant for designers incorporating player sound in games, because whether or not player sound is processed with diegetic properties defines how players perceive the origin of that sound.
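The multiplayer observation suggests a routing rule for a game audio engine. The sketch below is a hypothetical illustration. It assumes one shared, spatialised "world" bus plus one local 2D bus per client. The bus names, `SoundEvent` and `route` were invented for this example and do not belong to any real engine.

```python
from dataclasses import dataclass

# Effect and Zone share one acoustic space; Interface and Affect do not.
SHARED_ACOUSTIC_SPACE = {"Effect", "Zone"}


@dataclass
class SoundEvent:
    name: str
    category: str  # "Interface", "Effect", "Zone" or "Affect"
    owner: int     # id of the player whose game state produced the sound


def route(event: SoundEvent, listener: int) -> str:
    """Hypothetical routing rule for one listening client.

    Diegetic sound goes to the shared, spatialised world bus that every
    player hears. Non-diegetic sound stays on its owner's local 2D bus
    and is not heard by other players.
    """
    if event.category in SHARED_ACOUSTIC_SPACE:
        return "world-3d"
    return "local-2d" if event.owner == listener else "not heard"


events = [
    SoundEvent("gunshot", "Effect", owner=1),
    SoundEvent("score update", "Interface", owner=1),
    SoundEvent("player voice, processed diegetically", "Effect", owner=1),
]
for e in events:
    print(e.name, "->", route(e, listener=2))
```

Under this rule, a player's voice tagged as Effect is placed in the shared world, so other players perceive it as coming from the avatar. Non-diegetic voice chat would need its own broadcast bus, which the sketch does not model.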

An insight we discovered while designing with the framework is that the right side (Interface and Effect) is more suited to convey specific game information such as data and statistics, whereas the left side (Zone and Affect) is more suited to convey game information such as the feel of the game.

The IEZA framework is intended as a vocabulary and a tool for game audio design. By distinguishing different categories, each with specific properties or characteristics, insight is gained into the mechanics of game audio. We believe the IEZA framework provides a useful typology for game audio from which future research and discussions can benefit. (Source: Gamasutra)