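Risk and Reward Mechanism Design in Games, Viewed Through the ‘Hot Hand’ Phenomenon

Gamerboom note: The author of the original paper is Paul Williams, who is undertaking a PhD in cognitive psychology. In the paper he discusses the connection between game design and the psychological phenomenon known as the ‘hot hand’, and argues that studying the hot hand in depth can guide the design of risk and reward mechanisms in games.

Abstract: This paper studies the psychological phenomenon known as the ‘hot hand’ by exploring the risk and reward structure of a game designed to investigate it. The expression ‘hot hand’ comes from basketball and from the common belief that a player on a scoring streak is somehow more likely to score on the next shot than the player's long-term record would suggest. Many people believe that athletes in many sports show such streaks, yet a large body of evidence discredits the belief. One explanation for the gap between belief and data is that players on a successful run, buoyed by growing confidence, are willing to take greater risks. We investigate this possibility by developing a top-down shooter. Such a game has unique design requirements, including a well-balanced risk and reward structure that rewards players equally whatever tactics they adopt. We describe the iterative development of this top-down shooter, including a quantitative analysis of how players adjust their risk taking under varying reward structures, and we discuss what the findings imply for general principles of game design.

Keywords: risk, reward, hot hand, game design, cognition, psychology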

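Introduction

Balancing risk and reward is an important consideration when designing computer games. A good risk and reward structure can add a great deal of entertainment value, and it has been likened to the thrill of gambling. When players gamble on a strategy they accept certain odds and a certain amount of risk, just as they do when betting. As in betting, it is reasonable to expect that greater risk will be compensated by greater reward. Adams not only states that “A risk must always be accompanied by a reward” but also regards this as a fundamental rule of computer game design.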

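Many game design books also discuss the importance of balancing risk and reward in a game:

·“The reward should match the risk.”

·Create dilemmas that are more complex, in which players must weigh the potential outcomes of each move in terms of risks and rewards.

·Giving players the choice between playing it safe for a low reward and taking a risk for a big reward is a great way to make a game interesting and exciting.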

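Risk and reward matter in other domains too, such as stock-market trading and sport. In the stock market, risk and return always influence investment choices. Some investors favour a risky investment in, say, nanotechnology stocks, because the high risk may bring high returns. Others are more conservative and invest in federal bonds, which fluctuate less and so offer a lower return but also less risk. In sport, basketball players sometimes attempt more difficult, and therefore riskier, shots from long distance because those shots are worth three points.

Psychologists, cognitive scientists, economists and others are interested in the factors that affect human choices among options with different risk and reward structures. However, stock markets and sports arenas are noisy environments, which makes it hard for both players and researchers to isolate the risk and reward of any single event. Computer games offer an excellent platform for studying, in a well-controlled environment, how risk and reward affect player behaviour. We examine risk and reward from the perspectives of both cognitive science and game design, and we believe the two are complementary. Psychology can inform game design, and an appropriately designed game can be a useful tool for studying psychological phenomena.

This paper discusses the iterative, player-centric development of a top-down shooter that can be used to investigate the ‘hot hand’. Although the focus is on the process of designing a risk and reward structure that meets the design requirements of a hot-hand game, we begin with an overview of the phenomenon and the current state of research. In the sections that follow we describe three stages of game design and development. In the final section we relate our findings to more general principles of game design.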

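The Hot Hand

The expression ‘hot hand’ comes from basketball and describes the common belief that a player who has just scored is more likely to score on the next shot (Gamerboom note: that is, the player is thought to be on a hot streak, and this belief is known as the ‘hot hand effect’). In a survey of 100 basketball fans, 91% believed that a player had a better chance of making a shot after hitting the previous two or three shots than after missing the previous few.

Although these beliefs and predictions seem intuitively reasonable, seminal research found no evidence of the hot hand in the field-goal shooting data of the 1980-81 Philadelphia 76ers, or in the free-throw shooting data of the 1980-81 and 1981-82 Boston Celtics. Subsequent studies across a range of sports confirmed this surprising finding, which suggests that hot and cold streaks in performance could be an illusion.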

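However, the results of previous hot-hand investigations reveal a more complicated picture. They suggest a clear distinction between tasks of fixed difficulty and tasks of variable difficulty. Free-throw shooting in basketball is a good example of a fixed-difficulty task.

In this kind of shooting the distance is constant, so every shot has the same level of difficulty. In a variable-difficulty task, such as field shooting during a basketball game, players may adjust the level of risk from shot to shot, so the difficulty of a shot changes with shooting distance, defensive pressure, and the overall game situation.

Evidence suggests that players can get on hot streaks in fixed-difficulty tasks such as horseshoe pitching, billiards, and ten-pin bowling. In variable-difficulty tasks such as baseball, basketball, and golf, however, there is no evidence of hot or cold streaks, contrary to common belief.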

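The most common explanation for the mismatch between popular belief and actual data is that people tend to misread patterns in small runs of numbers. We form a pattern from a cluster of a few events, such as a player making three shots in a row, and then use that pattern to predict what happens next. In basketball, after a run of three successful shots, people wrongly believe that the next shot is more likely to succeed than the player's long-term average would suggest. This is the hot-hand fallacy.

A different explanation suggests that shooters tend to take greater risks during a run of success, with no loss of accuracy. In that case a player really does perform better during a hot streak, because the player is attempting a more difficult task at the same level of accuracy. This increase in performance may be reflected in hot-hand predictions, yet it would not be detected by traditional measures of shooting performance. The distinction between fixed and variable difficulty lends this account tentative support, because the hot hand is more likely to appear in fixed-difficulty tasks, where players cannot switch to a more difficult shot. Only further study can establish whether the hypothesis holds.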

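Unfortunately, trying to gather more data on the hot hand from sporting contests inevitably runs into problems of subjectivity. How can one judge that a given shot is more difficult than another? How can one tell whether a player has adopted a riskier approach?

A good way to overcome this problem is to design a computer game of variable difficulty that accurately records changes in player strategy. Such a game could answer a key question for both psychology and game design: how do players respond to a run of success or failure in a game?

The development of this ‘hot hand game’ is the focus of this paper. The game requires a finely tuned risk and reward structure, and the process of tuning that structure offers empirical insight into players' risk-taking behaviour. At each stage of development we tested how players responded to the risk and reward structure, analysed the results in terms of player strategy and performance, and used the analysis to inform the next stage of design.

This kind of design is iterative and player-centric. Although the game design described here is simple, the precision demanded by the psychological investigation meant that our player testing was more formal than is usual in game development. As a result we could evaluate changes in player strategy precisely, and we found that even small changes to the risk and reward structure affected players' risk-taking strategy.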

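Game Requirements and Basic Design

First and foremost, a hot-hand game requires a finely tuned risk and reward structure. It must have several (5-7) well-balanced risk levels so that players are willing to adjust their level of risk in response to success and failure. If, for example, one risk level paid substantially more than the others, players would learn this over time and would be unlikely to change strategy afterwards. We therefore want each risk level to be equally rewarding for the average player. In other words, whatever level of risk a player adopts, the chance of obtaining the best score should be about the same.

The second requirement is that the game lets us examine player strategy after both successes and failures. If players fail most of the time, we cannot record enough runs of success. If players succeed most of the time, we cannot observe enough runs of failure. The core challenge therefore needs to provide an average probability of success somewhere in the range of 40-60%.

The game that met these requirements was a top-down shooter developed in Flash using ActionScript. Any simple action game based on a physical challenge with hit-or-miss scoring would have suited our purposes, but a top-down shooter has several advantages. First, people are very familiar with the style, so players pick it up quickly, which helps us use the game to collect experimental data. Second, the key difficulty parameters (target speed and acceleration) are simple to code, so the reward structure can be manipulated easily and precisely. Finally, a ‘shot’ in a top-down shooter is analogous to a ‘shot’ in basketball, with the same outcomes of ‘hit’ and ‘miss’. This ties the current experiment to the origins of the hot hand.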

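In the top-down shooter, the player's goal is to shoot down as many alien spaceships as possible within a fixed amount of time. The total number of shots taken and the number of hits therefore depend on the player's performance and strategy. The game screen shows two spaceships, representing the alien and the player's shooter (Figure 1). A simple interface shows the current number of kills and the time remaining. During the game the player's ship stays stationary at the bottom centre of the screen. Only one alien ship appears at any time. It moves horizontally back and forth across the top of the screen, bouncing back each time it hits the right or left edge. The player shoots at the alien by pressing the spacebar and has only one shot at each new alien ship. Each alien destroyed earns the player one kill.

Each alien ship enters from the top of the screen and moves at random towards the left or right edge. It bounces off each side of the screen, moving horizontally, and makes eight passes in total before flying off. The alien moves swiftly at first but decelerates at a constant rate, so each pass is slower than the last. The game therefore presents a variable-difficulty task: the player can choose a level of risk, because the shot becomes easier with each pass of the alien.

For the player, the risk and reward equation is quite simple. The score for a hit is the same whenever the player fires. Because the goal is to destroy as many aliens as possible in a game period, the player benefits from shooting as early as possible: an early hit earns both a kill and more time to shoot at subsequent aliens. However, because the alien slows with each of its eight passes, the earlier the player fires, the harder it is to hit. If a shot misses, the player incurs a 1.5-second time penalty. That is, the next alien appears only after a 1.5-second delay, on top of the interval that follows an accurate shot.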

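Stage One: Player Fixation

After testing the game ourselves, we deployed it online. We recruited five players through an email sent to students, family and friends. Players were asked to shoot down as many aliens as possible within a given time. They first played a practice level for 6 minutes and then a competition level for 12 minutes. Because strategy and accuracy differed between players, the number of alien ships they encountered also differed. A player could expect to meet roughly 10 alien ships in every 60 seconds of play. At the end of the game, the player's response time and accuracy were recorded for every alien ship.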

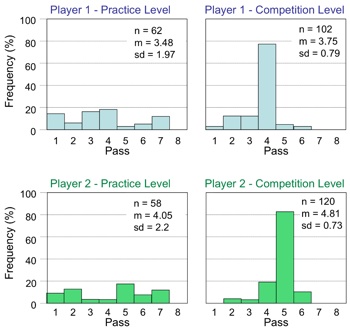

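One of the game requirements was that players take shots across a range of difficulty levels, represented by passes (later passes mean easier shots). This simple test checks whether players are willing to explore the search space and change their risk-taking behaviour over the course of the game. Figure 2 shows results for Players 1 and 2. Players tended to be very exploratory during the practice level, as shown by the spread of shots between pass one and pass eight. In the competition level, however, players tended to adopt a single strategy, as shown by the large spikes in Figure 2. This suggests that, after a period of exploration, players tried to maximise their score by firing on a single, fixed pass.

Figure 2: Results for two players in Stage one of game development. The upper row shows data for Player 1 and the lower row shows data for Player 2. The left column shows the frequency of shots on each pass in the practice level; the right column shows the frequency of shots on each pass in the competition level. In the practice block the shots are spread evenly across passes, as the left panels show, but in the competition block players adopted a fixed strategy, as shown by the spikes in the right panels. In each panel, n is the total number of shots attempted by the player, m is the mean firing pass, and sd is the standard deviation of the attempted shots.

In experimental terms, this fixation on a single strategy is known as ‘investment’. At the end of the game players reported that, because the alien decelerated at a constant rate, they could always hit it by firing on the same pass when it was a specific distance from the edge. Players thus practised a timing strategy specific to one alien pass (that is, one difficulty level). For that player, the number of kills per unit time was always highest when shooting on that same pass. In the example graphs (Figure 2), one player ‘invested’ in pass four and the other in pass five. This kind of investment runs counter to one requirement of a hot-hand game and exposed a major design flaw that had to be fixed in the next iteration.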

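Stage Two: Encouraging Exploratory Play

The aim of the second stage of design was to overcome player investment in a single strategy. Our proposed solution was to vary the position of the player's ship so that it no longer appeared at the same location in the centre of the screen but was shifted at random to the left or right of centre each time a new alien appeared (Figure 3). On each trial, the shooter's position was sampled from a uniform distribution spanning 100 pixels to the left or right of centre. The manipulation was intended to stop players learning a single timing sequence that always worked on one pass (such as always firing on pass four when the alien was a fixed distance from the edge of the screen).

Figure 3: The screen in Stage two of game development. The blue rectangle is shown here only to indicate the range within which the player's ship could be randomly positioned. It did not appear in the actual game.

We again deployed an online version of the game and recorded data from six players. As before, they played a practice level for 6 minutes and then a competition level for 12 minutes.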

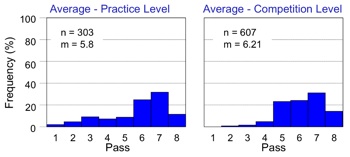

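Figure 4 shows the results for each player in the competition level. Introducing random variation into the player's firing position markedly reduced the tendency to invest in a single pass. The larger variance in Figure 4 compared with Figure 2 highlights this drop in investment. A slight change to the game therefore had a substantial effect on player behaviour, encouraging players to change their risk-taking strategy during play. The change also helps to meet the requirements for hot-hand investigation.

Figure 4: Individual player results for the competition level in Stage two testing. The smaller spikes and larger variance show that, compared with Stage one, players were much less inclined to fire on a single pass in the competition level. In each panel, n is the total number of shots attempted by the player, m is the mean firing pass, and sd is the standard deviation of the attempted shots.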

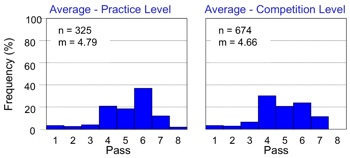

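Figure 5 presents data averaged across all players for the practice and competition levels. The averages highlight how the game's reward structure influenced player strategy. The left column shows the practice level (not shown in Figure 4) and the right column shows the competition level.

Figure 5: Average player results for Stage two. The left column shows the frequency (%) of shots on each pass in the practice level; the right column shows the frequency (%) of shots on each pass in the competition level. In each panel, n is the total number of shots attempted by all players in that block and m is the mean firing pass. Comparing the practice and competition levels highlights that players fired later as the game progressed.

Figure 5 shows that players' shooting strategy changed in a predictable way as the game progressed. The mean firing pass in the practice level (m = 5.8) was lower than in the competition level (m = 6.21), so players tended to fire later in the competition level. This suggests that the game's reward structure was biased towards later passes and that players adjusted their play as they became familiar with it.

To minimise this bias for hot-hand investigation, we examined the risk and reward structure on the basis of average player performance. We were particularly interested in the probability of success on each pass and in how that probability translated into reward. Firing on later passes takes more time but comes with a higher hit rate. Because the aim of the hot-hand game is to hit as many aliens as possible in 12 minutes, the hit rate and the time taken are both important to the reward structure.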

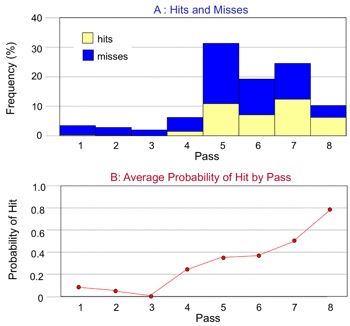

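We then analysed how many kills the average player would make in a 12-minute block by consistently firing on one specific pass for every alien that appeared. For example, given the observed hit rate on pass one, how many kills would a player make by shooting only on pass one? How many on pass two, and so on for the later passes? Figure 6 shows the results. Figure 6A shows the average number of shots taken on each pass (the overall height of each bar) and the number of hits on each pass (the height of the yellow part of the bar). Figure 6B uses these data to plot the probability of success and shows that it is higher on later passes. This confirms empirically that later passes are in fact ‘easier’ in a psychological sense.

Figure 6: Averaged results and model predictions from Stage two of game development. In Panel A, the overall height of each bar shows the frequency of shots attempted. The heights of the yellow and blue parts show the proportions of hits and misses. Panel B shows the average probability of a hit on each pass, given the total number of shots attempted. Based on the empirical results, Panels C and D predict the number of hits if a player were to fire on only one pass throughout the game.

These probabilities let us estimate the total number of kills the average player would achieve in a full 12-minute block by firing on only one pass. Plotting the expected number of kills for each pass gives an optimal-strategy curve for the current game, shown in Figure 6C. The curve is monotonically increasing: the average player's total kills rise as the pass number rises. In other words, players taking easier shots are expected to make more hits. The reward structure is clearly biased towards later passes, which explains the change in player strategy (firing later) during the game. As players became familiar with the reward structure, their strategy shifted towards later, easier shots.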

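Firing on pass 8 can be regarded as an exploit of the game. Figure 6C shows that consistently firing on pass 8 yields the most kills, so it becomes the dominant strategy. Having settled on it, players are less inclined to fire earlier during a run of hits. The design therefore still did not meet the requirements of a hot-hand game.

One simple adjustment solves the problem: reduce the time penalty after a missed shot. The penalty was 1.5 seconds, which left room to adjust it within the reward structure. Players who fire on earlier passes take more shots and therefore also miss more often, so reducing the penalty for each miss effectively increases the reward for firing on early passes.

Figure 6D shows the predicted number of kills for the average player in 12 minutes when the penalty is reduced from 1.5 seconds to 0.25 seconds. This seemingly small adjustment balances the reward structure so that players are rewarded more evenly (Gamerboom note: at least from pass 3 to pass 8). The accuracy estimates for passes 1 and 2 are based on a small number of trials, which makes them hard to model; players may have avoided these earlier shots because the alien was moving too fast. Even so, allowing players to fire anywhere from pass 3 to pass 8 gives enough range for our hot-hand investigation.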
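Why a shorter miss penalty favours early passes can be illustrated with a small calculation. The sketch below is not the authors' model: it assumes a simplified cost per alien (time until the shot plus the penalty on a miss), and the hit probabilities and shot times are hypothetical values chosen only for illustration.

```python
BLOCK_SECONDS = 12 * 60  # length of the competition block


def kills_per_block(p_hit, seconds_to_shot, miss_penalty):
    """Expected kills when always firing on one pass (simplified model)."""
    seconds_per_alien = seconds_to_shot + (1.0 - p_hit) * miss_penalty
    return BLOCK_SECONDS * p_hit / seconds_per_alien


def penalty_gain(p_hit, seconds_to_shot, old_penalty=1.5, new_penalty=0.25):
    """Ratio of expected kills after vs. before the penalty change."""
    return (kills_per_block(p_hit, seconds_to_shot, new_penalty)
            / kills_per_block(p_hit, seconds_to_shot, old_penalty))


if __name__ == "__main__":
    # Hypothetical strategies: an early, risky pass and a late, safe pass.
    early = {"p_hit": 0.25, "seconds_to_shot": 3.0}
    late = {"p_hit": 0.70, "seconds_to_shot": 8.0}
    print(f"early-pass gain: {penalty_gain(**early):.2f}x")
    print(f"late-pass gain:  {penalty_gain(**late):.2f}x")
```

Under these assumed numbers the early-pass strategy gains more from the shorter penalty than the late-pass strategy, because it misses more often and is quicker per alien, so each miss weighs more heavily on it.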

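Stage Three: Balancing Risk and Reward

In Stage two we identified an exploit in the risk and reward structure: players performed best by firing on the alien's 8th pass. We believe this is what drove players to fire on later passes. Modelling player performance from the empirical data indicated that lowering the time penalty to 0.25 seconds would solve the problem.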

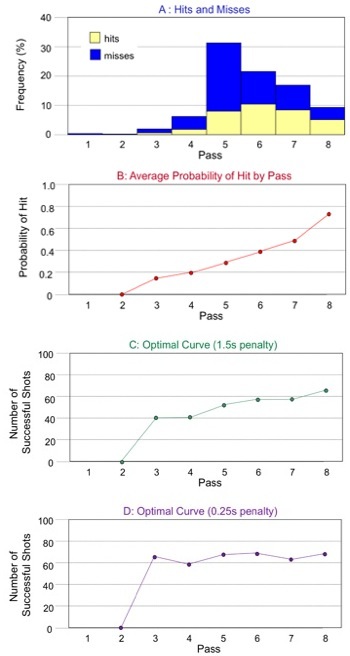

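In the revised online game the penalty was 0.25 seconds, and we recorded data from five players. The averaged results show that players fired on roughly the same passes in the practice and competition levels (Figure 7). This contrasts with Figure 4, which highlights players' tendency to fire on later passes during the 12-minute competition level. The data support the choice of a 0.25-second penalty and confirm that changing the reward structure can influence player behaviour.

Figure 7: Average player results for Stage three. The left panel shows the frequency of shots on each pass in the practice level; the right panel shows the frequency of shots on each pass in the competition level. In each panel, m is the mean firing pass and n is the total number of shots in that block. The mean firing pass shows that, under a balanced reward structure, players no longer tried to delay their shots.

The premise of our hot-hand game is that every risk level should offer the average player an equivalent reward (total kills). By balancing the reward structure, the Stage three design began to meet the requirements for studying the hot hand.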

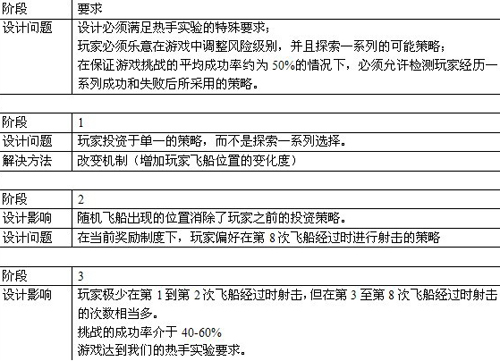

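Finally, we required an overall level of difficulty at which players' shots succeed 40-60% of the time. Performance in this range lets us compare player strategies after runs of both success and failure, that is, to test for hot and cold streaks. Figure 8 shows that the overall probability of success does meet this criterion: the overall hit rate was 43%. The game therefore now satisfies the conditions needed to study the hot hand.

Figure 8: Average results for the competition level in Stage three of game development. In Panel A, the overall height of each bar shows the frequency of shots attempted on each pass. Hits and misses are shown in yellow and blue. Panel B shows the average probability of a successful shot on each pass, given the total number of shots. In Panel B, ps is the overall probability of success (hit rate).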

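Conclusion

To study the hot-hand phenomenon we developed a computer game as a research tool for observing how players' risk taking responds to runs of success and failure.

We designed a simple top-down shooter in which an alien ship passes back and forth across the screen eight times and the player has a single shot at it. The player faces this same challenge many times. The goal is to shoot down as many aliens as possible within a set period. As the ship slows, the risk of the shot falls. A player who destroys the alien on an early pass is rewarded with a kill, and a new alien appears immediately. A missed shot is penalised with an additional waiting time.

As a hot-hand game, it has to meet specific risk and reward criteria. Players need to explore a range of risk strategies and to be rewarded evenly across risk levels. We also wanted the challenge to have an average success rate roughly equal to its failure rate, between 40% and 60%, so that the game could be used to collect data on how players behave after both success and failure.

To achieve this we developed the game iteratively over three stages. At each stage we collected empirical data by testing an online version of the game and analysed player strategy and performance. At each subsequent stage we adjusted the game mechanics to balance them further and meet the specific requirements of a hot-hand game. Table 1 summarises the design changes and their effects.

Table 1: Summary of the design changes at each stage and their effect on the requirements of the hot-hand experiment.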

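Game design books often describe game design as an iterative process. Iteration lets designers resolve unforeseen problems in later stages of development. Because game mechanics are not fully clear at the outset and emerge only as the game is built and played, iteration is especially important for refining a game. Salen and Zimmerman describe this iterative process as “play-based” design and stress the importance of “playtesting and prototyping”. To reach their goals, developers often have to build a succession of prototypes. We did begin with high-level requirements, and we used this same approach of iterative refinement and prototyping to improve our gameplay.

The main difference in our approach is that we measured player strategy and exploratory behaviour more formally at each design stage. Given how specific our game requirements were, subjective feedback alone could not have supported the fine adjustments we needed to make to the game mechanics. In the first round of testing, for example, we found that players tended towards a single strategy. Further analysis showed that players could easily raise their total kills by firing on the alien's last pass, which is a potential exploit of the game.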

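Exploits are often debated in the games community, and exploitation has frequently been studied in psychology. The trade-off between exploitation and exploration arises in many domains, where external and internal conditions determine the strategy an individual adopts to maximise gain or minimise loss. When foraging for food, for example, the distribution of resources matters. Clumped resources lead to concentrated exploitation of nearby, resource-rich locations, whereas scattered resources lead to exploration of the space.

Hills et al. show that exploration and exploitation strategies also compete in mental spaces, depending on the reward for the desired information and the cost of searching for it. In our game environment, the strategy of always firing in the easiest condition (the alien's 8th pass) always produced the highest reward. This encouraged players to delay their shots and in turn discouraged them from exploring other strategies (firing earlier). Without collecting empirical data from players, we could not have predicted this outcome.

Another advantage of collecting empirical data is that it allowed us to change the reward structure on the basis of measured player performance. In Stages one and two, each missed alien cost the player 1.5 seconds. In Stage three, after analysing player performance, we lowered the penalty to 0.25 seconds. This small adjustment was enough to change player behaviour and encourage players to take the risk of firing earlier. Our game is very simple in nature, yet it demonstrates both the difficulty and the importance of designing a well-balanced risk and reward structure.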

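Another design principle common to the games literature is player-centric design, which Adams defines as a design philosophy in which the designer envisions the type of player the game is meant for. Even so, some argue that game design is usually based on the designer's own experience. Bringing players into the design process also tends to involve more subjective feedback, such as the focus groups and interviews widely used in usability design. We found that, even for a very simple game challenge, using empirical data to measure how players respond to the game and how they perform is an important part of balancing the gameplay.

We recognise that the approach has drawbacks: averaging performance across players may hide important differences between them. An ideal model of the player would be welcome, but no such player exists. In fact, the question of who ‘the player’ is remains contested, which is why empirical data need to be collected from different groups of players. If the differences between players are large, designers may need to sample separate groups, such as casual and hardcore players.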

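The game design we have now completed meets the requirements for studying the hot hand. The game may help answer the following questions:

1. How do players respond to a run of success or failure in a game challenge?

2. If players are on a hot streak, do they take on more difficult challenges?

3. If players are on a cold streak, do they reduce their risk?

4. How does this variable level of risk affect overall measures of player performance?

5. How can hot-hand principles be applied to the design of game mechanics?

We believe the answers will interest psychologists and will also advance game design. Designers could, for example, induce a hot streak to make players more inclined to take risks or explore new strategies. A cold streak could likewise be used to discourage a player's current strategy. Such mechanics could steer players' hot and cold streaks unobtrusively, without breaking their concentration. Further exploration of the hot hand should matter for both psychological research and game design. (Compiled by Gamerboom/gamerboom.com; please credit Gamerboom when reposting.)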

Paul Williams is undertaking a PhD in Cognitive Psychology at the University of Newcastle, under the supervision of Dr. Ami Eidels. He is interested in developing online gaming platforms suitable for the investigation of cognitive phenomena, and is currently focused on refining and implementing a novel paradigm to study the behavioral phenomenon known as the “hot hand.”

Balancing Risk and Reward to Develop an Optimal Hot-Hand Game

Abstract

This paper explores the issue of player risk-taking and reward structures in a game designed to investigate the psychological phenomenon known as the ‘hot hand’. The expression ‘hot hand’ originates from the sport of basketball, and the common belief that players who are on a scoring streak are in some way more likely to score on their next shot than their long-term record would suggest. There is a widely held belief that players in many sports demonstrate such streaks in performance; however, a large body of evidence discredits this belief. One explanation for this disparity between beliefs and available data is that players on a successful run are willing to take greater risks due to their growing confidence. We are interested in investigating this possibility by developing a top-down shooter. Such a game has unique requirements, including a well-balanced risk and reward structure that provides equal rewards to players regardless of the tactics they adopt. We describe the iterative development of this top-down shooter, including quantitative analysis of how players adapt their risk taking under varying reward structures. We further discuss the implications of our findings in terms of general principles for game design.

Key Words: risk, reward, hot hand, game design, cognitive, psychology

Introduction

Balancing risk and reward is an important consideration in the design of computer games. A good risk and reward structure can provide a lot of additional entertainment value. It has even been likened to the thrill of gambling (Adams, 2010, p. 23). Of course, if players gamble on a strategy, they assume some odds, some amount of risk, as they do when betting. On winning a bet, a person reasonably expects to receive a reward. As in betting, it is reasonable to expect that greater risks will be compensated by greater rewards. Adams not only states that “A risk must always be accompanied by a reward” (2010, p. 23) but also believes that this is a fundamental rule for designing computer games.

Indeed, many game design books discuss the importance of balancing risk and reward in a game:

* “The reward should match the risk” (Thompson, 2007, p.109).

* “… create dilemmas that are more complex, where the players must weigh the potential outcomes of each move in terms of risks and rewards” (Fullerton, Swain, & Hoffman, 2004, p.275).

* “Giving a player the choice to play it safe for a low reward, or to take a risk for a big reward is a great way to make your game interesting and exciting” (Schell, 2008, p.181).

Risk and reward matter in many other domains, such as stock-market trading and sport. In the stock market, risks and rewards affect choices among investment options. Some investors may favour a risky investment in, say, nano-technology stocks, since the high risk is potentially accompanied by high rewards. Others may be more conservative and invest in solid federal bonds which fluctuate less, and therefore offer less reward, but also offer less risk. In sports, basketball players sometimes take more difficult and hence riskier shots from long distance, because these shots are worth three points rather than two.

Psychologists, cognitive scientists, economists and others are interested in the factors that affect human choices among options varying in their risk-reward structure. However, stock markets and sport arenas are ‘noisy’ environments, making it difficult (for both players and researchers) to isolate the risks and rewards of any given event. Computer games provide an excellent platform for studying, in a well-controlled environment, the effects of risk and reward on players’ behaviour.

We examine risk and reward from both cognitive science and game design perspectives. We believe these two perspectives are complementary. Psychological principles can help inform game design, while appropriately designed games can provide a useful tool for studying psychological phenomena.

Specifically, in the current paper we discuss the iterative, player-centric development (Sotamaa, 2007) of a top-down shooter that can be used to investigate the psychological phenomenon known as the ‘hot hand’. Although the focus of this paper is on the process of designing risk-reward structures to suit the design requirements of a hot-hand game, we begin with an overview of this phenomenon and the current state of research. In subsequent sections we describe three stages of game design and development. In our final section we relate our findings back to more general principles of game design.

The Hot Hand

The expression ‘hot hand’ originates from basketball and describes the common belief that players who are on a streak of scoring are more likely to score on their next shot. That is, they are on a hot streak or have the ‘hot hand’. In a survey of 100 basketball fans, 91% believed that players had a better chance of making a shot after hitting their previous two or three shots than after missing their previous few shots (Gilovich, Vallone, & Tversky, 1985).

While intuitively these beliefs and predictions seem reasonable, seminal research found no evidence for the hot hand in the field-goal shooting data of the 1980-81 Philadelphia 76ers, or the free-throw shooting data of the 1980-81 and 1981-82 Boston Celtics (Gilovich et al., 1985). With few exceptions, subsequent studies across a range of sports confirm this surprising finding (Bar-Eli, Avugos, & Raab, 2006) – suggesting that hot and cold streaks of performance could be a myth.

However, results of previous hot hand investigations reveal a more complicated picture. Specifically, previous studies suggest that a distinction can be made between tasks of ‘fixed’ difficulty and tasks of ‘variable’ difficulty. A good example of a ‘fixed’ difficulty task is free-throw shooting in basketball. In this type of shooting the distance is kept constant, so each shot has the same difficulty level. In a ‘variable’ difficulty task, such as field shooting during the course of a basketball game, players may adjust their level of risk from shot-to-shot, so the difficulty of the shot varies depending on shooting distance, the amount of defensive pressure, and the overall game situation.

Evidence suggests it is possible for players to get on hot streaks in fixed difficulty tasks such as horseshoe pitching (Smith, 2003), billiards (Adams, 1996), and ten-pin bowling (Dorsey-Palmenter & Smith, 2004). In variable difficulty tasks, however, such as baseball (Albright, 1993), basketball (Gilovich et al., 1985), and golf (Clark, 2003a, 2003b, 2005), there is no evidence for hot or cold streaks – despite the common belief to the contrary.

The most common explanation for the disparity between popular belief (hot hand exists) and actual data (lack of support for hot hand) is that humans tend to misinterpret patterns in small runs of numbers (Gilovich et al., 1985). That is, we tend to form patterns based on a cluster of a few events, such as a player scoring three shots in a row. We then use these patterns to help predict the outcome of the next event, even though there is insufficient information to make this prediction (Tversky & Kahneman, 1974). In relation to basketball shooting, after a run of three successful shots, people would incorrectly believe that the next shot is more likely to be successful than the player’s long term average. This is known as the hot-hand fallacy.

A different explanation for this disparity suggests shooters tend to take greater risks during a run of success, for no loss of accuracy (Smith, 2003). Under this scenario, a player does show an increase in performance during a hot streak – as they are performing a more difficult task at the same level of accuracy. This increase in performance may in turn be reflected in hot hand predictions, but it would not be detected by traditional measures of performance. While this hypothetical account receives tentative support by drawing a distinction between fixed and variable difficulty tasks (as the hot hand is more likely to appear in fixed-difficulty tasks, where players cannot engage in a more difficult shot), this hypothesis requires further study.

Unfortunately, trying to gather more data to investigate the hot hand phenomenon from sporting games and contests is fraught with problems of subjectivity. How can one assess the difficulty of a given shot over another in basketball? How can one tell if a player is adopting an approach with more risk?

An excellent way to overcome this problem is to design a computer game of ‘variable’ difficulty tasks that can accurately record changes in player strategies. Such a game can potentially answer a key question relevant to both psychology and game design – how do people (players) respond to a run of success or failure (in a game challenge)?

The development of this game, which we call a ‘hot hand game’, is the focus of this paper. Such a game requires a finely tuned risk and reward structure, and the process of tuning this structure provides a unique empirical insight into players’ risk-taking behaviour. At each stage of development we test the game to measure how players respond to the risk and reward structure. We then analyse these results in terms of player strategy and performance and use this analysis to inform our next stage of design.

This type of design could be characterised as iterative and player-centric (Sotamaa, 2007). While the game design in this instance is simple, due to the precise requirements of the psychological investigation, player testing is more formal than might traditionally be used in game development. Consequently, changes in player strategy can be precisely evaluated. We find that even subtle changes to risk and reward structures affect players’ risk-taking strategy.

Game Requirements and Basic Design

A hot hand game that addresses how players respond to a run of success or failure has special requirements. First and foremost, the game requires a finely-tuned risk and reward structure. The game must have several (5-7), well-balanced risk levels, so that players are both able and willing to adjust their level of risk in response to success and failure. If, for example, one risk level provides substantially more reward than any other, players will learn this reward structure over time, and be unlikely to change strategy throughout play. We would thus like each risk level to be, for the average player, equally rewarding. In other words, regardless of the level of risk adopted, the player should have about the same chance of obtaining the best score.

The second requirement for an optimal hot hand game is that it allows measurement of players’ strategy after runs of both successes and failures. If people fail most of the time, we will not record enough runs of success. If people succeed most of the time, we will not observe enough runs of failure. Thus, the core challenge needs to provide a probability of success, on average, somewhere in the range of 40-60%.

The game developed to fulfil these requirements was a top-down shooter developed in Flash using ActionScript. While any simple action game based on a physical challenge with hit-miss scoring could be suitably modified for our purposes, a top-down shooter holds several advantages. Firstly, high familiarity with the style means the learning period for players is minimal, supporting our aims of using the game for experimental data collection. Secondly, the simple coding of key difficulty parameters (i.e. target speeds and accelerations) allows the reward structure to be easily and precisely manipulated. Lastly, a ‘shot’ in a top-down shooter is analogous to a ‘shot’ in basketball, with similar outcomes of ‘hit’ and ‘miss’. This forms a clear and identifiable connection between the current experiment and the origins of the hot hand.

In the top-down shooter, the goal of the player is to shoot down as many alien spaceships as possible within some fixed amount of time. This means the number of overall shots made, as well as the number of hits, depend on player performance and strategy. The game screen shows two spaceships, representing an alien and the player-shooter (Figure 1). The simple interface provides feedback about the current number of kills and the time remaining. During the game the player’s spaceship remains stationary at the bottom centre of the screen. Only a single alien spaceship appears at any one time. It moves horizontally back-and-forth across the top of the screen, and bounces back each time it hits the right or left edges. The player shoots at the alien ship by pressing the spacebar. For each new alien ship the player has only a single shot with which to destroy it. If an alien is destroyed the player is rewarded with a kill.

Figure 1: The playing screen.

Each alien craft enters from the top of the screen and randomly moves towards either the left or right edge. It bounces off each side of the screen, moving horizontally and making a total of eight passes before flying off. Initially the alien ship moves swiftly, but it decelerates at a constant rate, moving more slowly after each pass. This game therefore represents a variable difficulty task; a player can elect a desired level of risk as the shooting task becomes less difficult with each pass of the alien.

The risk and reward equation is quite simple for the player. The score for destroying an alien is the same regardless of when the player fires. Since the goal is to destroy as many aliens as possible in the game period, the player would benefit from shooting as quickly as possible; shooting in the early passes rewards the player with both a kill and more time to shoot at subsequent aliens. However, because the alien ship decelerates during each of the eight passes, the earlier a player shoots the less likely this player will hit the target. If a shot is missed, the player incurs a 1.5 second time penalty. That is, the next alien will appear only after a 1.5 second delay which is additional to the interval experienced for an accurate shot.
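The timing behind this trade-off can be sketched numerically. The example below is a minimal illustration rather than the authors' ActionScript: it assumes the alien slows continuously at a constant rate, and the screen width, initial speed, and deceleration are hypothetical values chosen only so that all eight passes complete.

```python
import math

# Hypothetical parameters; the paper does not report the actual values.
SCREEN_WIDTH = 550.0    # px travelled per pass (assumed)
INITIAL_SPEED = 1200.0  # px/s when the alien starts moving (assumed)
DECELERATION = 100.0    # px/s^2, constant (assumed)
PASSES = 8


def pass_end_times(width=SCREEN_WIDTH, v0=INITIAL_SPEED,
                   a=DECELERATION, passes=PASSES):
    """Return the time (s) at which each pass ends.

    Distance covered after t seconds under constant deceleration is
    d(t) = v0*t - a*t**2/2, so pass k ends when d(t) = k*width.
    """
    times = []
    for k in range(1, passes + 1):
        disc = v0 * v0 - 2.0 * a * k * width
        if disc < 0:
            raise ValueError("alien stops before completing all passes")
        times.append((v0 - math.sqrt(disc)) / a)
    return times


def pass_durations(end_times):
    """Duration of each pass, derived from the cumulative end times."""
    starts = [0.0] + end_times[:-1]
    return [end - start for start, end in zip(starts, end_times)]


if __name__ == "__main__":
    ends = pass_end_times()
    for k, (end, dur) in enumerate(zip(ends, pass_durations(ends)), start=1):
        print(f"pass {k}: ends at {end:.2f} s, lasts {dur:.2f} s")
```

Each pass lasts longer than the one before it, which is what makes a later shot both easier and more expensive in time.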

Stage One–Player Fixation

After self-testing the game, we deployed it so that it could be played online. Five players were recruited via an email circulated to students, family and friends. Players were instructed to shoot down as many aliens as possible within a given time block. They first played a practice level for six minutes before playing the competitive level for 12 minutes. The number of alien ships a player encountered varied depending on the player’s strategy and accuracy. A player could expect to encounter roughly 10 alien ships for every 60 seconds of play. At the completion of the game the player’s response time and accuracy were recorded for each alien ship.

Recall that one of the game requirements was that players take shots across a range of difficulty levels, represented by passes (later passes mean less difficult shots). This simple test provides evidence that a player is willing to explore the search space and alter her or his risk-taking behaviour throughout the game. Typical results for Players 1 and 2 are shown in Figure 2. In general, players tended to be very exploratory during the practice level of the game, as indicated by a good spread of shots between alien passes one and eight. During the competitive game time, however, players tended to invest in a single strategy, as indicated by the large spikes seen in the competition levels of Figure 2. This suggests that players, after an exploratory period, attempted to maximise their score by firing on a single, fixed pass.

Figure 2: Results for two typical players in Stage one of game development. The upper row shows data for Player 1, and the bottom row shows data for Player 2. The left column presents the frequency (%) of shots taken on each pass in the practice level, while the right column indicates the frequency (%) of shots taken on each pass in the competition level. Note that players experimented during the practice level, as evidenced by evenly spread frequencies across passes in the left panels, but then adopted a fixed strategy during the competitive block, as evidenced by spikes at pass 4 (Player 1) and pass 5 (Player 2). For each panel, n is the overall number of shots attempted by the player in that block, m is the mean firing pass, and sd is the standard deviation of the number of attempted shots.

In experimental terms, this fixation on a single strategy is known as ‘investment’. At the end of the game the players reported that, because of the constant level of deceleration, they could always shoot when the alien was at a specific distance from the wall if they stuck to the same pass. Players thus practiced a timing strategy specific to a particular alien pass (i.e., a specific difficulty level). The number of kills per unit time (i.e., the reward) was therefore always highest for that player when shooting at the same pass. In the example graphs (Figure 2), one player ‘invested’ in learning to shoot on pass four, the other, on pass five. This type of investment runs counter to one requirement of a hot-hand game, creating a major design flaw that needed to be fixed in the next iteration.

Stage Two–Encouraging Exploratory Play

The aim of the second stage of design was to overcome the problem of player investment in a single strategy. The proposed solution was to vary the position of the player’s ship so that it no longer appeared in the same location at the centre of the screen but rather was randomly shifted left and right of centre each time a new alien appeared (Figure 3). Thus, on each trial, the shooter’s location was sampled from a uniform distribution of 100 pixels to the left or to the right of the centre. This manipulation was intended to prevent the player from learning a single timing-sequence that was always successful on a single pass (such as always shooting on pass four when the alien was a certain distance from the side of the screen).

Figure 3: The screen in Stage two of game development. The blue rectangle appears here for illustration purposes and indicates the potential range of locations used to randomly position the player’s ship. It did not appear on the actual game screen.
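The random repositioning described above amounts to one uniform draw per alien. The following is a minimal sketch under stated assumptions: the screen-centre coordinate is hypothetical (the paper gives no screen size), and only the 100-pixel range comes from the text.

```python
import random

SCREEN_CENTRE_X = 275.0  # px; hypothetical, the paper gives no screen size
MAX_OFFSET = 100.0       # px either side of centre, as described in the text


def sample_shooter_x(rng, centre=SCREEN_CENTRE_X, max_offset=MAX_OFFSET):
    """Draw the shooter's x position uniformly within centre +/- max_offset."""
    return centre + rng.uniform(-max_offset, max_offset)


if __name__ == "__main__":
    rng = random.Random(2024)  # seeded only to make the demo repeatable
    print([round(sample_shooter_x(rng), 1) for _ in range(5)])
```

Because the horizontal lead needed to hit the alien changes with every draw, a timing learned for one pass no longer transfers directly to the next alien.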

Once again we deployed an online version of the game and recorded data from six players. Players once again played a practice level for six minutes before they played the competitive level for 12 minutes.

The results for all individual players in the competitive game level are shown in Figure 4. Introducing random variation into the player’s firing position significantly decreased players’ tendency to invest in and fixate on a single pass. This decrease in investment is highlighted by the increase in the variance seen in Figure 4 when compared to Figure 2. Thus, the slight change in gameplay had a significant effect on players’ behaviour, encouraging them to alter their risk-taking strategy throughout the game. Furthermore, this change helps to meet the requirements necessary for hot hand investigation.

Figure 4: Individual player results for the competition level in Stage two testing. Players’ tendency to fire on a single pass in the competition level has been significantly reduced compared to Stage One, as evidenced by the reduction in spikes and, in most cases, increase in variance. For each panel, n is the overall number of shots attempted by the player in that block, m is the mean firing pass, and sd is the standard deviation of the number of attempted shots.

In Figure 5 we present data averaged across all players for both the practice and competition levels. This summary highlights how the game’s reward structure influenced player strategy throughout play. The left column corresponds to the practice level (not shown in Figure 4), while the right column corresponds to the competition level.

Figure 5: Average player results for Stage two. The left column presents the frequency (%) of shots taken on each pass in the practice level, while the right column indicates the frequency (%) of shots taken on each pass in the competition level. For each panel, m is the mean firing pass and n is the overall number of shots attempted by all players in that block. A comparison of mean firing pass for practice and competition levels highlights that as the game progressed, players fired later.

An inspection of Figure 5 highlights the fact that players’ shooting strategy altered in a predictable manner as the game progressed. For example, the mean firing pass for the practice level (m = 5.8) was smaller than that seen in the competition level (m = 6.21). Thus players tended to shoot later in the competition level. This suggests that the reward structure of the game was biased towards firing at later passes, and that as players became familiar with this reward structure they altered their gameplay accordingly.
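The summary statistics quoted in the figure captions (n, m, sd) can be computed directly from a log of the pass on which each shot was fired. The sketch below assumes that sd is the sample standard deviation of the firing pass, which the text does not state explicitly, and the shot logs are made up for illustration.

```python
from statistics import mean, stdev


def summarise_firing_passes(passes):
    """Return (n, m, sd) for a list of pass numbers on which shots were fired."""
    if len(passes) < 2:
        raise ValueError("need at least two shots to estimate a spread")
    return len(passes), mean(passes), stdev(passes)


if __name__ == "__main__":
    # Made-up logs for illustration only; not data from the study.
    practice = [3, 4, 5, 5, 6, 6, 7, 8]
    competition = [5, 6, 6, 6, 7, 7, 7, 8]
    for label, shots in (("practice", practice), ("competition", competition)):
        n, m, sd = summarise_firing_passes(shots)
        print(f"{label}: n={n}, m={m:.2f}, sd={sd:.2f}")
```

A higher m in the competition block than in the practice block would indicate the same drift towards later shots that the text describes.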

Given the need to minimise such bias for hot hand investigation, we examined the risk and reward structure on the basis of average player performance. We were particularly interested in the probability of success for each pass, and how this probability translated into our reward system. Recall that firing on later passes takes more time but is also accompanied by a higher likelihood for success. As the aim of the hot hand game is to kill as many aliens as possible within a 12 minute period, both the probability of hits as well as the time taken to achieve these hits are important when considering the reward structure.

We therefore analysed how many kills per 12-minute block the average, hypothetical player would make if he or she were to consistently fire on a specific pass for each and every alien that appeared. For example, given the observed likelihood of success on pass one, how many kills would a player make by shooting only on pass one? How many by shooting only on pass two, and so on? Results of this examination are reported in Figure 6. Figure 6A shows the average number of shots taken by players on each pass of the alien (overall height of bar) along with the average number of hits at each pass (height of yellow part of the bar). Figure 6B uses these data to plot the observed probability of success and shows that the probability of success is higher for later passes. This empirically validates that later passes are in fact ‘easier’ in a psychological sense.

Figure 6: Averaged results and some modelling predictions from Stage two of game development. In Panel A, the frequency (%) of shots attempted on each pass is indicated by the overall height of each bar. The proportion of hits and misses are indicated in yellow and blue. Panel B depicts the average probability of a hit for each pass, given by the number of hits out of overall shot attempts. Based on the empirical results, Panels C and D show the predicted number of successful shots if players were to consistently shoot on only one pass for the entire game (see text for details).

These probabilities allow empirical estimation of the number of total kills likely to be attained by the hypothetical average player if they were to shoot on only one pass for an entire 12 minute block. By plotting the number of total kills expected for each pass number, we produce an optimal strategy curve for the current game, as shown in Figure 6C. The curve is monotonically increasing, indicating that the total number of kills expected of an average player increases as the pass number increases. In other words, players taking less difficult shots are expected to make more hits within each game. The reward structure is clearly biased toward later passes, which validates the change in player strategy (i.e. firing on later passes) as the game progressed. As the players became accustomed to the reward structure, their strategy shifted accordingly to favour later, easier shots.
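The single-pass strategy estimate can be sketched as a small calculation. This is a hedged reconstruction, not the authors' model: it assumes that each alien costs the time taken to reach the chosen pass, plus the miss penalty after a miss, and that the next alien appears as soon as that time has elapsed. The per-pass timings and tallies are hypothetical, since the text does not report them.

```python
BLOCK_SECONDS = 12 * 60  # one competition block lasts 12 minutes (from the text)


def hit_probability(hits, attempts):
    """Observed probability of a hit on one pass: hits out of shots attempted."""
    return hits / attempts


def expected_kills(p_hit, seconds_to_shot, miss_penalty, block_seconds=BLOCK_SECONDS):
    """Expected kills per block for a player who always fires on the same pass.

    ASSUMPTION: each alien costs seconds_to_shot, plus miss_penalty after a
    miss, and the next alien appears as soon as that time has elapsed.
    """
    seconds_per_alien = seconds_to_shot + (1.0 - p_hit) * miss_penalty
    return block_seconds / seconds_per_alien * p_hit


# Hypothetical tallies per pass: (hits, attempts, seconds from spawn to shot).
observed = {3: (27, 100, 3.0), 5: (45, 100, 5.0), 8: (72, 100, 8.0)}

# One point of the strategy curve per pass, using the 1.5 second penalty.
curve = {
    pass_number: expected_kills(hit_probability(hits, attempts), seconds, miss_penalty=1.5)
    for pass_number, (hits, attempts, seconds) in observed.items()
}
```

With these made-up inputs the curve rises with pass number, which is the shape the text reports for the 1.5 second penalty.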

In game terms it might be considered an exploit to shoot on pass eight. Figure 6C indicates that consistently firing on pass eight would clearly result in the greatest number of kills, making it the ‘optimal’ strategy for the average player. Given that an exploit of this kind makes players less likely to fire earlier in response to a run of successful shots, the current design still failed to meet the requirements for our hot hand game.

One simple adjustment to overcome this issue was to reduce the penalty period after an unsuccessful shot. The time penalty for a missed shot was set to 1.5 seconds at this stage, but the ability to vary this penalty allows a good deal of flexibility within the reward structure. Players who choose to fire on early passes make many more shots, and thus many more misses, so decreasing the time penalty for a miss substantially increases the relative reward for firing on early passes.

In line with this thinking, Figure 6D shows the predicted number of kills in 12 minutes for the average player if the penalty for missing is reduced from 1.5 seconds to 0.25 seconds. This seemingly small change balances the reward structure so that players are more evenly rewarded, at least for passes three to eight. Estimates of the accuracy rate on passes one and two were based on a small number of trials, which makes them problematic for modelling; participants avoided taking early shots, perhaps because the alien was moving too fast for them to intercept. Allowing players to fire on passes three to eight still provided us with a sufficient number of possible strategies for a hot hand investigation.
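The effect of the penalty on balance can be illustrated by comparing the best and worst single-pass strategies under both penalties. The sketch below is an assumption-laden illustration: the hit probabilities and timings for passes three to eight are invented, and the time model (time to the shot plus the penalty after a miss) is a simplification rather than the authors' model.

```python
def kills_by_pass(passes, miss_penalty, block_seconds=12 * 60):
    """Expected kills per block for each single-pass strategy.

    passes maps pass number -> (p_hit, seconds_to_shot).
    ASSUMPTION: an alien costs seconds_to_shot, plus miss_penalty after a miss.
    """
    return {
        k: block_seconds * p / (t + (1.0 - p) * miss_penalty)
        for k, (p, t) in passes.items()
    }


def reward_spread(kills):
    """Ratio of the best to the worst strategy; 1.0 means perfectly balanced."""
    return max(kills.values()) / min(kills.values())


# Hypothetical values for passes three to eight (not the study's data).
PASSES = {
    3: (0.27, 3.0), 4: (0.36, 4.0), 5: (0.45, 5.0),
    6: (0.54, 6.0), 7: (0.63, 7.0), 8: (0.72, 8.0),
}

spread_before = reward_spread(kills_by_pass(PASSES, miss_penalty=1.5))
spread_after = reward_spread(kills_by_pass(PASSES, miss_penalty=0.25))
```

Under these assumptions the spread moves closer to 1.0 when the penalty drops to 0.25 seconds, because early-pass strategies incur most of the misses and so benefit most from the shorter wait.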

Stage Three–Balancing Risk and Reward

In stage two of our design we uncovered an exploitation strategy in the risk and reward structure of the game where players could perform optimally by shooting on pass eight of the alien. We suspect this influenced players to fire at later passes of the alien, particularly as the game progressed. Using empirical data to model player performance suggested that reducing the time penalty for a miss to 0.25 seconds would overcome this problem.

A modified version of the game, with a 0.25-second penalty after a miss, was made available online and data were recorded from five players. Averaged results show that players shot at roughly the same mean pass of the alien in the practice level and the competitive level (Figure 7). This pattern is in contrast with Figure 4, which highlighted a tendency for players to fire at later passes in the 12-minute competitive level. These data support the empirical choice of a 0.25-second penalty, and provide yet another striking example of how subtle changes in reward structure may influence players’ behaviour.

Figure 7: Average player results for Stage three of game development. The left plot presents the frequency (%) of shots attempted on each pass in the practice level, while the right plot indicates the frequency (%) of shots attempted on each pass in the competition level. For each panel, m is the mean firing pass and n is the overall number of shots taken by all players in that block. As indicated by the mean firing pass, under a balanced reward structure players no longer attempted to shoot on later passes as the game progressed.

Recall that we began the development of a hot-hand game with the requirement that for each level of assumed risk the game should be equally rewarding (total number of kills) for the average player. By balancing the reward structure, the design from stage three is now consistent with this requirement for investigating the hot hand.

Finally, we required the game to have an overall level of difficulty such that players would succeed on about 40-60 percent of attempts. Performance within this range would allow us to compare player strategy in response to runs of both success and failure, that is, to test for both hot and cold streaks. As highlighted by Figure 8, the overall probability of success (hits) was 43%, which meets this criterion. Thus, the game now meets the essential criteria required to investigate the hot hand phenomenon.
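The difficulty check and the availability of both kinds of streak can be sketched from a chronological list of shot outcomes. The function names, the minimum run length of three, and the sample outcomes are assumptions made for illustration only.

```python
from itertools import groupby


def overall_success(outcomes):
    """Overall probability of success: hits out of all shots attempted."""
    return sum(outcomes) / len(outcomes)


def within_target(ps, low=0.40, high=0.60):
    """True when the success rate falls in the 40-60 percent band from the text."""
    return low <= ps <= high


def count_runs(outcomes, min_len=3):
    """Count runs of at least min_len consecutive hits and consecutive misses.

    ASSUMPTION: min_len=3 is an arbitrary threshold for calling a run a streak.
    """
    hot = cold = 0
    for was_hit, group in groupby(outcomes):
        if len(list(group)) >= min_len:
            if was_hit:
                hot += 1
            else:
                cold += 1
    return hot, cold


# Hypothetical outcome log: True is a hit, False is a miss.
log = [True, True, True, False, False, False, False, True, False, True, True, True]
ps = overall_success(log)
hot_runs, cold_runs = count_runs(log)
```

A success rate near the middle of the band matters because it leaves room for both hot and cold runs to occur often enough to be compared.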

Figure 8: Averaged results from the competition level of Stage three of game development. In Panel A, the frequency (%) of shots attempted on each pass is indicated by the overall height of each bar. The proportion of hits and misses are indicated in yellow and blue. Panel B depicts the average probability of success for each pass, given by the number of hits out of overall shot attempts. In Panel B, ps is the overall probability of success (hits).

Discussion

We set out to design a computer game as a tool for studying a fascinating and widely studied psychological phenomenon called the ‘hot hand’ (e.g., Gilovich, Vallone, & Tversky, 1985). For this we needed a game that allowed us to investigate player risk-taking in response to a string of successful or unsuccessful challenges.

We designed a simple top-down shooter game where players had a single shot at an alien spacecraft as it made eight passes across the screen. During the game the player faced this same challenge a number of times. The goal of the game was to kill as many aliens as possible in a set amount of time. The risk in the gameplay reduced on each pass as the alien ship slowed down. Shooting successfully on earlier passes rewarded the player with a kill and made a new alien appear immediately. Missing a shot penalised the player with an additional wait time before the next alien appeared.

As a hot hand game it was required to meet specific risk and reward criteria. Players should explore a range of risk-taking strategies in the game and they should be rewarded in a balanced way commensurate with this risk. We also wanted the game challenge to have an average success rate roughly equal to the failure rate, between 40 and 60 percent, so that we could use the game to gather data about players’ behaviour in response to both success and failure.

To achieve our objective we developed the game in an iterative fashion over three stages. At each stage we tested an online version of the game, gathering empirical data and analysing the players’ strategy and performance. In each successive stage of design we then altered the game mechanics so they were balanced in a way that met our specific hot hand requirements. The design changes and their effects are summarised in Table 1.

Table 1. A summary of changes to design in each of the stages and the effect of these changes on meeting the hot hand requirements.

Books on game design tend to prescribe an iterative design process. Iterative processes allow unforeseen problems to be addressed in successive stages of design. This is especially important in games where the requirements for the game mechanics are typically only partially known and tend to emerge as the game is built and played. Salen and Zimmerman describe this iterative process as “play-based” design and also emphasise the importance of “playtesting and prototyping” (2004, p. 4). For this purpose successive prototypes of the game are required. Indeed we began with only high-level requirements and used this same iterative, prototyping approach to refine our gameplay.

The main difference in our approach is that we more formally measured players’ strategies and exploration behaviours in each stage of design. Given that our game requirements are rather unique, it is unlikely that subjective feedback alone would have allowed us to make the required subtle changes to game mechanics. For example, during the initial testing of the game we found that players tended to invest in a single playing strategy. Further analysis also revealed a potential exploit in the game, as players could easily optimise their total number of kills by shooting on the last pass of each alien ship.

The issue of exploits in games is often debated in gaming circles and is also well studied in psychology. Indeed trade-offs between exploitation and exploration exist in many domains (e.g., Hills, Todd, & Goldstone, 2008; Walsh, 1996). External and internal conditions determine which strategy the organism, or the player, will take in order to maximise gains and minimise losses. For example, when foraging for food, the distribution of resources matters. Clumped resources lead to a focused search in the nearby vicinity where they are abundant (exploitation), whereas diffused resources lead to broader exploration of the search space.

Hills et al. showed that exploration and exploitation strategies compete in mental spaces as well, depending on the reward for desired information and the toll incurred by search time for exploration. In the context of our game, a shooting strategy of consistently attempting the easiest shooting level produced the highest reward. This encouraged players to drift toward later firing as the game progressed, and in turn inhibited players from exploring alternate (earlier firing) strategies. It is unlikely we could have predicted this without collecting empirical data from players.

A further advantage of gathering empirical data was that it allowed us to remodel our reward structure based on precise measures of player performance. In stages one and two players lost 1.5 seconds each time they missed an alien. In stage three we reduced this penalty to 0.25 seconds based on our analysis and modelling of player behaviour. This relatively minor change was enough to change players’ behaviour and encourage them to risk earlier shots at the alien. The fact that our game is quite simple in nature reinforces both the difficulty and importance of designing a well-balanced risk and reward structure.

Another common principle referred to in game literature is player-centred design, which Adams defines as “a philosophy of design in which the designer envisions a representative player of a game the designer wants to create” (2010, p. 30). Although player-centred design is often cited in game-design texts, there is some suggestion that design is often based purely on designer experience (Sotamaa, 2007). Involving players in the design process typically means gathering more subjective feedback through approaches such as focus groups and interviews, which have generally been used in usability design. Our study makes it clear that, even when designing a simple game challenge, the use of empirical data to measure how players approach the game and how they perform can be another vital element in balancing the gameplay.

We also recognise some dangers with this approach, as averaging player performance can hide important differences between players. It would be nice to have a model of an ideal player but it is unlikely such a player exists. In fact there are many different opinions about who the ‘player’ is (Sotamaa, 2007). The empirical data therefore need to be gathered from the available players’ population. If there are broad differences among these players then it may require the designer to sample different groups, for example, a group of casual players and a group of hard-core gamers.

Importantly for future research, the game design at which we arrived is now suitable to investigate the hot hand phenomenon. Such a game can potentially answer a number of questions:

1. How do players respond to a run of success or failure in a game challenge?

2. Will a player take on more difficult challenges if they are on a hot streak?

3. Will they lower their risk if they are on a cold streak?

4. How will this variable risk level impact on their overall measure of performance?

5. How can the hot hand principle be used in the design of game mechanics?
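Questions 2 and 3 could be approached by comparing the pass on which a player fires immediately after a run of hits with the pass chosen after a run of misses. The following is a minimal sketch of that comparison, assuming a chronological log of (pass number, hit) pairs for one player. The function name, the streak length of three, and the sample log are hypothetical and do not describe the authors' planned analysis.

```python
from statistics import mean


def mean_pass_after_streak(shots, streak_len=3):
    """Mean firing pass on the shot that follows a hot or cold streak.

    shots is a chronological list of (pass_number, hit) tuples.
    Returns (after_hot, after_cold); an entry is None when no streak occurred.
    ASSUMPTION: a streak is streak_len or more consecutive identical outcomes.
    """
    after_hot, after_cold = [], []
    run_value, run_len = None, 0
    for pass_number, hit in shots:
        # Record the pass chosen when the preceding run is long enough.
        if run_len >= streak_len:
            (after_hot if run_value else after_cold).append(pass_number)
        # Extend the current run or start a new one.
        if hit == run_value:
            run_len += 1
        else:
            run_value, run_len = hit, 1
    hot = mean(after_hot) if after_hot else None
    cold = mean(after_cold) if after_cold else None
    return hot, cold


# Hypothetical log: three hits, then an early (riskier) shot; three misses,
# then a late (safer) shot.
log = [(6, True), (6, True), (6, True), (4, False),
       (5, False), (6, False), (7, True)]
after_hot, after_cold = mean_pass_after_streak(log)
```

A lower mean pass after hot streaks than after cold streaks would be consistent with players taking on more risk when they feel hot, which is the behaviour the game was balanced to detect.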

Answers to such questions will not only be of interest to psychologists, but could also further inform game design. For example, it might allow the designer to engineer a hot streak so that players would take more risks or be more explorative in their strategies. Of course in a game it might even be appropriate to use a cold streak to discourage a player’s current strategy. The game mechanics could help engineer these streaks in a very transparent way without breaking player immersion. Further investigations of the hot hand hold significant promise for both psychology and game design.(source:gamestudies)